You can optimize caching mechanisms for high-throughput inference by using an LRU (Least Recently Used) cache to store frequently accessed model outputs and avoid redundant computations.
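As an illustration of the idea, here is a minimal sketch of an LRU cache built on `collections.OrderedDict`. It is not the original snippet. It assumes the model is deterministic for a given input, so a cached output is always valid. `LRUCache`, `get_or_compute`, and `fake_model` are hypothetical names chosen for this example.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._store: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        if key in self._store:
            # Cache hit: mark the entry as most recently used.
            self._store.move_to_end(key)
            self.hits += 1
            return self._store[key]
        # Cache miss: run the expensive computation and store the result.
        self.misses += 1
        value = compute()
        self._store[key] = value
        if len(self._store) > self.capacity:
            # Evict the least recently used entry (front of the OrderedDict).
            self._store.popitem(last=False)
        return value


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for an expensive inference call.
    return prompt.upper()


cache = LRUCache(capacity=2)
for p in ["a", "b", "a", "c", "b"]:
    cache.get_or_compute(p, lambda p=p: fake_model(p))
print(cache.hits, cache.misses)  # prints "1 4"
```

With a capacity of 2, the repeated `"a"` is served from the cache. Inserting `"c"` evicts `"b"`, so the final `"b"` request is recomputed. `OrderedDict` keeps both the lookup and the recency update at O(1).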

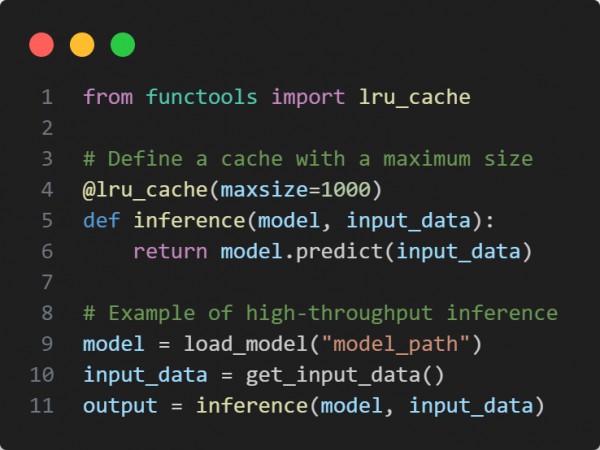

Here is the code snippet:

The code above relies on the following key points:

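Hence, this caching mechanism avoids recomputing outputs for inputs that have already been seen. This raises throughput and lowers average latency for repeated requests, while the LRU eviction policy keeps memory use bounded.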
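If you do not need custom eviction logic, Python's built-in `functools.lru_cache` gives the same behavior with less code. The sketch below assumes a deterministic model. `cached_inference` and `predict` are hypothetical names, and the weighted sum stands in for a real forward pass. Note that `lru_cache` requires hashable arguments, so list inputs are converted to tuples first.

```python
from functools import lru_cache

CALLS = 0  # counts how many times the "model" actually runs


@lru_cache(maxsize=1024)
def cached_inference(features: tuple) -> float:
    # Hypothetical model: a weighted sum stands in for a real forward pass.
    global CALLS
    CALLS += 1
    weights = (0.5, 0.25, 0.25)
    return sum(w * x for w, x in zip(weights, features))


def predict(features: list) -> float:
    # lru_cache needs hashable arguments, so convert the list to a tuple.
    return cached_inference(tuple(features))


for batch in ([1.0, 2.0, 3.0], [1.0, 2.0, 3.0], [4.0, 0.0, 0.0]):
    predict(batch)

print(cached_inference.cache_info())
```

Here the second, identical request is a cache hit, so the model body runs only twice for three requests. `cache_info()` reports hits, misses, and current size, which is useful for checking whether the cache is actually helping under real traffic.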
