To create domain-adapted language models in Julia, you can fine-tune pre-trained models like BERT or GPT-2 on industry-specific data. Use the Transformers.jl library to work with these models.

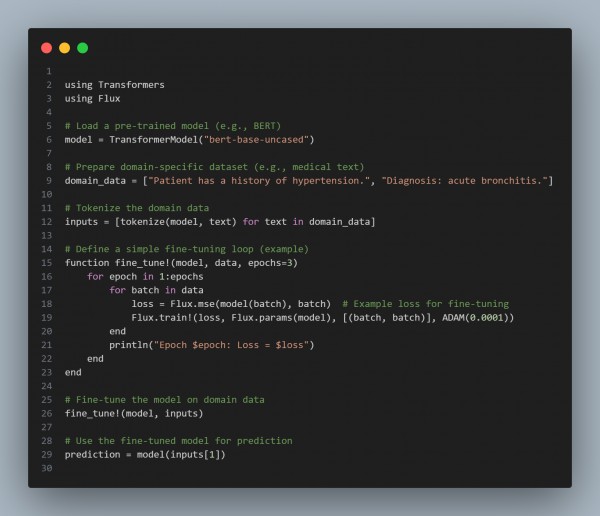

Here is the code you can refer to:

The code above covers the following steps:

- Pre-trained Model: Load a pre-trained transformer model like BERT.

- Domain-Specific Corpus: Gather a dataset specific to your industry (e.g., medical documents, legal texts).

- Tokenization: Tokenize the domain data into a suitable format.

- Fine-tuning: Train the model further using domain data to adapt it to the specific vocabulary and context.

- Inference: Use the fine-tuned model to generate predictions related to your domain.
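As a rough illustration, the five steps above might look like the following in Julia. This is a minimal sketch, not a definitive implementation: it assumes a two-class classification task as the downstream objective, uses two made-up sentences as a stand-in for a real domain corpus, and relies on the Transformers.jl calls `load_config`, `load_model`, `load_tokenizer`, and `encode` together with Flux's explicit-gradient API as I understand them. Check the names and signatures against the versions you have installed.

```julia
using Flux                        # loss, optimiser, gradients
using Transformers
using Transformers.TextEncoders   # encode
using Transformers.HuggingFace    # load_config, load_model, load_tokenizer

# 1. Pre-trained model: BERT with a classification head.
#    The head is newly initialised; num_labels = 2 is an assumption for this sketch.
cfg     = HuggingFace.HGFConfig(load_config("bert-base-uncased"); num_labels = 2)
model   = load_model("bert-base-uncased", :ForSequenceClassification; config = cfg)
textenc = load_tokenizer("bert-base-uncased")

# 2. Domain-specific corpus: hypothetical placeholder data.
#    In practice, load your own labelled documents here.
texts  = ["Patient presents with acute chest pain.",
          "The lessee shall pay rent on the first day of each month."]
labels = [1, 2]                   # 1 = medical, 2 = legal (illustrative only)

# 3. Tokenization: turn the batch of sentences into model inputs.
input  = encode(textenc, texts)
target = Flux.onehotbatch(labels, 1:2)

# 4. Fine-tuning: a few gradient steps on the domain data.
loss(m, x, y) = Flux.logitcrossentropy(m(x).logit, y)
opt_state = Flux.setup(Flux.Adam(1f-5), model)

for epoch in 1:3
    l, grads = Flux.withgradient(m -> loss(m, input, target), model)
    Flux.update!(opt_state, model, grads[1])
    @info "finished epoch" epoch loss = l
end

# 5. Inference: predict the class of an unseen domain sentence.
new_input = encode(textenc, ["The tenant must give thirty days notice."])
pred = Flux.onecold(model(new_input).logit, 1:2)
```

With a real corpus you would iterate over mini-batches instead of a single fixed batch, hold out a validation set, and move the model and data to a GPU if one is available.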

Hence, this approach allows you to tailor a general language model to meet the specific needs of niche industries.
