One approach is to send only the most recent messages in each run, so that you avoid hitting the model's token limit.
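A minimal sketch of this "most recent messages" idea follows. It assumes the chat history is a list of `{"role": ..., "content": ...}` dicts, and it uses a rough word-count estimate in place of a real tokenizer. The names `estimate_tokens`, `keep_recent_messages`, `token_limit` and `max_response_tokens` are hypothetical and chosen for illustration only.

```python
def estimate_tokens(text: str) -> int:
    # Rough, hypothetical heuristic: one token per whitespace-separated word.
    return len(text.split())


def keep_recent_messages(messages, token_limit, max_response_tokens):
    """Return the newest messages whose estimated size fits the prompt budget."""
    # Reserve part of the context window for the model's reply.
    budget = token_limit - max_response_tokens
    kept = []
    used = 0
    # Walk the history from newest to oldest and stop at the first message that does not fit.
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    # Restore chronological order before sending the messages to the API.
    kept.reverse()
    return kept


history = [
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five"},
    {"role": "user", "content": "six seven eight nine"},
]
# Budget is 10 - 4 = 6 estimated tokens, so only the last two messages fit.
print(keep_recent_messages(history, token_limit=10, max_response_tokens=4))
```

Dropping whole messages from the oldest end keeps each remaining message intact. In practice you may also want to always keep a system message regardless of age, which this sketch does not do.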

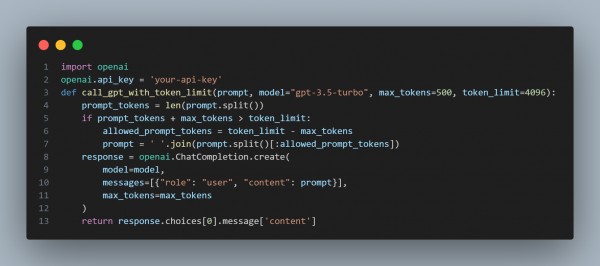

To support this point, here is my reference code, which calls the GPT model without exceeding its token limit.

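This example estimates the token count roughly from the word count (`len(prompt.split())`). If the prompt plus the desired response length exceeds the model's token limit (`token_limit`), the prompt is trimmed to fit within the limit. Because one English word is often more than one token, a word count tends to underestimate the real token count, so it is worth leaving some margin. You can use this method to optimize your GPT-3.5/GPT-4 API usage.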
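The trimming step described above could look roughly like the sketch below. It is not the code from the screenshot. The function name `trim_prompt` and the parameters `token_limit` and `max_response_tokens` are hypothetical, and keeping the *last* words of the prompt (rather than the first) is an assumption, made to match the "most recent messages" approach.

```python
def trim_prompt(prompt: str, token_limit: int, max_response_tokens: int) -> str:
    """Trim a prompt so that prompt + response fit in token_limit (word-count estimate)."""
    words = prompt.split()
    # Tokens left for the prompt after reserving room for the response.
    budget = token_limit - max_response_tokens
    if budget <= 0:
        # No room for any prompt text (guards against words[-0:] returning everything).
        return ""
    if len(words) <= budget:
        # Already within the limit: return the prompt unchanged.
        return prompt
    # Keep only the most recent `budget` words (assumption: the tail matters most).
    return " ".join(words[-budget:])


print(trim_prompt("a b c d e f", token_limit=6, max_response_tokens=2))  # prints "c d e f"
```

For exact counts, you could replace the word-count estimate with a real tokenizer such as OpenAI's `tiktoken` library; the trimming logic stays the same.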

In this way, you can avoid token overflow and keep responses concise and within the token limit.
