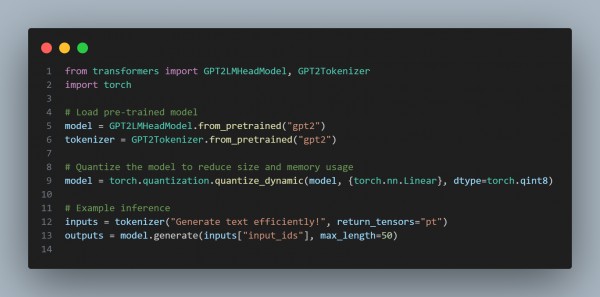

Generative AI models can be optimized for low-bandwidth environments with techniques such as quantization, pruning, and knowledge distillation. Each one reduces the model's size and computational requirements, so the model is cheaper to download, update, and run where bandwidth is limited. Here is the code snippet you can refer to:

The code above uses the following techniques:

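- Quantization: Reduces the numerical precision of model weights (for example, from 32-bit floats to 8-bit integers), shrinking the model so it needs less bandwidth to transfer and less compute to run.

- Model Pruning: Removes the weights that contribute least to the output (for example, those with the smallest magnitude) to reduce model size.

- Knowledge Distillation: Trains a smaller "student" model to reproduce the outputs of a larger "teacher" model, yielding a compact model for efficient deployment.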
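As a rough, framework-free illustration of the three techniques listed above, the following sketch operates on a plain Python list standing in for one weight tensor. The function names (`quantize_int8`, `magnitude_prune`, `distillation_loss`) are hypothetical and chosen for this example; they are not taken from the original snippet. It assumes symmetric per-tensor int8 quantization, unstructured magnitude pruning, and a temperature-softened KL-divergence distillation loss, which are common variants but not the only ones.

```python
import math
from array import array


# Hypothetical helper (illustration only): symmetric per-tensor int8 quantization.
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 values and the scale."""
    return [v * scale for v in q]


# Hypothetical helper (illustration only): unstructured magnitude pruning.
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest |w|."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical helper (illustration only): the loss a small student model would minimize.
def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T**2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    weights = [0.8, -0.05, 0.3, -1.2, 0.01, 0.6]

    q, scale = quantize_int8(weights)
    print("int8 values:", q, "scale:", scale)
    print("reconstructed:", dequantize(q, scale))
    # Transfer payload: float32 (4 bytes per weight) vs int8 (1 byte per weight).
    print(len(array("f", weights).tobytes()), len(array("b", q).tobytes()))  # 24 6

    print("pruned:", magnitude_prune(weights, sparsity=0.5))

    teacher = [1.0, 2.0, 3.0]
    print("distillation loss:", distillation_loss([0.5, 1.5, 3.5], teacher))
```

The byte counts show where the bandwidth saving comes from: each weight drops from 4 bytes to 1, plus a single float scale per tensor. Pruning only reduces transfer size if the zeroed weights are then stored in a sparse or compressed format. For real models, frameworks provide these operations directly; PyTorch, for instance, ships `torch.nn.utils.prune` for pruning along with its own quantization utilities.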

Hence, by applying these techniques, you can optimize generative AI models for low-bandwidth environments.
