To optimize the scalability of Generative AI models in cloud environments, use auto-scaling with Kubernetes, model sharding, and asynchronous processing to efficiently handle variable workloads.
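Kubernetes' Horizontal Pod Autoscaler (HPA) scales on the ratio of an observed metric to its target: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). It skips scaling when that ratio is within a tolerance of 1.0, which defaults to 0.1. The Python sketch below reproduces this calculation to show how replica counts follow load. The function name, its parameters, and the min/max bounds are illustrative assumptions. They are not part of any Kubernetes client library.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,    # illustrative bound (minReplicas in an HPA spec)
    max_replicas: int = 10,   # illustrative bound (maxReplicas in an HPA spec)
    tolerance: float = 0.1,   # mirrors the HPA default tolerance
) -> int:
    """Replica count following the Horizontal Pod Autoscaler formula.

    desired = ceil(current_replicas * current_metric / target_metric),
    left unchanged when the ratio is within `tolerance` of 1.0, then
    clamped to [min_replicas, max_replicas].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Small deviations from the target do not trigger scaling (avoids flapping).
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 pods at 90% average CPU utilization against a 60% target
    print(desired_replicas(3, 90, 60))  # → 5
```

In a real cluster you declare the target and bounds in an HPA object, and the controller does this arithmetic for you. The sketch only makes the scaling rule concrete.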

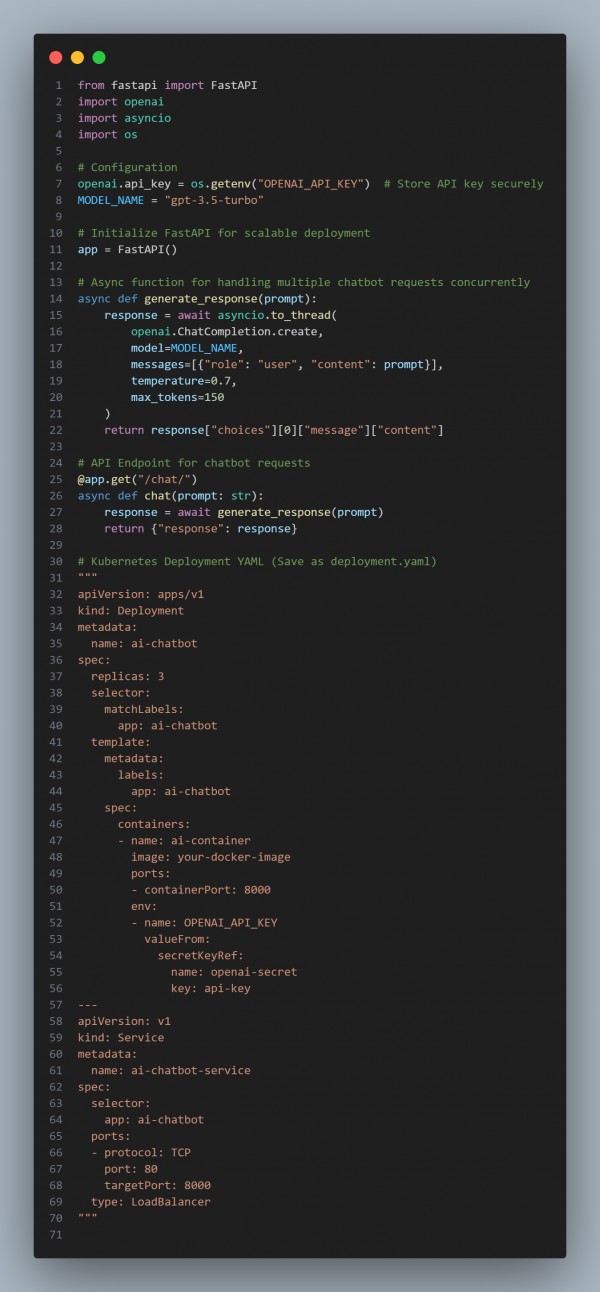

Here is the code snippet you can refer to:

The code above relies on the following key points:

- FastAPI for High Performance – Uses FastAPI to handle concurrent requests efficiently.

- Asynchronous Processing – Uses asyncio to handle multiple chatbot requests concurrently instead of one at a time.

- Kubernetes Auto-Scaling – Deploys the service on Kubernetes, which load-balances requests across pods and adjusts the number of replicas as load changes.

- Secure API Key Management – Reads API keys from environment variables populated from Kubernetes Secrets, keeping credentials out of the source code.

- Containerization with Docker – Ensures portability and easy deployment across cloud environments.
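The asynchronous-processing and API-key points can be illustrated with a sketch that uses only the standard library. It assumes the model is reached through an I/O-bound API call, which is simulated here by the hypothetical `fake_model_call`. `CHATBOT_API_KEY` and `MAX_CONCURRENCY` are likewise illustrative names and are not taken from the original snippet. In a FastAPI app, an `async def` route could await `handle_request` in the same way.

```python
import asyncio
import os

# Hypothetical variable name; on Kubernetes it could be injected from a Secret
# through the pod spec instead of being hard-coded.
API_KEY = os.environ.get("CHATBOT_API_KEY", "")

# Cap on in-flight model calls per pod (an assumed value to tune per workload).
MAX_CONCURRENCY = 4


async def fake_model_call(prompt: str, api_key: str) -> str:
    """Stand-in for a real, network-bound LLM API call (hypothetical)."""
    # A real client would send this header with the request; it is never printed.
    _headers = {"Authorization": f"Bearer {api_key}"}
    await asyncio.sleep(0.1)  # simulate I/O latency without blocking the event loop
    return f"echo: {prompt}"


async def handle_request(prompt: str, limiter: asyncio.Semaphore) -> str:
    # At most MAX_CONCURRENCY calls run at once; the rest wait here
    # instead of piling onto the model backend.
    async with limiter:
        return await fake_model_call(prompt, API_KEY)


async def handle_batch(prompts: list[str]) -> list[str]:
    limiter = asyncio.Semaphore(MAX_CONCURRENCY)
    # gather runs the coroutines concurrently and preserves input order.
    return await asyncio.gather(*(handle_request(p, limiter) for p in prompts))


if __name__ == "__main__":
    results = asyncio.run(handle_batch([f"question {i}" for i in range(8)]))
    print(results[0])  # → echo: question 0
```

asyncio interleaves coroutines on a single thread. That helps when each request waits on a remote model. CPU- or GPU-bound inference running in the same process would block the event loop, so that kind of work is better scaled out by adding pods.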

In short, Kubernetes auto-scaling, asynchronous processing, and containerized deployment together let Generative AI services handle large, variable workloads in the cloud while maintaining performance.
