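You can replace backpropagation with the Forward-Forward algorithm, which trains a network with two forward passes instead of a forward and a backward pass: one on positive (real) data and one on negative data. Each layer adjusts its own weights so that a local "goodness" score (for example, the sum of its squared activities) is high for positive data and low for negative data. A layer may still use the gradient of its own local objective, but no error signal is propagated backward through the network.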
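A minimal sketch of this per-layer objective, assuming the formulation from Hinton's 2022 Forward-Forward paper: a layer's goodness is the sum of its squared activities, and the probability that an input is positive is the logistic function of goodness minus a threshold. The function names and the threshold value of 2.0 are hypothetical choices for illustration.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def softplus(x):
    # Numerically stable log(1 + exp(x))
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def goodness(activities):
    # Goodness of a layer: the sum of its squared activities
    return sum(a * a for a in activities)


def p_positive(activities, threshold):
    # Probability that the layer's input was positive (real) data
    return sigmoid(goodness(activities) - threshold)


def layer_loss(pos_activities, neg_activities, threshold):
    # -log p(positive) for the positive pass plus -log(1 - p(positive))
    # for the negative pass; only this layer's activities are involved
    return (softplus(threshold - goodness(pos_activities))
            + softplus(goodness(neg_activities) - threshold))


print(p_positive([1.0, 2.0], threshold=2.0))  # sigmoid(3.0), about 0.953
print(p_positive([0.1, 0.2], threshold=2.0))  # sigmoid(-1.95), about 0.12
```

Because the loss depends only on one layer's activities, each layer can be optimized without waiting for an error signal from the layers above it.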

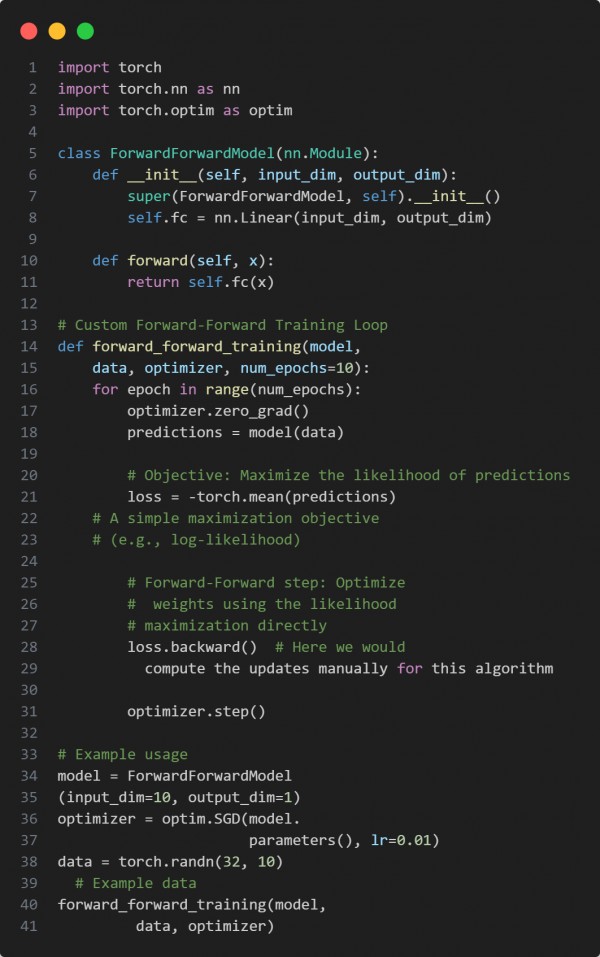

Here is the code snippet below:

In the above code, the key points are:

- The `ForwardForwardModel` is a simple feedforward neural network.
- The custom `forward_forward_training` loop replaces backpropagation-based gradient descent with an iterative process of forward passes focused on maximizing the likelihood of the outputs.
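The following is a minimal, self-contained sketch of Forward-Forward training in pure Python; it is not the article's `ForwardForwardModel` or `forward_forward_training`. It assumes Hinton's formulation: each ReLU layer is trained greedily on its own loss, softplus(threshold - G) for positive inputs and softplus(G - threshold) for negative inputs, with the local gradient written out by hand, and activity vectors are length-normalized before being passed to the next layer. The toy data, `FFLayer`, `train_greedy`, `total_goodness`, the threshold of 2.0, and the learning rate of 0.03 are all hypothetical.

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def normalize(y, eps=1e-8):
    # Divide by the vector length so the next layer sees only the direction
    # of the activities, not this layer's goodness
    norm = math.sqrt(sum(v * v for v in y))
    return [v / (norm + eps) for v in y]


class FFLayer:
    """Hypothetical fully connected ReLU layer trained only on its own local loss."""

    def __init__(self, n_in, n_out, rng, threshold=2.0, lr=0.03):
        self.w = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out
        self.threshold = threshold
        self.lr = lr

    def forward(self, x):
        return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + bj)
                for row, bj in zip(self.w, self.b)]

    def update(self, x, is_positive):
        y = self.forward(x)
        g = sum(v * v for v in y)  # goodness of this layer
        # Derivative of the local loss with respect to goodness:
        # positive: softplus(threshold - g), negative: softplus(g - threshold)
        if is_positive:
            dl_dg = -sigmoid(self.threshold - g)
        else:
            dl_dg = sigmoid(g - self.threshold)
        for j, yj in enumerate(y):
            if yj == 0.0:
                continue  # inactive ReLU unit: zero local gradient
            step = self.lr * dl_dg * 2.0 * yj  # dG/dy_j = 2 * y_j
            row = self.w[j]
            for k, xk in enumerate(x):
                row[k] -= step * xk
            self.b[j] -= step


def train_greedy(layers, pos, neg, epochs=50):
    # Train one layer at a time; no gradient ever crosses a layer boundary
    for layer in layers:
        for _ in range(epochs):
            for xp, xn in zip(pos, neg):
                layer.update(xp, True)   # forward pass on positive data
                layer.update(xn, False)  # forward pass on negative data
        # Freeze the layer and feed its normalized activities to the next one
        pos = [normalize(layer.forward(x)) for x in pos]
        neg = [normalize(layer.forward(x)) for x in neg]


def total_goodness(layers, x):
    total = 0.0
    for layer in layers:
        y = layer.forward(x)
        total += sum(v * v for v in y)
        x = normalize(y)
    return total


def make_data(rng, n):
    # Toy data: positive = features 0-1 active, negative = features 2-3 active
    def noise():
        return rng.gauss(0.0, 0.1)

    pos = [[1 + noise(), 1 + noise(), noise(), noise()] for _ in range(n)]
    neg = [[noise(), noise(), 1 + noise(), 1 + noise()] for _ in range(n)]
    return pos, neg


rng = random.Random(0)
pos_train, neg_train = make_data(rng, 20)
layers = [FFLayer(4, 16, rng), FFLayer(16, 16, rng)]
train_greedy(layers, pos_train, neg_train)

pos_test, neg_test = make_data(rng, 10)
mean_pos = sum(total_goodness(layers, x) for x in pos_test) / len(pos_test)
mean_neg = sum(total_goodness(layers, x) for x in neg_test) / len(neg_test)
print(f"mean goodness: positive={mean_pos:.2f}, negative={mean_neg:.2f}")
```

After training, positive inputs should have a clearly higher total goodness than negative ones. In a supervised setup, positive data typically embed the correct label in the input and negative data a wrong one, and a class is predicted by picking the label that yields the highest goodness.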

Hence, the Forward-Forward algorithm offers an alternative to backpropagation: it relies on forward passes and a layer-local objective to optimize the model parameters, rather than on an error signal propagated backward through the network.
