To avoid exploding gradients when training large-scale generative models, you can clip gradients, lower the learning rate, or add batch normalization.

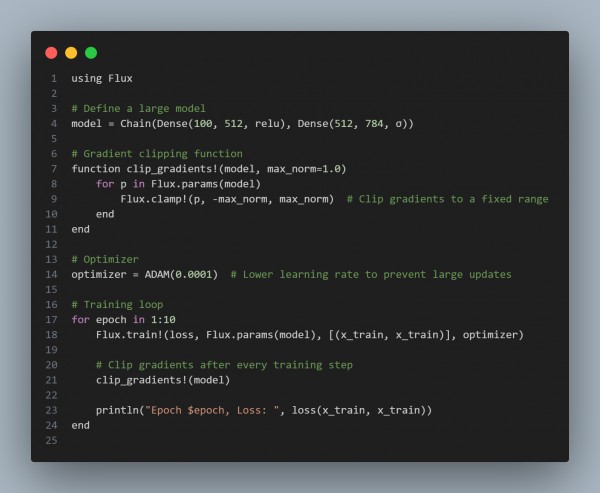

Here is the code snippet you can refer to:

The code uses the following techniques:

- Gradient Clipping: `Flux.clamp!` clamps each gradient element in place to a specified range (for example, [-1, 1]), so no single gradient value can grow large enough to destabilize training.

- Lower Learning Rate: A smaller learning rate scales down every parameter update, which reduces the risk of large steps that make training unstable.

- Batch Normalization (optional): Normalizing each layer's activations over the mini-batch keeps their scale stable from layer to layer, which helps prevent gradients from exploding.
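Because the original snippet is only available as an image, here is a minimal sketch of the three techniques listed above in plain Julia, using only `Base` plus the `LinearAlgebra` and `Statistics` standard libraries so it runs without Flux. The function names (`clip_by_value!`, `clip_by_norm!`, `sgd_step!`, `batchnorm`) are hypothetical helpers for illustration, not Flux API. `clip_by_norm!` shows norm clipping, a common alternative to element-wise clipping that is not in the original answer, and `batchnorm` is a simplified training-mode version without learnable scale/shift or running statistics; in a real Flux model you would use Flux's `BatchNorm` layer instead.

```julia
using LinearAlgebra  # norm
using Statistics     # mean, var

# Element-wise clipping: clamp every gradient entry to [-clip, clip] in place
# (the same idea as calling clamp! on the gradient array).
function clip_by_value!(g::AbstractArray, clip::Real)
    clamp!(g, -clip, clip)
    return g
end

# Norm clipping (hypothetical helper): rescale the whole gradient so its
# L2 norm is at most max_norm; unlike element-wise clipping, this keeps
# the gradient's direction.
function clip_by_norm!(g::AbstractArray, max_norm::Real)
    n = norm(g)
    if n > max_norm
        g .*= max_norm / n
    end
    return g
end

# Plain SGD update: a small learning rate η shrinks every step.
function sgd_step!(θ::AbstractArray, g::AbstractArray, η::Real)
    θ .-= η .* g
    return θ
end

# Simplified batch normalization (training mode only, no learnable
# scale/shift): columns are samples, so each feature (row) is shifted to
# zero mean and scaled to unit variance across the batch.
function batchnorm(x::AbstractMatrix; ϵ = 1e-5)
    μ = mean(x; dims = 2)
    σ² = var(x; dims = 2, corrected = false)
    return (x .- μ) ./ sqrt.(σ² .+ ϵ)
end

# Usage with an "exploding" gradient
g_exploding = [300.0, -4.0, 0.5]
g_val  = clip_by_value!(copy(g_exploding), 1.0)  # [1.0, -1.0, 0.5]
g_norm = clip_by_norm!(copy(g_exploding), 5.0)   # same direction, norm ≈ 5.0

θ = zeros(3)
sgd_step!(θ, g_norm, 1e-3)    # small learning rate keeps the step small

x  = randn(4, 32) .* 100      # 4 features, batch of 32, large activations
xn = batchnorm(x)             # each row now has ≈ zero mean, unit variance
```

Element-wise clipping is simple but can change the gradient's direction when only some entries are clipped; norm clipping avoids that at the cost of computing the full norm. Either can be combined with a smaller learning rate.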

Combining these techniques helps keep gradients under control and training stable in large-scale generative models.
