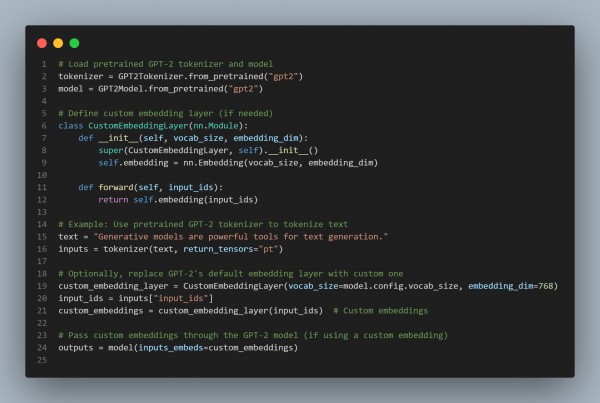

To use a custom embedding layer with transformer models such as GPT-2 or BERT, you can use the embedding layer to convert input token IDs into continuous vector representations before passing them to the model. Here is a code snippet you can refer to:
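Here is a minimal sketch of the first step, assuming PyTorch. `nn.Embedding` acts as a learnable lookup table from token IDs to vectors. The vocabulary size, embedding width and token IDs below are arbitrary illustration values, not taken from any particular model.

```python
import torch
import torch.nn as nn

# Illustrative sizes (assumptions, not values from GPT-2 or BERT).
VOCAB_SIZE = 1000
EMBED_DIM = 64

# Row i of embedding.weight is the learnable vector for token ID i.
embedding = nn.Embedding(num_embeddings=VOCAB_SIZE, embedding_dim=EMBED_DIM)

# A batch of 2 sequences with 5 hypothetical token IDs each.
token_ids = torch.tensor([[12, 7, 256, 3, 999],
                          [1, 2, 3, 4, 5]])

# One vector per token: shape (batch, seq_len, embed_dim).
vectors = embedding(token_ids)
print(vectors.shape)  # torch.Size([2, 5, 64])
```

The same token ID always maps to the same row, so token 3 gets an identical vector in both sequences.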

In the code above, we are using:

- Embedding Layer: the `nn.Embedding` layer converts token IDs into continuous vector representations.
- GPT-2/BERT Integration: you can create a custom embedding layer and pass its output directly to the model through the `inputs_embeds` argument instead of `input_ids`.
- Pretrained Models: by default, models like GPT-2 and BERT already have their own embedding layers, but you can replace them with a custom one if needed.
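Here is a minimal sketch of the `inputs_embeds` integration, assuming PyTorch and the Hugging Face `transformers` library. So that it runs without downloading weights, it builds a tiny, randomly initialised `GPT2Model` from a `GPT2Config` with illustrative sizes. With real weights you would load a checkpoint via `from_pretrained` instead. `BertModel` accepts the same `inputs_embeds` argument. The one hard requirement is that the custom layer's output width matches the model's hidden size (`n_embd` for GPT-2, `hidden_size` for BERT).

```python
import torch
import torch.nn as nn
from transformers import GPT2Config, GPT2Model

# Tiny randomly initialised GPT-2 (illustrative sizes, no pretrained download).
config = GPT2Config(vocab_size=1000, n_positions=64, n_embd=32, n_layer=2, n_head=2)
model = GPT2Model(config)
model.eval()  # disable dropout for inference

# Hypothetical custom embedding layer; its width must equal the model's n_embd.
custom_embedding = nn.Embedding(config.vocab_size, config.n_embd)

token_ids = torch.tensor([[10, 20, 30, 40]])
inputs_embeds = custom_embedding(token_ids)  # shape (1, 4, 32)

with torch.no_grad():
    # Pass vectors instead of input_ids: the model skips its own token lookup.
    outputs = model(inputs_embeds=inputs_embeds)

print(outputs.last_hidden_state.shape)  # torch.Size([1, 4, 32])
```

Passing `inputs_embeds` only replaces the token lookup. GPT-2 still adds its own learned position embeddings to the vectors you supply.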

Hence, this approach allows flexibility in adjusting the input token embeddings while leveraging the transformer architecture for generation.
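For the "replace them with a custom one" case, you can swap the model's built-in token embedding instead of passing `inputs_embeds` on every call. Here is a minimal sketch, assuming Hugging Face `transformers`, where `set_input_embeddings` is available on its models. It again uses a tiny randomly initialised `GPT2Model` with illustrative sizes. After the swap, ordinary `input_ids` go through the custom layer and produce the same hidden states as computing the embeddings yourself.

```python
import torch
import torch.nn as nn
from transformers import GPT2Config, GPT2Model

torch.manual_seed(0)

# Tiny randomly initialised GPT-2 (illustrative sizes, no pretrained download).
config = GPT2Config(vocab_size=1000, n_positions=64, n_embd=32, n_layer=2, n_head=2)
model = GPT2Model(config)
model.eval()  # disable dropout so both forward passes are comparable

# Hypothetical replacement layer: same vocabulary, same width (n_embd).
custom_embedding = nn.Embedding(config.vocab_size, config.n_embd)
model.set_input_embeddings(custom_embedding)  # replaces the built-in token embedding

token_ids = torch.tensor([[10, 20, 30, 40]])
with torch.no_grad():
    # Path 1: ordinary input_ids, now looked up through the custom layer.
    out_ids = model(input_ids=token_ids).last_hidden_state
    # Path 2: embeddings computed outside the model and passed via inputs_embeds.
    out_embeds = model(inputs_embeds=custom_embedding(token_ids)).last_hidden_state

print(torch.allclose(out_ids, out_embeds))  # True
```

This sketch uses the bare `GPT2Model`. In a language-modelling head such as `GPT2LMHeadModel`, the output projection is normally tied to the input embedding, so replacing the input layer there also affects that tying.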
