Partitioning a large dataset for multi-host TPU training ensures each host processes a distinct data shard, enabling efficient parallelism and reducing communication overhead.

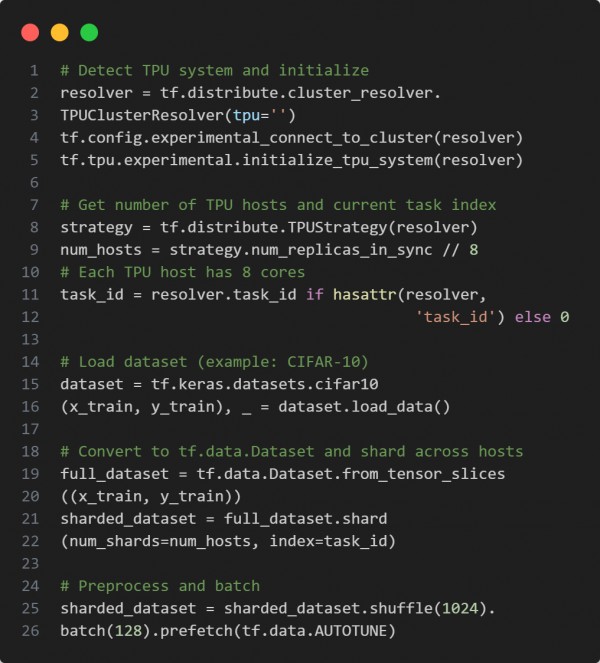

Here is the code snippet you can refer to:
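A minimal sketch of such a pipeline, assuming TensorFlow 2.4 or later. The helper names (`connect_tpu`, `shard_for_host`, `make_dataset_fn`, `build_distributed_dataset`), the placeholder `Dataset.range` source, and `REPLICAS_PER_HOST = 8` are assumptions for illustration, not part of any library. The sketch takes the host count and host index from the `tf.distribute.InputContext` that TensorFlow passes to the dataset function, and uses the replica count only for a rough host estimate.

```python
import tensorflow as tf

# Assumption: 8 TPU replicas (cores) per host; adjust for your hardware.
REPLICAS_PER_HOST = 8


def connect_tpu(tpu_name=""):
    """Resolve the TPU cluster and return a TPUStrategy (needs a TPU runtime)."""
    resolver = tf.distribute.cluster_resolver.TPUClusterResolver(tpu=tpu_name)
    tf.config.experimental_connect_to_cluster(resolver)
    tf.tpu.experimental.initialize_tpu_system(resolver)
    return tf.distribute.TPUStrategy(resolver)


def shard_for_host(dataset, num_hosts, task_id, batch_size, shuffle_buffer=10_000):
    """Keep only this host's slice, then shuffle, batch, and prefetch it."""
    # shard() keeps element i only when i % num_hosts == task_id.
    dataset = dataset.shard(num_shards=num_hosts, index=task_id)
    dataset = dataset.shuffle(shuffle_buffer)
    # drop_remainder=True keeps batch shapes static, which TPUs require.
    dataset = dataset.batch(batch_size, drop_remainder=True)
    return dataset.prefetch(tf.data.AUTOTUNE)


def make_dataset_fn(global_batch_size, num_examples=1_000_000):
    """Build a dataset_fn for strategy.distribute_datasets_from_function."""
    def dataset_fn(input_context):
        # Placeholder data; swap in your real source (e.g. TFRecord files).
        dataset = tf.data.Dataset.range(num_examples)
        return shard_for_host(
            dataset,
            num_hosts=input_context.num_input_pipelines,
            task_id=input_context.input_pipeline_id,
            batch_size=input_context.get_per_replica_batch_size(global_batch_size),
        )
    return dataset_fn


def build_distributed_dataset(global_batch_size=1024):
    """Connect to the TPU and return (strategy, per-host sharded dataset)."""
    strategy = connect_tpu()
    # Rough host count derived from total replicas (see assumption above).
    num_hosts = max(1, strategy.num_replicas_in_sync // REPLICAS_PER_HOST)
    print("replicas:", strategy.num_replicas_in_sync, "estimated hosts:", num_hosts)
    dist_dataset = strategy.distribute_datasets_from_function(
        make_dataset_fn(global_batch_size)
    )
    return strategy, dist_dataset
```

Calling `build_distributed_dataset()` only works on a machine attached to a TPU. `shard_for_host` can be tried anywhere, for example on `tf.data.Dataset.range(16)` with `num_hosts=4`.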

The key points in the code are:

- TPUClusterResolver: Detects the TPU configuration and host context.
- num_hosts: Derived from the total replica count; sets how many shards the dataset is split into.
- shard(): Gives each host a non-overlapping slice of the data.
- task_id: Identifies which shard belongs to the current host.
- shuffle() + prefetch(): shuffle() randomizes example order within the host's shard, and prefetch() overlaps input preparation with training steps so the TPU is not left waiting for data.
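To see why the per-host slices never overlap: `shard(num_hosts, task_id)` keeps the elements whose position `i` satisfies `i % num_hosts == task_id`. The same rule in plain Python, as an illustration only (`shard_like` is a hypothetical helper, not a TensorFlow function):

```python
from itertools import islice


def shard_like(items, num_hosts, task_id):
    """Mimic tf.data's shard(): keep every num_hosts-th item, starting at task_id."""
    return list(islice(items, task_id, None, num_hosts))


records = list(range(10))
shards = [shard_like(records, num_hosts=4, task_id=t) for t in range(4)]
print(shards)  # [[0, 4, 8], [1, 5, 9], [2, 6], [3, 7]]
```

Every record lands in exactly one shard, though shard sizes can differ by one when the dataset size is not a multiple of the host count.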

Hence, dataset partitioning across TPU hosts enables scalable, efficient multi-host training with independent data processing per worker.
