To translate sentences with the attention mechanism, you can use a Transformer model: its encoder processes the source sentence, and its decoder generates the sentence in the target language while attending to the encoded input.

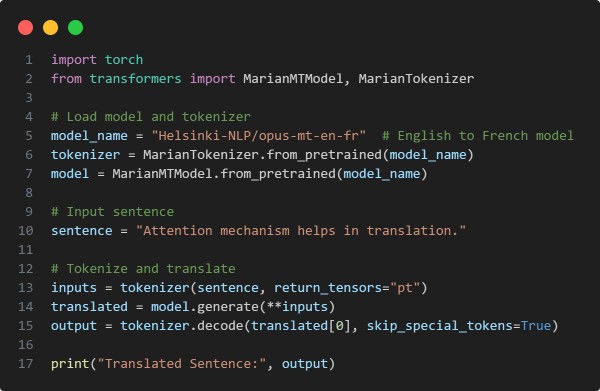

Here is the code snippet you can refer to:

The key points of the code above are:

- Uses a pre-trained Transformer model (MarianMT) leveraging the attention mechanism.

- Tokenizes the input sentence into the token IDs the model expects.

- Generates the translation with the model's decoder, which produces the output tokens one at a time.

- Decodes the generated token IDs back into human-readable text to display the translation.

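In short, the attention mechanism lets a Transformer weigh each word against every other word in the sentence, so that each word's representation reflects its context. Because these weights are computed afresh for every input, the model captures contextual relationships dynamically, which is what makes it effective for translation.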
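To make "contextual relationships" concrete, here is a minimal pure-Python sketch of scaled dot-product attention, the core operation inside a Transformer: each query vector is compared with every key vector, the scores are divided by sqrt(d_k) and normalized with softmax, and the resulting weights mix the value vectors. The function names and toy vectors are illustrative; real models use learned projections, multiple attention heads, and tensor libraries.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key, and value vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        # Attention weights: a probability distribution over the input positions.
        weights = softmax(scores)
        # Output: weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Toy self-attention: three "word" embeddings attend to each other (Q = K = V).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    outputs, weights = scaled_dot_product_attention(x, x, x)
    for row in weights:
        print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and shows how strongly one word attends to each word in the sentence; in a translation model, the decoder uses the same operation to attend to the encoder's outputs.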
