To integrate an attention mechanism into the training process with Sentence Transformers, apply self-attention to the embeddings so the model emphasizes important contextual features before classification.

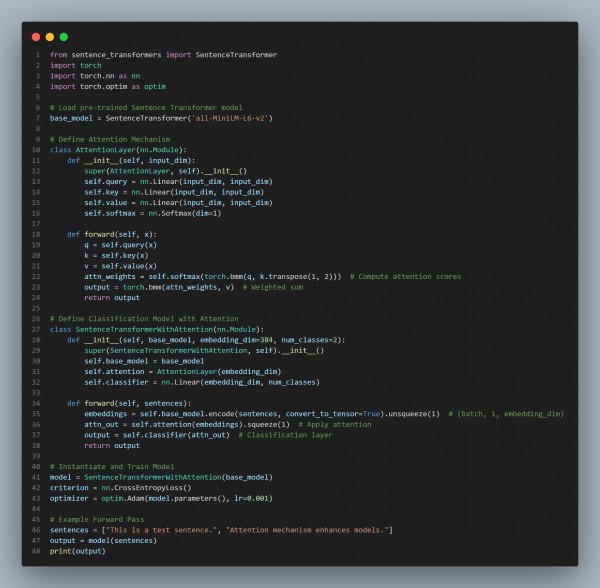

Here is the code snippet you can refer to:

The code above follows these key points:

- Uses Sentence Transformers to generate sentence embeddings.

- Implements a self-attention layer that dynamically reweights the most important features.

- Applies the attention layer during training, before the classification layer.

- Trains with PyTorch, using the Adam optimizer and cross-entropy loss.
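Because the snippet above is a screenshot, here is a minimal text sketch of the same idea. It is not the original code, and it rests on a few assumptions. First, attention runs over token-level embeddings. A single pooled sentence vector would give self-attention only one position to attend to, so every attention weight would be 1. Second, random tensors stand in for real embeddings so the example runs offline. With the `sentence-transformers` library you could get token embeddings from `SentenceTransformer.encode(..., output_value="token_embeddings")` and pad them into a batch with `torch.nn.utils.rnn.pad_sequence`. Third, the encoder stays frozen and only the attention and classifier layers are trained. The class name `SelfAttentionClassifier`, the head count, the embedding size of 384 (the size used by `all-MiniLM-L6-v2`), and the hyperparameters are illustrative choices.

```python
import torch
import torch.nn as nn


class SelfAttentionClassifier(nn.Module):
    """Hypothetical head: self-attention over token embeddings, then a linear classifier."""

    def __init__(self, embed_dim, num_classes, num_heads=4):
        super().__init__()
        # embed_dim must be divisible by num_heads
        self.attention = nn.MultiheadAttention(embed_dim, num_heads, batch_first=True)
        self.norm = nn.LayerNorm(embed_dim)
        self.classifier = nn.Linear(embed_dim, num_classes)

    def forward(self, tokens, padding_mask):
        # tokens: (batch, seq_len, embed_dim); padding_mask: (batch, seq_len), True = padding
        attended, _ = self.attention(tokens, tokens, tokens, key_padding_mask=padding_mask)
        x = self.norm(tokens + attended)  # residual connection around the attention layer
        # Mean-pool over real tokens only, so padded positions never reach the classifier
        keep = (~padding_mask).unsqueeze(-1).float()
        pooled = (x * keep).sum(dim=1) / keep.sum(dim=1).clamp(min=1.0)
        return self.classifier(pooled)


torch.manual_seed(0)
batch, seq_len, dim, num_classes = 8, 12, 384, 3

# Assumption: random stand-ins for padded token embeddings from a frozen encoder
tokens = torch.randn(batch, seq_len, dim)
lengths = torch.randint(3, seq_len + 1, (batch,))  # every sentence has at least 3 real tokens
padding_mask = torch.arange(seq_len).unsqueeze(0) >= lengths.unsqueeze(1)
labels = torch.randint(0, num_classes, (batch,))

model = SelfAttentionClassifier(dim, num_classes)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
loss_fn = nn.CrossEntropyLoss()

losses = []
for epoch in range(20):
    model.train()
    optimizer.zero_grad()
    logits = model(tokens, padding_mask)  # (batch, num_classes)
    loss = loss_fn(logits, labels)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
```

The padding mask keeps padded positions out of both the attention weights and the mean pooling, so each prediction depends only on real tokens. Fine-tuning the encoder jointly would mean computing the embeddings inside the training loop with gradients enabled, which this sketch does not cover.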

Adding an attention mechanism on top of Sentence Transformers embeddings can therefore improve training by letting the model dynamically emphasize the most informative contextual features before classification.
