Modify the attention mechanism so that it computes alignment scores across all previous timesteps, normalizes them with a softmax, and uses the resulting weights to combine the hidden states into a context vector before passing it to the decoder.
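
Written out, one common form of this computation is shown below. It assumes additive (Bahdanau-style) scoring. Here $s_t$ is the decoder state at step $t$, $h_1, \dots, h_T$ are the hidden states from the previous timesteps, and $W_q$, $W_h$, $v$ are trainable parameters. These symbol names are illustrative and not taken from the original snippet.

$$
\begin{aligned}
e_{t,i} &= v^\top \tanh\left(W_q s_t + W_h h_i\right), \qquad i = 1, \dots, T \\
\alpha_{t,i} &= \frac{\exp(e_{t,i})}{\sum_{j=1}^{T} \exp(e_{t,j})} \\
c_t &= \sum_{i=1}^{T} \alpha_{t,i} \, h_i
\end{aligned}
$$

The softmax makes the weights $\alpha_{t,i}$ non-negative and sum to 1, so the context vector $c_t$ is a convex combination of the previous hidden states.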

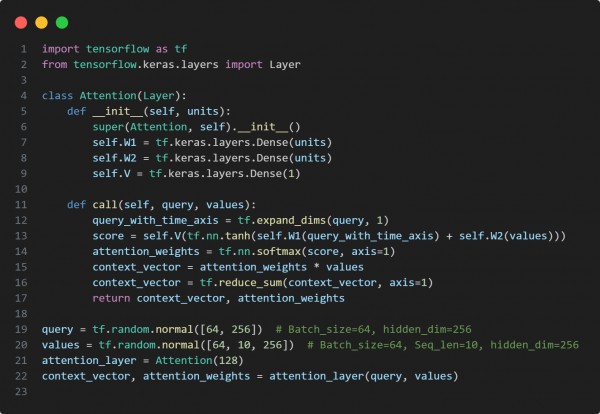

Here is the code snippet you can refer to:

In the code above, the attention layer:

- Computes attention scores using additive scoring with trainable weights.

- Applies softmax to obtain normalized attention weights.

- Computes a weighted sum of the hidden states from all previous timesteps, producing the context vector.
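
The three steps listed above can be sketched in NumPy as follows. This is a minimal illustration, not the snippet from the answer. It assumes additive (Bahdanau-style) scoring, and the function name `additive_attention` and the weight names `W_q`, `W_h`, and `v` are hypothetical. In a real model these weights would be trainable framework parameters rather than random arrays.

```python
import numpy as np

def softmax(x):
    # Subtract the max for numerical stability before exponentiating
    e = np.exp(x - np.max(x))
    return e / e.sum()

def additive_attention(query, hidden_states, W_q, W_h, v):
    """Additive attention over all previous timesteps (hypothetical helper).

    query:         decoder state, shape (d_q,)
    hidden_states: states from previous timesteps, shape (T, d_h)
    W_q:           weights for the query, shape (d_a, d_q)
    W_h:           weights for the hidden states, shape (d_a, d_h)
    v:             scoring vector, shape (d_a,)
    """
    # Alignment scores: e_i = v^T tanh(W_q q + W_h h_i), one per timestep
    projected = np.tanh(hidden_states @ W_h.T + W_q @ query)  # (T, d_a)
    scores = projected @ v                                    # (T,)
    # Normalize the scores into attention weights that sum to 1
    weights = softmax(scores)                                 # (T,)
    # Context vector: weighted sum of the hidden states
    context = weights @ hidden_states                         # (d_h,)
    return context, weights

# Usage with random stand-ins for the trainable weights
rng = np.random.default_rng(0)
T, d_h, d_q, d_a = 5, 8, 6, 4
H = rng.standard_normal((T, d_h))
s = rng.standard_normal(d_q)
W_q = rng.standard_normal((d_a, d_q))
W_h = rng.standard_normal((d_a, d_h))
v = rng.standard_normal(d_a)

context, weights = additive_attention(s, H, W_q, W_h, v)
print(context.shape, weights.shape)  # (8,) (5,)
```

The context vector has the same dimensionality as a single hidden state. There is one attention weight per previous timestep, so the decoder can inspect `weights` to see which timesteps it relied on.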

Hence, modifying the attention mechanism this way lets the decoder aggregate context from every previous timestep, which can improve the quality of abstractive text summarization.