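To measure the semantic consistency of outputs from a text-to-text model, you can compare the generated text to reference texts with metrics like BLEU, ROUGE, or BERTScore. BLEU and ROUGE score surface n-gram overlap, while BERTScore compares contextual embeddings and so captures semantic similarity more directly.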

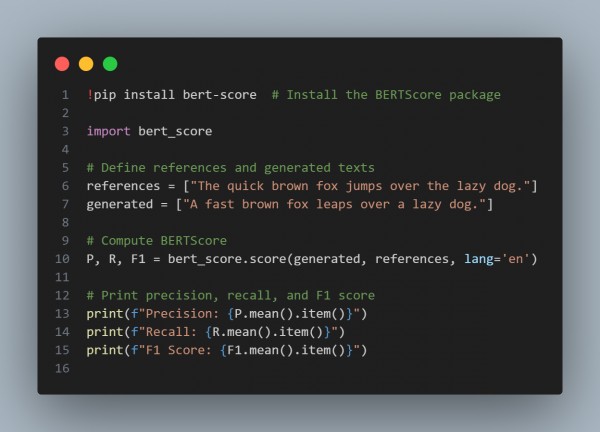

Here is the code snippet you can refer to:

The above code relies on the following key points:

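- BERTScore: Measures semantic similarity by matching the contextual token embeddings of a pre-trained BERT model using cosine similarity, then reporting precision, recall, and F1.

- BLEU/ROUGE: Evaluate n-gram overlap, so they reward shared wording rather than shared meaning and can score a valid paraphrase poorly.

- High F1 Score: A BERTScore F1 close to 1 indicates high semantic consistency between the generated and reference texts.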
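
The matching step behind BERTScore can be illustrated without loading a model. The sketch below is a simplified illustration, not the `bert_score` library itself: `TOY_EMBEDDINGS` is a hypothetical table of hand-made 2-d vectors standing in for BERT's contextual embeddings, and `greedy_match_f1` is an illustrative helper name. Real BERTScore embeds each token in context and can optionally apply IDF weighting, both of which are omitted here.

```python
import math

# Hypothetical 2-d "embeddings" chosen by hand for illustration only.
# A real setup would take contextual vectors from a pre-trained BERT model.
TOY_EMBEDDINGS = {
    "cat": [1.0, 0.0],
    "feline": [0.9, 0.1],
    "sleeps": [0.0, 1.0],
    "naps": [0.1, 0.9],
}


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def greedy_match_f1(cand_vecs, ref_vecs):
    """Return (precision, recall, f1) using greedy best-match cosine similarity."""
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(max(cosine(c, r) for r in ref_vecs) for c in cand_vecs) / len(cand_vecs)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(max(cosine(r, c) for c in cand_vecs) for r in ref_vecs) / len(ref_vecs)
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def embed(sentence):
    """Look up the toy vector for each whitespace-separated token."""
    return [TOY_EMBEDDINGS[token] for token in sentence.lower().split()]


# A paraphrase with no shared words still scores a high F1,
# because its toy vectors point in nearly the same directions.
p, r, f1_paraphrase = greedy_match_f1(embed("feline naps"), embed("cat sleeps"))
_, _, f1_identical = greedy_match_f1(embed("cat sleeps"), embed("cat sleeps"))
print(f"paraphrase F1: {f1_paraphrase:.3f}, identical F1: {f1_identical:.3f}")
```

Because the score is built from nearest-neighbour similarities rather than exact word matches, a paraphrase can score nearly as high as an exact copy, which is what makes the F1 value a useful signal of semantic consistency.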

Hence, these metrics help assess whether the generated text accurately conveys the intended meaning.
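
To see why n-gram metrics can understate semantic consistency, here is a minimal sketch of an n-gram overlap F1 in the spirit of ROUGE-N. It is an assumption-laden simplification: `ngram_overlap_f1` is an illustrative helper, tokenization is a plain whitespace split, and it is not the full BLEU or ROUGE definition (no brevity penalty, no stemming, no combination of several n-gram orders).

```python
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def ngram_overlap_f1(candidate, reference, n=1):
    """F1 over clipped n-gram matches between candidate and reference."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    # Counter intersection keeps the minimum count per n-gram (clipped matches).
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the cat sleeps"
exact_score = ngram_overlap_f1("the cat sleeps", reference)
partial_score = ngram_overlap_f1("the cat naps", reference)
paraphrase_score = ngram_overlap_f1("a feline naps", reference)
print(exact_score, partial_score, paraphrase_score)
```

The paraphrase "a feline naps" shares no unigrams with the reference, so its overlap score drops to zero even though the meaning is close. This is the gap that an embedding-based metric such as BERTScore is meant to cover.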
