An attention mechanism generates a context vector at each decoder step in an LSTM-based sequence-to-sequence model. It computes alignment scores between the decoder's current hidden state and every encoder output, normalizes those scores with a softmax into attention weights, and then takes the weighted sum of the encoder outputs, so the decoder focuses on the parts of the input most relevant to the current step.
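The three steps for a single decoder timestep (score, softmax, weighted sum) can be sketched as follows. This is a minimal sketch assuming plain dot-product (Luong-style "dot") scoring and Python lists in place of tensors; the function names `softmax` and `attend` are hypothetical and not taken from the original code.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    # Subtract the max score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(decoder_state: Vector, encoder_outputs: List[Vector]) -> Tuple[Vector, Vector]:
    # 1. Alignment scores: dot product of the decoder state with each encoder output.
    scores = [sum(d * h for d, h in zip(decoder_state, h_i)) for h_i in encoder_outputs]
    # 2. Attention weights: softmax turns the scores into a distribution summing to 1.
    weights = softmax(scores)
    # 3. Context vector: weighted sum of the encoder outputs.
    dim = len(encoder_outputs[0])
    context = [sum(w * h_i[k] for w, h_i in zip(weights, encoder_outputs)) for k in range(dim)]
    return context, weights


context, weights = attend([2.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(weights)
print(context)
```

Here the first and third encoder outputs align equally well with the decoder state, so they receive the same (larger) weight, while the second output, which is orthogonal to the decoder state, receives a much smaller weight.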

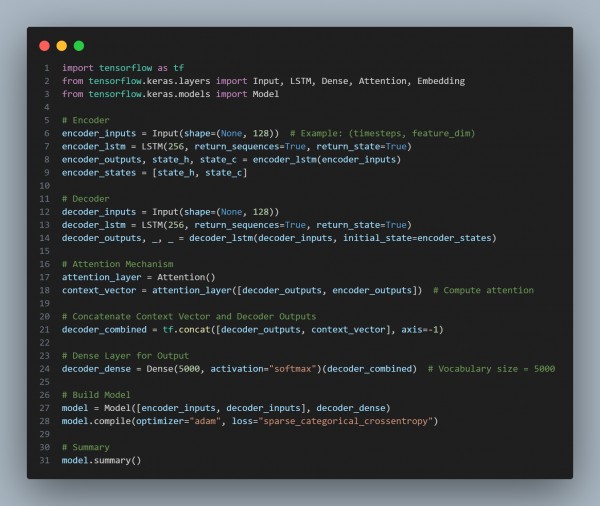

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Uses an LSTM-based Encoder to generate a sequence of hidden states.

- Uses an LSTM-based Decoder initialized with encoder states.

- Applies an Attention Mechanism at each decoder step for dynamic focus.

- Computes Context Vectors by weighting encoder outputs per decoder timestep.

- Concatenates Attention and Decoder Outputs so that each prediction draws on both the decoder state and the attended input context.
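The attention and concatenation stages from the list above can be sketched across all decoder timesteps. This sketch assumes the encoder and decoder LSTMs have already produced one output vector per timestep (as a Keras `LSTM(..., return_sequences=True)` layer would); the LSTMs themselves are omitted, scoring is assumed to be dot-product, and the name `attention_concat` is hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    # Numerically stable softmax over a list of scores.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_concat(decoder_outputs: List[Vector], encoder_outputs: List[Vector]) -> List[Vector]:
    dim = len(encoder_outputs[0])
    combined = []
    for s_t in decoder_outputs:  # one decoder timestep at a time
        # Score this decoder output against every encoder output (dot product).
        scores = [sum(a * b for a, b in zip(s_t, h)) for h in encoder_outputs]
        weights = softmax(scores)
        # Context vector for this timestep: weighted sum of encoder outputs.
        context = [sum(w * h[k] for w, h in zip(weights, encoder_outputs)) for k in range(dim)]
        # Concatenate [decoder output; context vector] for the next layer.
        combined.append(s_t + context)
    return combined


encoder_outputs = [[1.0, 0.0], [0.0, 1.0]]
decoder_outputs = [[10.0, 0.0], [0.0, 10.0]]
for row in attention_concat(decoder_outputs, encoder_outputs):
    print([round(x, 4) for x in row])
```

Each decoder step gets its own context vector: the first step attends almost entirely to the first encoder output and the second step to the second. In a full model, the concatenated vectors would typically feed a dense softmax layer over the target vocabulary.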

Hence, incorporating an attention mechanism in an LSTM sequence-to-sequence model enables the decoder to dynamically extract relevant information, improving sequence generation accuracy.
