The source hidden states in an attention mechanism are the encoder's output representations, one per input token. The decoder attends over them to focus on the relevant parts of the input at each step of sequence generation.

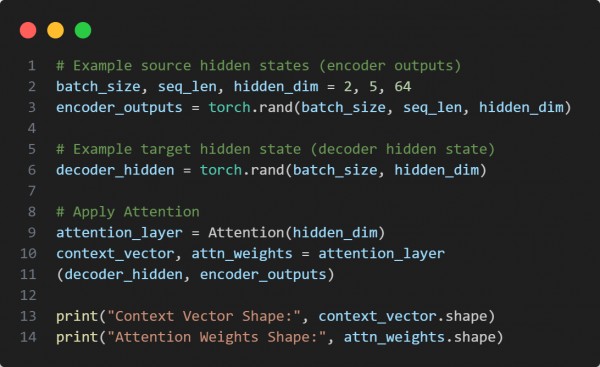

Here is the code snippet you can refer to:
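As a minimal sketch, assuming NumPy and Luong-style "general" scoring (the class name `Attention`, the helper `softmax`, the randomly initialised weight matrix, and the toy dimensions are illustrative assumptions, not a definitive implementation):

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over the last axis."""
    shifted = x - np.max(x, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)


class Attention:
    """Luong-style "general" attention: score(h_t, h_s) = h_t^T W h_s."""

    def __init__(self, hidden_dim, seed=0):
        rng = np.random.default_rng(seed)
        # Randomly initialised for illustration; in a real model W is learned.
        self.W = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))

    def __call__(self, decoder_hidden, encoder_outputs):
        # decoder_hidden:  (hidden_dim,)          current decoder hidden state
        # encoder_outputs: (src_len, hidden_dim)  source hidden states
        # Alignment score of the decoder state against every source state.
        scores = encoder_outputs @ (self.W.T @ decoder_hidden)  # (src_len,)
        # Normalise the scores into attention weights that sum to 1.
        weights = softmax(scores)                               # (src_len,)
        # Context vector: attention-weighted sum of the source hidden states.
        context = weights @ encoder_outputs                     # (hidden_dim,)
        return context, weights


# Toy inputs (assumed sizes): 5 source tokens, hidden size 8.
rng = np.random.default_rng(1)
encoder_outputs = rng.normal(size=(5, 8))
decoder_hidden = rng.normal(size=8)

attention = Attention(hidden_dim=8)
context, weights = attention(decoder_hidden, encoder_outputs)
print(weights.shape, context.shape)  # (5,) (8,)
```

Here `encoder_outputs` holds the source hidden states. Each entry of `weights` says how much the decoder attends to the corresponding source position at this step.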

The code above does the following:

- Defines an Attention layer that computes the alignment between the decoder's hidden state and the encoder outputs.

- Uses the source hidden states (encoder outputs) to calculate the attention scores.

- Applies a softmax function to the scores to produce attention weights that sum to 1.

- Computes a context vector as the attention-weighted sum of the source hidden states, which lets the decoder focus on the relevant parts of the source.

Hence, the source hidden states (encoder outputs) play a crucial role in the attention mechanism: they supply the per-token context that the decoder draws on at every step, which improves sequence generation.
