The outputs and hidden states of an LSTM can serve as the queries, keys, or values of an attention mechanism, which lets the model weigh earlier time steps when modeling a sequence.

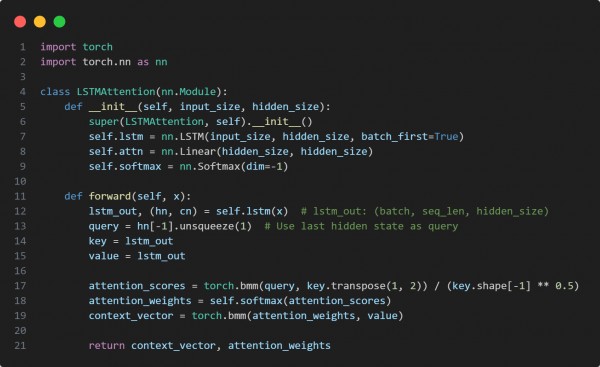

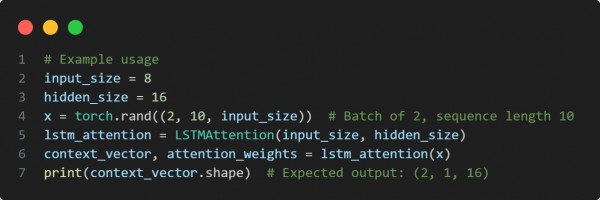

In short, the code snippets shown above:

- Uses an LSTM to process input sequences and extract hidden states.

- Takes the last hidden state as the query for attention.

- Uses LSTM outputs as keys and values for attention computation.

- Applies scaled dot-product attention with softmax normalization.

- Produces a context vector capturing important sequential information.
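
The steps listed above can be sketched in PyTorch roughly as follows. This is a minimal sketch rather than the exact snippet from the images: the class name `LSTMWithAttention`, the dimensions, and the choice to use the top layer's final hidden state as the query are all assumptions made for illustration.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


class LSTMWithAttention(nn.Module):
    """Hypothetical module: an LSTM followed by scaled dot-product attention."""

    def __init__(self, input_dim, hidden_dim):
        super().__init__()
        self.lstm = nn.LSTM(input_dim, hidden_dim, batch_first=True)

    def forward(self, x):
        # outputs: (batch, seq_len, hidden_dim)
        # h_n:     (num_layers, batch, hidden_dim)
        outputs, (h_n, _) = self.lstm(x)

        # Query: last hidden state of the top layer, shaped (batch, 1, hidden_dim)
        query = h_n[-1].unsqueeze(1)

        # Keys and values: the LSTM outputs at every time step
        keys, values = outputs, outputs

        # Scaled dot-product scores, shaped (batch, 1, seq_len)
        scores = torch.bmm(query, keys.transpose(1, 2)) / math.sqrt(keys.size(-1))

        # Softmax over time steps turns scores into attention weights
        weights = F.softmax(scores, dim=-1)

        # Context vector: attention-weighted sum of the values, (batch, hidden_dim)
        context = torch.bmm(weights, values).squeeze(1)
        return context, weights.squeeze(1)


torch.manual_seed(0)
model = LSTMWithAttention(input_dim=8, hidden_dim=16)  # assumed sizes
x = torch.randn(4, 10, 8)  # (batch, seq_len, input_dim)
context, weights = model(x)
print(context.shape, weights.shape)
```

With these assumed sizes, `context` has one 16-dimensional vector per sequence in the batch, and each row of `weights` sums to 1 across the 10 time steps. The context vector can then be passed to a classifier or decoder layer, depending on the task.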

Hence, combining LSTM outputs with attention lets the model focus on the most relevant earlier time steps instead of relying only on the final hidden state, which improves sequence understanding and downstream predictions.
