An attention mechanism in a neural network can generate a heatmap that highlights the regions of an input image the model relies on most. This makes the model's predictions easier to interpret and lets it focus on the relevant parts of the image.
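One common way to turn CNN features into such a heatmap is sketched below. This is an assumed formulation, not necessarily the one used in the snippet referred to here. Each spatial position of a feature map $F \in \mathbb{R}^{C \times H \times W}$ is scored with a learned vector $w$ and bias $b$. The scores are softmax-normalized over all $H \times W$ positions, and the resulting weights serve both as the heatmap and as pooling weights:

$$
s_{ij} = w^\top F_{:,ij} + b, \qquad
\alpha_{ij} = \frac{\exp(s_{ij})}{\sum_{k=1}^{H}\sum_{l=1}^{W} \exp(s_{kl})}, \qquad
z_c = \sum_{i=1}^{H}\sum_{j=1}^{W} \alpha_{ij}\, F_{c,ij}
$$

Because the weights $\alpha_{ij}$ are non-negative and sum to 1, they can be upsampled to the input resolution and drawn directly as a heatmap. The vector $z \in \mathbb{R}^{C}$ then acts as a global feature vector for a classifier.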

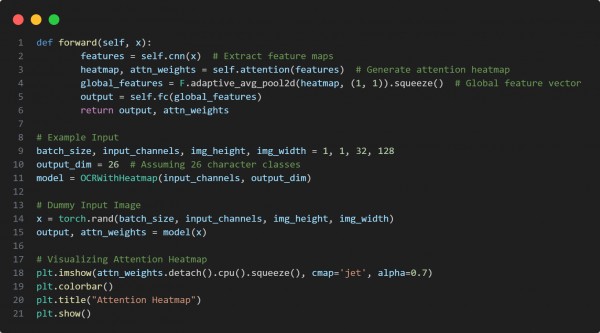

Here is the code snippet you can refer to:

The code above works as follows:

- Applies attention over CNN feature maps to produce an interpretable heatmap.

- Normalizes the attention mask with softmax so that important regions receive higher weights.

- Extracts global features through pooling for use in classification.

- Visualizes the heatmap with Matplotlib to show where the model attends in the input.
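The original snippet is not reproduced here. The following is therefore only a minimal, dependency-free sketch of the same steps, not the author's code. It assumes the feature map is given as nested Python lists (one H×W grid per channel) with a hypothetical learned weight per channel. In a real model these values would come from a trained CNN layer in a framework such as PyTorch or TensorFlow. The names `spatial_attention`, `attention_pool`, and `show_heatmap` are illustrative only.

```python
import math
from typing import List, Optional, Sequence

Grid = List[List[float]]  # one H x W channel of a CNN feature map (assumed layout)


def spatial_attention(features: Sequence[Grid], weights: Sequence[float],
                      bias: float = 0.0) -> Grid:
    """Score every spatial position with a 1x1-conv-style weighted sum over
    channels, then softmax-normalize over all H*W positions (mask sums to 1)."""
    h, w = len(features[0]), len(features[0][0])
    scores = [[bias + sum(wc * ch[i][j] for wc, ch in zip(weights, features))
               for j in range(w)] for i in range(h)]
    peak = max(max(row) for row in scores)  # subtract the max for numerical stability
    exps = [[math.exp(s - peak) for s in row] for row in scores]
    total = sum(sum(row) for row in exps)
    return [[e / total for e in row] for row in exps]


def attention_pool(features: Sequence[Grid], mask: Grid) -> List[float]:
    """Attention-weighted global pooling: one value per channel, usable as a
    global feature vector for a classifier."""
    return [sum(m * v for m_row, v_row in zip(mask, ch)
                for m, v in zip(m_row, v_row))
            for ch in features]


def show_heatmap(mask: Grid, image: Optional[Sequence] = None) -> None:
    """Draw the mask with Matplotlib, stretched over the image if one is given."""
    import matplotlib.pyplot as plt  # imported here so the rest runs without Matplotlib

    if image is not None:
        h, w = len(image), len(image[0])
        plt.imshow(image, cmap="gray")
        # Stretch the coarse mask over the image's full pixel extent.
        plt.imshow(mask, cmap="jet", alpha=0.5, interpolation="bilinear",
                   extent=(-0.5, w - 0.5, h - 0.5, -0.5))
    else:
        plt.imshow(mask, cmap="jet")
    plt.colorbar(label="attention weight")
    plt.axis("off")
    plt.show()


# Toy 2-channel, 2x2 feature map (hypothetical values).
features = [[[0.0, 0.0], [0.0, 4.0]],  # channel 0: strong response at bottom-right
            [[1.0, 1.0], [1.0, 1.0]]]  # channel 1: uniform response
mask = spatial_attention(features, weights=[1.0, 0.0])
pooled = attention_pool(features, mask)  # mask[1][1] is roughly 0.948
```

Calling `show_heatmap(mask)` (which requires Matplotlib) displays the 2×2 mask. Passing an image as well overlays the smoothly upsampled mask on it. The softmax runs over spatial positions rather than channels because the goal is a single "where to look" map for the whole image.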

In short, the attention mechanism generates heatmaps by highlighting the most relevant image regions. This improves interpretability and can help with tasks such as Optical Character Recognition (OCR).
