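The Attentive Attention mechanism refines an answer representation in a Keras-based model by scoring each position of the input with a small attention network and then weighting the input by those scores, so the most contextually relevant positions dominate the result.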

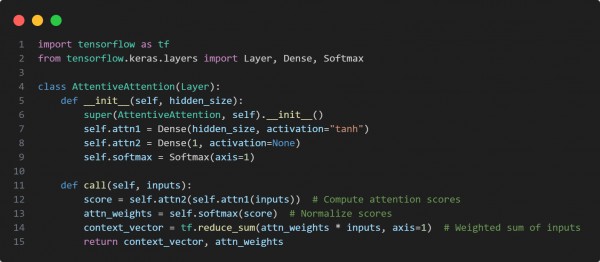

Here is the code snippet you can refer to:

The code snippet above uses the following techniques:

- Implements AttentiveAttention as a custom Keras Layer.

- Uses a two-layer attention network (Dense layers) for score computation.

- Applies Softmax for attention weight normalization.

- Aggregates weighted input representations to form the context vector.

- Provides a refined answer representation by emphasizing important features.
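The steps listed above can be sketched roughly as follows. This is a minimal illustration and not necessarily the exact code from the original snippet: the `units` parameter, the `tanh` activation in the first Dense layer, the choice to return the attention weights alongside the context vector, and the input shape `(batch, timesteps, features)` are all assumptions made for this example.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers


class AttentiveAttention(layers.Layer):
    """Attention pooling over the time axis (illustrative sketch)."""

    def __init__(self, units=64, **kwargs):
        super().__init__(**kwargs)
        self.units = units  # assumed hyperparameter: width of the scoring network
        # Two-layer scoring network: a hidden projection, then one scalar score per timestep.
        self.hidden = layers.Dense(units, activation="tanh")
        self.score = layers.Dense(1)

    def call(self, inputs):
        # inputs: (batch, timesteps, features)
        scores = self.score(self.hidden(inputs))            # (batch, timesteps, 1)
        weights = tf.nn.softmax(scores, axis=1)             # normalize across timesteps
        context = tf.reduce_sum(weights * inputs, axis=1)   # weighted sum -> (batch, features)
        return context, weights

    def get_config(self):
        # Lets the layer be re-created from its config when a model is saved.
        config = super().get_config()
        config.update({"units": self.units})
        return config


# Eager usage on random data: 2 sequences, 5 timesteps, 8 features each.
x = tf.random.normal((2, 5, 8))
context, weights = AttentiveAttention(units=16)(x)
```

Here `context` has one vector per input sequence, and `weights` holds one attention weight per timestep that sums to 1 along the time axis, which makes it easy to inspect which positions the layer emphasized.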

Hence, the Attentive Attention mechanism in Keras refines answer representations by learning which parts of the input matter most and weighting them accordingly when building the context vector.
