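Adding an attention mechanism to an LSTM model in Keras lets the network assign a weight to each time step of the LSTM output instead of relying only on the final hidden state. This can improve performance on longer sequences, and the learned weights make it easier to see which time steps influenced a prediction.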

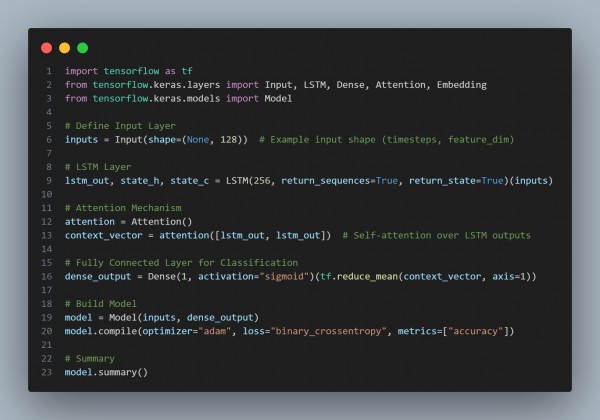

Here is the code snippet you can refer to:

The code relies on the following key points:

- Uses an LSTM layer to process the sequential data.

- Applies a self-attention mechanism to emphasize the key time steps.

- Computes context vectors by dynamically weighting the LSTM outputs.

- Aggregates the important features by averaging the attention output over the time steps.

- Uses a dense layer with a sigmoid activation for binary classification.
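The key points above can be sketched as a small Keras model. This is a minimal sketch rather than the original snippet: the input shape (`TIMESTEPS`, `FEATURES`), the number of LSTM units, and the random input batch are assumptions chosen only for illustration. It uses the built-in `Attention` layer with the LSTM output as both query and value, which is one common way to get self-attention in Keras.

```python
import numpy as np
from tensorflow.keras import layers, Model

# Hypothetical input dimensions, chosen only for illustration.
TIMESTEPS, FEATURES = 10, 8

inputs = layers.Input(shape=(TIMESTEPS, FEATURES))

# return_sequences=True keeps one output vector per time step,
# which the attention layer needs in order to weight the steps.
lstm_out = layers.LSTM(32, return_sequences=True)(inputs)

# Self-attention: the LSTM output serves as both query and value.
context = layers.Attention()([lstm_out, lstm_out])

# Average the context vectors over the time axis.
pooled = layers.GlobalAveragePooling1D()(context)

# Single sigmoid unit for binary classification.
outputs = layers.Dense(1, activation="sigmoid")(pooled)

model = Model(inputs, outputs)
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Forward pass on a random batch (assumed data) to check the shapes.
x = np.random.rand(4, TIMESTEPS, FEATURES).astype("float32")
preds = model.predict(x, verbose=0)
```

Here `preds` holds one probability per input sequence. To train the model, call `model.fit` with sequences of the same shape and binary labels.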

Hence, integrating an attention mechanism into an LSTM model in Keras improves sequence learning by allowing the network to focus on the most critical time steps.
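To make the weighting concrete, here is a NumPy sketch of unscaled dot-product self-attention over a sequence of hidden states, followed by the mean over time steps. The function name `self_attention` and the random hidden states are hypothetical and used only for illustration. The arithmetic is the standard dot-product formulation and is not taken from the original snippet.

```python
import numpy as np

def self_attention(h):
    """Dot-product self-attention over hidden states h of shape (timesteps, units)."""
    scores = h @ h.T                                      # similarity between every pair of time steps
    scores = scores - scores.max(axis=-1, keepdims=True)  # subtract row max for a numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)        # each row now sums to 1
    context = weights @ h                                 # weighted sum of hidden states per time step
    return context, weights

# Assumed example: 5 time steps with 4 hidden units each.
rng = np.random.default_rng(0)
h = rng.normal(size=(5, 4))

context, weights = self_attention(h)
summary = context.mean(axis=0)  # aggregate the context vectors over time
```

Each row of `weights` shows how strongly one time step attends to every other step, and this matrix is what you would inspect to interpret the model.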
