An attention mechanism in a spelling correction model improves accuracy by letting the decoder dynamically focus on the most relevant input characters at each output step, which gives better alignment between the misspelled word and its correction.
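
To make "dynamically focusing" concrete, here is a minimal plain-Python sketch of dot-product (Luong-style) attention for one decoder step. Each encoder state (one per input character) is scored against the current decoder state, the scores are softmax-normalized into weights, and the weights produce a context vector. Dot-product scoring is only one common choice and is an assumption here; the function names `softmax` and `dot_attention` and the toy vectors are hypothetical, not taken from the original snippet.

```python
import math


def softmax(scores):
    """Turn raw alignment scores into weights that sum to 1."""
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_attention(decoder_state, encoder_states):
    """Dot-product attention for a single decoder step (hypothetical helper).

    decoder_state: current decoder hidden state (list of d floats).
    encoder_states: one hidden state (list of d floats) per input character.
    Returns (weights, context).
    """
    # Alignment score: how well each input character's state matches the decoder state.
    scores = [sum(q * k for q, k in zip(decoder_state, h)) for h in encoder_states]
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the encoder states.
    dim = len(decoder_state)
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context


# Toy example with hypothetical 2-d states for a 3-character input such as "teh".
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
weights, context = dot_attention(decoder_state, encoder_states)
print([round(w, 3) for w in weights])  # → [0.468, 0.063, 0.468]
```

The first and third input characters match the decoder state best, so they receive most of the weight and dominate the context vector; in a trained model these states come from the LSTM rather than being hand-picked.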

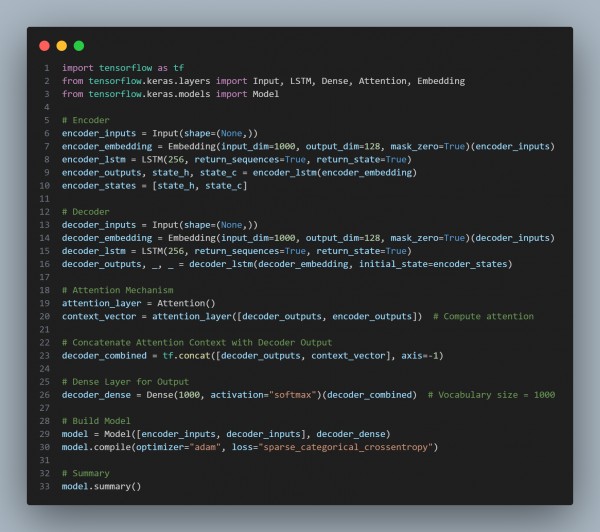

Here is the code snippet you can refer to:

The above code uses the following key components:

- Uses an Encoder-Decoder LSTM to map a misspelled word (a character sequence) to its corrected form.

- Applies an Attention Mechanism so the decoder can dynamically focus on the key input characters.

- Computes Context Vectors as attention-weighted sums of the encoder outputs at each decoder step.

- Concatenates the attention context vectors with the decoder outputs to improve alignment during correction.

- Uses a Dense Softmax Layer to predict the corrected word, one character per decoder step.
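
The output side of the list above (context vector, concatenation, Dense softmax) can be sketched for a single decoder step in plain Python. This is a minimal illustration under stated assumptions, not the code from the snippet: the function name `predict_char`, the three-character vocabulary, and the Dense weights are hypothetical, and the context vector is assumed to have already been computed by the attention step.

```python
import math


def softmax(logits):
    # Subtract the max logit for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_char(context, decoder_output, weights, biases, vocab):
    """One output step (hypothetical helper): concat [context; decoder_output] -> Dense -> softmax.

    weights: one row per vocabulary character, each of length
             len(context) + len(decoder_output).
    """
    # Concatenate the attention context vector with the decoder output.
    combined = context + decoder_output
    # Dense layer: one logit per character in the vocabulary.
    logits = [sum(w * x for w, x in zip(row, combined)) + b
              for row, b in zip(weights, biases)]
    probs = softmax(logits)
    # Greedy choice: the most probable character for this step.
    best = max(range(len(vocab)), key=lambda i: probs[i])
    return vocab[best], probs


# Hypothetical toy values: a 3-character vocabulary and hand-picked Dense weights.
vocab = ["e", "h", "t"]
context = [1.0, 0.0]
decoder_output = [0.0, 1.0]
weights = [[2.0, 0.0, 0.0, 1.0],
           [0.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 0.0]]
biases = [0.0, 0.0, 0.0]
char, probs = predict_char(context, decoder_output, weights, biases, vocab)
print(char)  # → e
```

In a trained model the Dense weights are learned, and during greedy decoding the predicted character is typically fed back as the next decoder input until an end-of-word token is produced.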

Hence, attention in a spelling correction model improves alignment between input and output sequences, enhancing correction accuracy by emphasizing key parts of the misspelled word.
