To improve convergence on multilingual datasets, combine language-specific adapters, dynamic loss weighting, cosine learning rate scheduling, gradient accumulation and smoothing, and balanced data sampling.

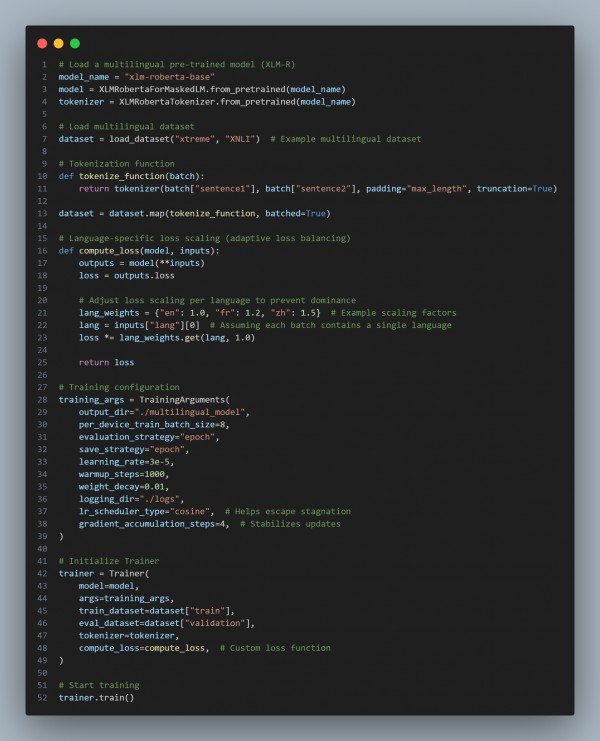

Here is the code snippet you can refer to:
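A minimal PyTorch sketch of how the techniques named above could fit together, assuming a toy classifier trained on synthetic data. The names `Adapter` and `MultilingualClassifier`, the language list, the example counts, and all hyperparameters are illustrative assumptions. Gradient clipping stands in for gradient smoothing here, and the loss weights come from a moving average of each language's loss, which is one possible reading of dynamic loss weighting.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset, WeightedRandomSampler

torch.manual_seed(0)

# Hypothetical toy setup: three languages with imbalanced example counts.
LANGS = ["en", "hi", "sw"]
COUNTS = [800, 150, 50]
DIM, NUM_CLASSES, ACCUM_STEPS, EPOCHS = 32, 4, 4, 2


class Adapter(nn.Module):
    """Bottleneck adapter: down-project, ReLU, up-project, residual add."""

    def __init__(self, dim, bottleneck=8):
        super().__init__()
        self.down = nn.Linear(dim, bottleneck)
        self.up = nn.Linear(bottleneck, dim)

    def forward(self, x):
        return x + self.up(torch.relu(self.down(x)))


class MultilingualClassifier(nn.Module):
    """Shared encoder layer followed by one adapter per language."""

    def __init__(self):
        super().__init__()
        self.encoder = nn.TransformerEncoderLayer(
            d_model=DIM, nhead=4, dim_feedforward=64, batch_first=True
        )
        self.adapters = nn.ModuleList([Adapter(DIM) for _ in LANGS])
        self.head = nn.Linear(DIM, NUM_CLASSES)

    def forward(self, x, lang_ids):
        h = self.encoder(x).mean(dim=1)  # mean-pool tokens -> (batch, DIM)
        out = torch.zeros_like(h)
        for i, adapter in enumerate(self.adapters):
            mask = lang_ids == i
            if mask.any():
                out[mask] = adapter(h[mask])  # route rows to their language's adapter
        return self.head(out)


# Synthetic data: random features stand in for token embeddings.
lang_ids = torch.cat([torch.full((n,), i, dtype=torch.long) for i, n in enumerate(COUNTS)])
features = torch.randn(len(lang_ids), 6, DIM)
labels = torch.randint(0, NUM_CLASSES, (len(lang_ids),))

# Balanced sampling: draw each example with probability inversely
# proportional to the size of its language.
sample_weights = (1.0 / torch.tensor(COUNTS, dtype=torch.float))[lang_ids]
sampler = WeightedRandomSampler(sample_weights, num_samples=len(lang_ids), replacement=True)
loader = DataLoader(TensorDataset(features, labels, lang_ids), batch_size=32, sampler=sampler)

model = MultilingualClassifier()
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)
total_updates = max(1, EPOCHS * len(loader) // ACCUM_STEPS)
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=total_updates)
loss_fn = nn.CrossEntropyLoss(reduction="none")
running_loss = torch.ones(len(LANGS))  # moving average of each language's loss

model.train()
step = 0
for epoch in range(EPOCHS):
    for x, y, lang in loader:
        per_example = loss_fn(model(x, lang), y)

        # Dynamic loss weighting: languages with a higher running loss get
        # a larger weight; weights are normalized to have mean 1.
        with torch.no_grad():
            for i in range(len(LANGS)):
                mask = lang == i
                if mask.any():
                    running_loss[i] = 0.9 * running_loss[i] + 0.1 * per_example[mask].mean()
            lang_weights = running_loss / running_loss.mean()
        loss = (lang_weights[lang] * per_example).mean()

        # Gradient accumulation: average gradients over ACCUM_STEPS batches.
        (loss / ACCUM_STEPS).backward()
        step += 1
        if step % ACCUM_STEPS == 0:
            # Clipping caps the update size (used here as the smoothing step).
            torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)
            optimizer.step()
            scheduler.step()  # cosine decay, once per optimizer update
            optimizer.zero_grad()
```

In a real model the adapters would typically sit inside each transformer block of a pretrained encoder rather than after a single layer; the single-layer placement here only keeps the sketch short.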

The above code uses the following key approaches:

- Language-Specific Adapters:
  - Add a small set of language-specific layers for each language, which limits interference between languages.
- Dynamic Loss Weighting:
  - Scales each language's loss during training so that underrepresented languages are not drowned out.
- Cosine Learning Rate Scheduling:
  - Decays the learning rate smoothly, which helps the model avoid plateaus.
- Gradient Accumulation & Smoothing:
  - Reduces high-variance updates caused by small batches of low-resource languages.
- Balanced Data Sampling:
  - Ensures equal representation of all languages during training.
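Strictly equal representation repeats the smallest languages many times per epoch. A softer variant, assumed here for illustration, raises each language's share of the data to a power `alpha` before renormalizing: `alpha=1` keeps the natural proportions and `alpha=0` samples all languages equally. The function name, the counts, and the default `alpha` are hypothetical.

```python
import random


def sampling_probs(counts, alpha=0.3):
    """Return per-language sampling probabilities p_i proportional to (n_i / N) ** alpha."""
    total = sum(counts.values())
    scaled = {lang: (n / total) ** alpha for lang, n in counts.items()}
    norm = sum(scaled.values())
    return {lang: s / norm for lang, s in scaled.items()}


# Hypothetical example counts per language.
counts = {"en": 800, "hi": 150, "sw": 50}

natural = sampling_probs(counts, alpha=1.0)   # same as the raw data proportions
smoothed = sampling_probs(counts, alpha=0.3)  # shifts probability toward small languages
uniform = sampling_probs(counts, alpha=0.0)   # every language equally likely

# Pick the language of each of the next 8 batches using the smoothed probabilities.
rng = random.Random(0)
langs = list(smoothed)
batch_langs = rng.choices(langs, weights=[smoothed[l] for l in langs], k=8)
```

Lower values of `alpha` trade more repetition of low-resource data for more even coverage, so it is usually treated as a tunable hyperparameter.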

Hence, by applying language adapters, loss balancing, cosine LR scheduling, gradient smoothing, and data rebalancing, transformer models can converge better on multilingual data and are less likely to stagnate during training.
