High variance in the generator loss during GAN training for text generation is often caused by mode collapse, unstable adversarial training, or an imbalance between generator and discriminator updates.

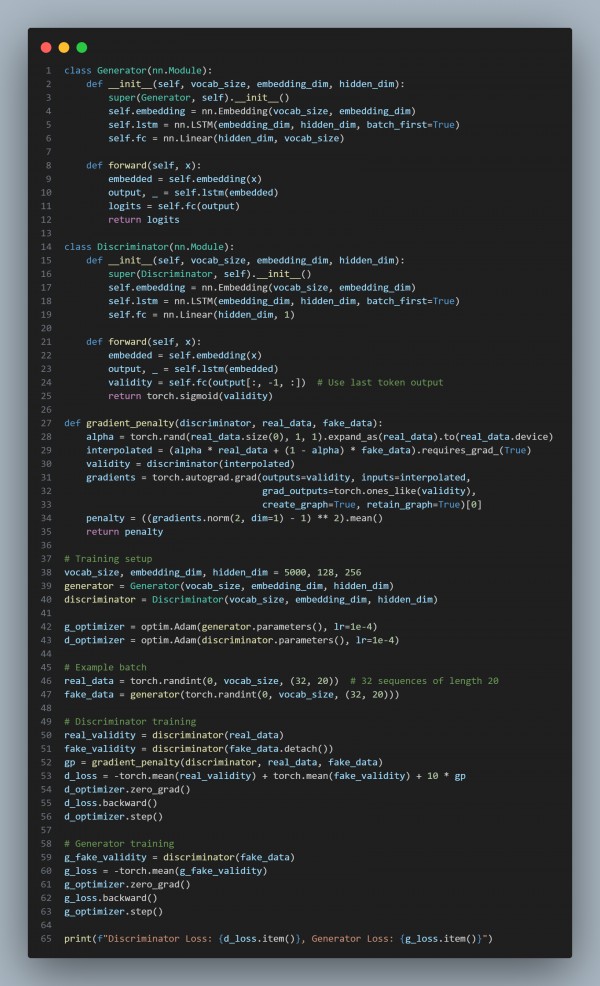

Here is the code snippet you can refer to:

The snippet above relies on the following key techniques:

- LSTM-Based Generator and Discriminator: Uses recurrent layers to process sequential text data.

- Gradient Penalty: Implements Wasserstein GAN with gradient penalty (WGAN-GP) to stabilize training.

- Discriminator Training: Scores both real and generated text sequences and adds a gradient penalty term computed on random interpolations between them.

- Generator Training: Updates the generator to minimize the negative critic score on generated sequences, i.e., to make its outputs look more real to the critic.

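- Helps Reduce Mode Collapse: The gradient penalty softly enforces a 1-Lipschitz constraint on the critic, which helps prevent it from overfitting and gives the generator a smoother, more informative training signal.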
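For reference, these are the WGAN-GP objectives from Gulrajani et al. (2017), "Improved Training of Wasserstein GANs". Here $D$ is the critic, $x$ a real sequence drawn from $\mathbb{P}_r$, $\tilde{x}$ a generated sequence drawn from $\mathbb{P}_g$, $\hat{x}$ a random interpolation between them, and $\lambda$ the penalty weight (10 in the paper). For text, $x$ and $\tilde{x}$ are assumed to be one-hot or softmax representations so that interpolation is well defined.

$$
\begin{aligned}
\mathcal{L}_D &= \mathbb{E}_{\tilde{x}\sim\mathbb{P}_g}\big[D(\tilde{x})\big] - \mathbb{E}_{x\sim\mathbb{P}_r}\big[D(x)\big] + \lambda\,\mathbb{E}_{\hat{x}}\Big[\big(\lVert\nabla_{\hat{x}} D(\hat{x})\rVert_2 - 1\big)^2\Big] \\
\mathcal{L}_G &= -\,\mathbb{E}_{\tilde{x}\sim\mathbb{P}_g}\big[D(\tilde{x})\big] \\
\hat{x} &= \alpha x + (1-\alpha)\,\tilde{x}, \qquad \alpha \sim U[0,1]
\end{aligned}
$$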

Hence, by applying a gradient penalty and balancing generator and critic updates, we reduce instability in the generator loss, which typically leads to smoother adversarial training and better text generation quality.
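To check whether these changes actually reduce the spread of the generator loss, one option is to track its rolling standard deviation during training. The `LossVarianceMonitor` class below is a hypothetical helper using only the Python standard library, and its default window size is an arbitrary assumption. Feed it the generator loss after each generator update and watch whether the reported value shrinks.

```python
from collections import deque
from statistics import pstdev


class LossVarianceMonitor:
    """Hypothetical helper: rolling population std-dev of a loss over the last `window` steps."""

    def __init__(self, window=100):
        # deque with maxlen drops the oldest value once the window is full.
        self.values = deque(maxlen=window)

    def update(self, loss):
        """Record one loss value and return the std-dev of the current window."""
        self.values.append(float(loss))
        # pstdev of a single value is 0.0, so this is safe from the first call.
        return pstdev(self.values)


if __name__ == "__main__":
    monitor = LossVarianceMonitor(window=4)
    for loss in [0.0, 2.0, 0.0, 2.0]:
        spread = monitor.update(loss)
    print(spread)  # → 1.0
```

A sliding window keeps the measurement focused on recent behavior, so a spike early in training does not mask later improvement.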
