To address generator instability in text-based GANs, combine pre-training, gradient clipping, and scheduled updates so that generator and discriminator training stay balanced. You can follow these steps:

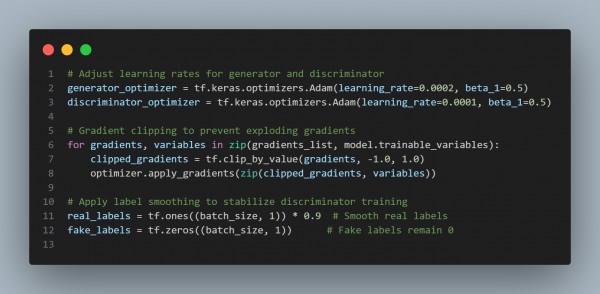

The code above uses:

- Pre-training: Start with a pre-trained model to provide a stable baseline for the generator.

- Gradient Clipping: Limits gradient magnitudes to stabilize updates during training.

- Scheduled Updates: Train the generator only on some steps so that the discriminator keeps improving alongside it and neither network overpowers the other.
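The three techniques above can be sketched in plain Python, independent of any framework. This is only an illustrative sketch, not the code from the image: the function names `clip_by_global_norm` and `updates_for_step`, the `pretrain_steps` and `gen_every` parameters, and their default values are all assumptions chosen for the example. In PyTorch, `torch.nn.utils.clip_grad_norm_` performs the same kind of norm-based clipping on model parameters.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale gradient values so their combined L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        # Already within the limit, so leave the gradients unchanged.
        return list(grads), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm


def updates_for_step(step, pretrain_steps=100, gen_every=2):
    """Return the updates to run at a given 0-based training step.

    Hypothetical schedule (an assumption for this sketch):
    - steps below pretrain_steps: pre-train the generator on its own
    - afterwards: update the discriminator every step and the
      generator only on every gen_every-th adversarial step
    """
    if step < pretrain_steps:
        return ["pretrain_generator"]
    adversarial_step = step - pretrain_steps
    updates = ["discriminator"]
    if adversarial_step % gen_every == 0:
        updates.append("generator")
    return updates


if __name__ == "__main__":
    # Gradients with norm 5.0 are scaled down to norm 1.0.
    clipped, norm_before = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
    print(norm_before, clipped)

    # Show which networks would be updated around the phase boundary.
    for step in (0, 99, 100, 101, 102):
        print(step, updates_for_step(step))
```

In a real training loop, the clipping would be applied to the generator's gradients just before the optimizer step, and the schedule would decide which optimizer steps run on each iteration. Suitable values for `max_norm` and `gen_every` depend on the model and data and usually need tuning.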

Hence, by applying the techniques above, you can mitigate generator instability in a GAN for text generation tasks.
