To prevent output duplication when training a GAN for text generation, focus on the following key points:

- Diverse Training Data: Ensure a diverse and balanced dataset to encourage varied outputs.

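- Penalty for Repetition: Add a repetition penalty (e.g., based on self-BLEU or repeated n-grams) to the generator's objective. Because these measures are computed on discrete tokens and are not differentiable, they are usually folded into the reward signal (as in policy-gradient text GANs) rather than backpropagated as a direct loss term.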

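- Temperature Sampling: Raise the sampling temperature (values above 1.0 flatten the token distribution) to increase randomness in token selection; lower temperatures make outputs more deterministic and more repetitive.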

- Regularization: Apply regularization techniques like dropout to promote diversity in learned patterns.
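A minimal sketch in plain Python (no GAN framework assumed) of two ideas from the list above: temperature-scaled sampling and a simple n-gram repetition penalty. The `penalized_reward` helper and its `weight` parameter are hypothetical; they assume a policy-gradient setup where the discriminator's score serves as the generator's reward, since the repetition ratio itself is not differentiable.

```python
import math
import random
from collections import Counter


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw logits into probabilities.

    temperature > 1 flattens the distribution (more random picks);
    temperature < 1 sharpens it (more greedy, repetitive picks).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; higher means a flatter, more random distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def sample_token(logits, temperature=1.0, rng=random):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def repeated_ngram_ratio(tokens, n=2):
    """Fraction of n-grams in one sequence that repeat an earlier n-gram (0.0 = none)."""
    grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not grams:
        return 0.0
    counts = Counter(grams)
    repeats = sum(c - 1 for c in counts.values())
    return repeats / len(grams)


def penalized_reward(discriminator_score, tokens, weight=0.5, n=2):
    """Hypothetical reward shaping: subtract a repetition penalty from the
    discriminator's score before it is used as the generator's reward."""
    return discriminator_score - weight * repeated_ngram_ratio(tokens, n)


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]
    for t in (0.5, 1.0, 2.0):
        print(t, round(entropy(softmax_with_temperature(logits, t)), 3))
    print(repeated_ngram_ratio("the cat the cat the cat".split()))  # 0.6
    print(sample_token(logits, temperature=1.5, rng=random.Random(0)))
```

Printing the entropy for temperatures 0.5, 1.0 and 2.0 shows it rising with the temperature, which is why a higher, not lower, temperature yields more varied tokens.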

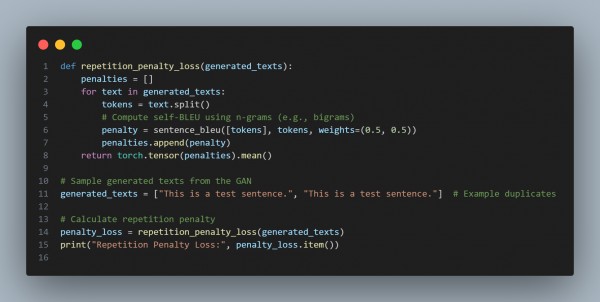

Here is the code snippet you can follow:

The code above applies the following ideas:

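- Self-BLEU: Scores each generated sample with BLEU against the other samples in the batch; a high score means the outputs resemble one another, so penalizing it discourages repetition.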

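- Diverse Sampling: Uses top-k or nucleus (top-p) sampling during generation, so tokens are drawn from a set of likely candidates instead of always taking the single most likely token.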

- Regularization: Encourages the generator to explore diverse outputs during training.
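The self-BLEU metric and the two sampling strategies listed above can be sketched in plain Python as follows. This is a from-scratch approximation, not the code in the screenshot: the BLEU here applies add-one smoothing to the n-gram precisions (an assumption that keeps short sentences from scoring zero), and `top_k_filter`, `nucleus_filter`, and `sample_from` are illustrative helper names. For real evaluation, a tested implementation such as NLTK's BLEU is preferable.

```python
import math
import random
from collections import Counter


def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def bleu(candidate, references, max_n=4):
    """Sentence-level BLEU with add-one smoothing on the n-gram precisions."""
    effective_n = min(max_n, len(candidate))
    if effective_n == 0:
        return 0.0
    log_precision = 0.0
    for n in range(1, effective_n + 1):
        cand_counts = Counter(ngrams(candidate, n))
        max_ref = Counter()
        for ref in references:
            for gram, count in Counter(ngrams(ref, n)).items():
                max_ref[gram] = max(max_ref[gram], count)
        # Clip each candidate n-gram count by its highest count in any reference.
        clipped = sum(min(c, max_ref[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        log_precision += math.log((clipped + 1) / (total + 1)) / effective_n
    # Brevity penalty against the closest reference length (ties go to the shorter one).
    c = len(candidate)
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    brevity = 1.0 if c > r else math.exp(1 - r / c)
    return brevity * math.exp(log_precision)


def self_bleu(sentences, max_n=4):
    """Average BLEU of each sentence against all the others (higher = more repetitive)."""
    if len(sentences) < 2:
        raise ValueError("self-BLEU needs at least two sentences")
    scores = []
    for i, cand in enumerate(sentences):
        refs = sentences[:i] + sentences[i + 1:]
        scores.append(bleu(cand, refs, max_n))
    return sum(scores) / len(scores)


def top_k_filter(probs, k):
    """Keep the k most likely token indices and renormalize their probabilities."""
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in top)
    return {i: probs[i] / total for i in top}


def nucleus_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


def sample_from(filtered, rng=random):
    """Draw one token index from a {index: probability} mapping."""
    tokens = list(filtered)
    return rng.choices(tokens, weights=[filtered[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    repetitive = ["the cat sat on the mat".split()] * 3
    diverse = [s.split() for s in ("the cat sat on the mat",
                                   "a dog ran in the park",
                                   "birds fly over blue water")]
    print(self_bleu(repetitive))  # 1.0
    print(self_bleu(diverse))
    probs = [0.5, 0.3, 0.15, 0.05]
    rng = random.Random(0)
    print(sample_from(top_k_filter(probs, k=2), rng))
    print(sample_from(nucleus_filter(probs, p=0.75), rng))
```

Three identical samples reach the maximum self-BLEU of 1.0, while the varied batch scores lower; tracking this value over training is one way to notice a generator drifting toward repeated outputs.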

Hence, these steps help reduce duplication and promote more varied and meaningful text generation.
