To deploy a generative AI model offline, download an open-source model once and then run it locally with PyTorch and the Hugging Face Transformers library.
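Only the download step needs network access, and it needs it only once. The following is a minimal sketch, assuming the `huggingface_hub` package is installed and the model is hosted on the Hugging Face Hub. `download_model` and `looks_like_model_dir` are hypothetical helper names. The file-name check is a rough heuristic for catching an incomplete copy before you disconnect; it is not an official completeness test.

```python
from pathlib import Path


def download_model(repo_id: str, local_dir: str) -> str:
    """Fetch every file of a Hugging Face model repo into local_dir (needs network, run once)."""
    # Imported lazily so this module can be loaded even where huggingface_hub is absent.
    from huggingface_hub import snapshot_download

    # Returns the path of the local folder that now holds the model files.
    return snapshot_download(repo_id=repo_id, local_dir=local_dir)


def looks_like_model_dir(local_dir: str) -> bool:
    """Heuristic check: a config.json plus at least one weight file must be present."""
    path = Path(local_dir)
    has_config = (path / "config.json").is_file()
    has_weights = any(path.glob("*.safetensors")) or any(path.glob("pytorch_model*.bin"))
    return has_config and has_weights
```

For example, `download_model("<repo-id>", "./models/my-model")` could be run on a connected machine, followed by `looks_like_model_dir("./models/my-model")` to check the copy before moving it to the offline host.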

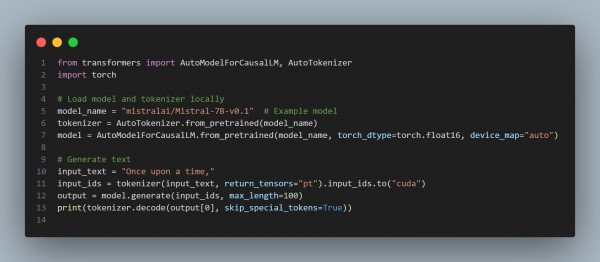

Here is the code snippet you can refer to:

The code above does the following:

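- Offline Model Loading: Loads the model and tokenizer from local files, without any remote API calls.

- Efficient Computation: `torch_dtype=torch.float16` stores the weights in half precision, roughly halving memory use compared with float32, and `device_map="auto"` places the model's layers on the available GPU(s) and CPU automatically.

- Text Generation: Tokenizes an input prompt and generates a text response with the model.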
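The three steps listed above might look roughly like this. It is a minimal sketch, not the code from the snippet, and it assumes `torch`, `transformers` and `accelerate` (required by `device_map="auto"`) are installed and the weights already sit in a local folder. `MODEL_DIR`, `enable_offline_mode` and `generate` are hypothetical names. It also falls back to float32 when no GPU is present, a choice of this sketch rather than of the original, because half precision mainly pays off on GPUs.

```python
import os

MODEL_DIR = "./models/my-model"  # hypothetical local folder holding the downloaded model


def enable_offline_mode() -> None:
    """Ask huggingface_hub and transformers never to contact the network.

    Set these before importing transformers; the libraries may read them at import time.
    """
    os.environ["HF_HUB_OFFLINE"] = "1"
    os.environ["TRANSFORMERS_OFFLINE"] = "1"


def generate(prompt: str, model_dir: str = MODEL_DIR, max_new_tokens: int = 100) -> str:
    enable_offline_mode()
    # Lazy imports so the offline flags are already set when the libraries load.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # float16 halves memory on a GPU; float32 is the safer choice on CPU-only machines.
    dtype = torch.float16 if torch.cuda.is_available() else torch.float32

    # Offline model loading: local_files_only=True forbids any download attempt.
    tokenizer = AutoTokenizer.from_pretrained(model_dir, local_files_only=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_dir,
        torch_dtype=dtype,
        device_map="auto",  # let accelerate spread layers over GPU(s) and CPU
        local_files_only=True,
    )

    # Text generation: tokenize, generate without tracking gradients, decode.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Usage would be something like `print(generate("Explain offline deployment in one sentence."))`. Passing `local_files_only=True` is already enough to keep `from_pretrained` off the network; the environment variables extend the same behavior to any other Hugging Face call made in the process.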

Hence, you can deploy and run a generative AI model completely offline by setting up a local Python environment, downloading the model weights once, and running inference with PyTorch.
