To resolve NaN gradients when training GANs in PyTorch, you can follow the steps below:

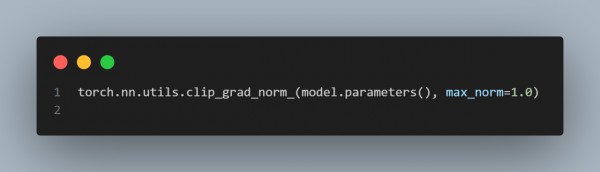

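- Use Gradient Clipping

  - Prevent exploding gradients by clipping the gradient norm after the backward pass and before the optimizer step.

- Add Small Epsilon to Logarithms

  - Avoid log(0), which evaluates to -inf and can turn gradients into NaN, by adding a small constant inside each logarithm in the loss.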
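The two steps above can be sketched together in one discriminator update. This is a minimal illustration, not a definitive implementation: the tiny `discriminator`, the `EPS` value of `1e-8`, and the `MAX_NORM` threshold of `1.0` are assumptions chosen for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical tiny discriminator, used only for illustration.
discriminator = nn.Sequential(
    nn.Linear(8, 16), nn.LeakyReLU(0.2), nn.Linear(16, 1), nn.Sigmoid()
)
optimizer = torch.optim.Adam(discriminator.parameters(), lr=2e-4)

EPS = 1e-8      # assumed value: keeps the argument of log() away from 0
MAX_NORM = 1.0  # assumed clipping threshold


def discriminator_loss(d_real, d_fake, eps=EPS):
    """Standard GAN discriminator loss with an epsilon inside each log."""
    return -(torch.log(d_real + eps) + torch.log(1.0 - d_fake + eps)).mean()


real = torch.randn(4, 8)  # stand-in for a batch of real samples
fake = torch.randn(4, 8)  # stand-in for generator output

optimizer.zero_grad()
loss = discriminator_loss(discriminator(real), discriminator(fake))
loss.backward()

# Rescale all gradients so their combined L2 norm is at most MAX_NORM.
# The return value is the total norm measured before clipping.
norm_before = torch.nn.utils.clip_grad_norm_(
    discriminator.parameters(), max_norm=MAX_NORM
)
optimizer.step()

# Even fully saturated outputs (D(real)=0, D(fake)=1) give a finite loss.
saturated_loss = discriminator_loss(torch.zeros(4, 1), torch.ones(4, 1))
```

Another option that avoids explicit logarithms is to drop the final sigmoid and feed raw logits to `nn.BCEWithLogitsLoss`, which is designed to be numerically stable.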

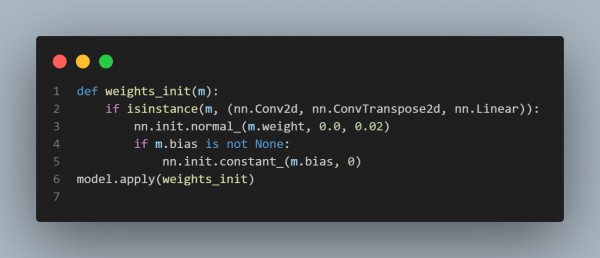

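- Check Initialization

  - Ensure proper weight initialization (for example, small zero-mean normal weights) so early activations and gradients stay in a stable range.

- Normalize Inputs

  - Normalize input data to a standard range (e.g., [-1, 1], which matches a tanh generator output).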
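As a rough sketch of these two steps, the example below applies a DCGAN-style initialization and rescales image pixels to [-1, 1]. The helper names `init_weights` and `normalize_images`, the small `generator`, and the standard deviation of `0.02` are assumptions for illustration; other initialization schemes may suit your model better.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


def init_weights(module):
    """DCGAN-style init (an assumed choice): N(0, 0.02) weights, zero biases."""
    if isinstance(module, (nn.Conv2d, nn.ConvTranspose2d, nn.Linear)):
        nn.init.normal_(module.weight, mean=0.0, std=0.02)
        if module.bias is not None:
            nn.init.zeros_(module.bias)
    elif isinstance(module, nn.BatchNorm2d):
        nn.init.normal_(module.weight, mean=1.0, std=0.02)
        nn.init.zeros_(module.bias)


# Hypothetical small generator; Tanh keeps outputs in [-1, 1].
generator = nn.Sequential(
    nn.ConvTranspose2d(16, 8, kernel_size=4, stride=2, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU(),
    nn.ConvTranspose2d(8, 3, kernel_size=4, stride=2, padding=1),
    nn.Tanh(),
)
generator.apply(init_weights)  # apply() visits every submodule recursively


def normalize_images(images_uint8):
    """Map uint8 pixels in [0, 255] to floats in [-1, 1]."""
    return images_uint8.float() / 127.5 - 1.0


# Stand-in for a batch of real RGB images.
batch = torch.randint(0, 256, (4, 3, 32, 32), dtype=torch.uint8)
normalized = normalize_images(batch)
```

Keeping real images in the same range as the generator output means the discriminator never sees inputs on very different scales.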

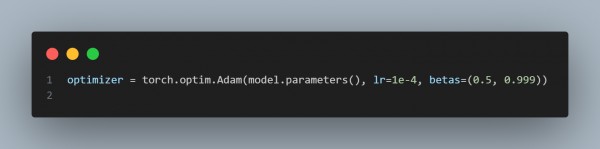

- Use Lower Learning Rates

  - Reduce the generator and discriminator learning rates if updates overshoot and the loss diverges.
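One way to apply this step is shown below as a sketch. The starting values (`lr=2e-4`, `betas=(0.5, 0.999)`) follow the common DCGAN convention and are assumptions, as are the stand-in networks and the hypothetical helper `scale_learning_rate`.

```python
import torch
import torch.nn as nn

# Hypothetical stand-ins for a real generator and discriminator.
generator = nn.Linear(16, 8)
discriminator = nn.Linear(8, 1)

# Assumed starting point; tune these for your own model and data.
opt_g = torch.optim.Adam(generator.parameters(), lr=2e-4, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(discriminator.parameters(), lr=2e-4, betas=(0.5, 0.999))


def scale_learning_rate(optimizer, factor):
    """Multiply the learning rate of every parameter group by `factor`."""
    for group in optimizer.param_groups:
        group["lr"] *= factor


# If NaNs appear, one option is to halve the rate of the unstable network
# and restart from the last good checkpoint.
scale_learning_rate(opt_d, 0.5)
```

Adjusting `param_groups` in place lets you lower the rate without rebuilding the optimizer or losing its state.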

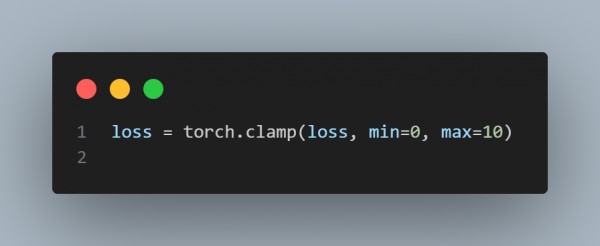

- Check Loss Functions

  - Verify that the loss is finite on every step, and scale or clip it if it grows excessively large.

- Track Gradients

  - Log per-parameter gradient norms to identify which parameters become unstable first.
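The last two steps can be sketched as small diagnostic helpers. The names `loss_is_usable`, `gradient_report`, and `non_finite_gradients`, the `max_value` threshold of `1e4`, and the toy `model` are all hypothetical and only illustrate the idea.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical model standing in for a generator or discriminator.
model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 1))


def loss_is_usable(loss, max_value=1e4):
    """True if the loss is finite and below an assumed sanity threshold."""
    return bool(torch.isfinite(loss)) and loss.item() < max_value


def gradient_report(module):
    """Return {parameter name: gradient L2 norm} for parameters with gradients."""
    return {
        name: p.grad.norm().item()
        for name, p in module.named_parameters()
        if p.grad is not None
    }


def non_finite_gradients(module):
    """Names of parameters whose gradients contain NaN or Inf."""
    return [
        name
        for name, p in module.named_parameters()
        if p.grad is not None and not torch.isfinite(p.grad).all()
    ]


x = torch.randn(4, 8)
loss = model(x).pow(2).mean()  # placeholder loss for the example

# Skip the backward pass entirely when the loss is already unusable.
if loss_is_usable(loss):
    loss.backward()

report = gradient_report(model)    # log this every N steps
bad = non_finite_gradients(model)  # empty list when all gradients are finite
```

For deeper debugging, `torch.autograd.set_detect_anomaly(True)` can report the operation that first produced a NaN during the backward pass, at the cost of slower training.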

Here are the code snippets explaining the above steps:

Hence, following these steps systematically can help identify and resolve NaN gradients in GAN training.
