To reduce instability when training GANs on high-resolution images, you can take the following steps:

- Progressive Growing: Start training with lower-resolution images and progressively increase the resolution.

- Use Spectral Normalization: Apply spectral normalization to the discriminator to stabilize training.

- Gradient Penalty: Use a gradient penalty (e.g., WGAN-GP) to stabilize the discriminator.

- Lower Learning Rate: Reduce the learning rate to slow down training dynamics.

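- Use Batch Normalization or Instance Normalization: Normalize activations to avoid unstable gradients. If you also use a gradient penalty, prefer instance or layer normalization in the discriminator, because the penalty is computed per sample and batch normalization couples the samples in a batch.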
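As a rough illustration of the progressive growing step, the sketch below maps a training step to a resolution and a fade-in weight. The function name `progressive_schedule`, the phase length, and the 4 to 256 range are assumptions chosen for the example, not values from a specific implementation.

```python
import math


def progressive_schedule(step, start_res=4, final_res=256, steps_per_phase=1000):
    """Return (resolution, alpha) for a training step.

    The starting resolution is trained for one phase. Every later resolution
    gets a fade-in phase (alpha ramps from 0 to 1) followed by a stabilization
    phase (alpha stays at 1). All defaults here are illustrative assumptions.
    """
    n_levels = int(math.log2(final_res // start_res))  # number of doublings
    if step < steps_per_phase:
        return start_res, 1.0
    step -= steps_per_phase
    level, offset = divmod(step, 2 * steps_per_phase)
    if level >= n_levels:
        return final_res, 1.0
    res = start_res * 2 ** (level + 1)
    alpha = min(1.0, offset / steps_per_phase)
    return res, alpha


# During fade-in, the new high-resolution layer is blended with the upsampled
# output of the previous stage:
#   out = alpha * new_layer_out + (1 - alpha) * upsampled_old_out
for s in (0, 1500, 3000):
    print(s, progressive_schedule(s))
```

The same `alpha` is normally applied on the discriminator side as well, so that both networks switch to the new resolution smoothly.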

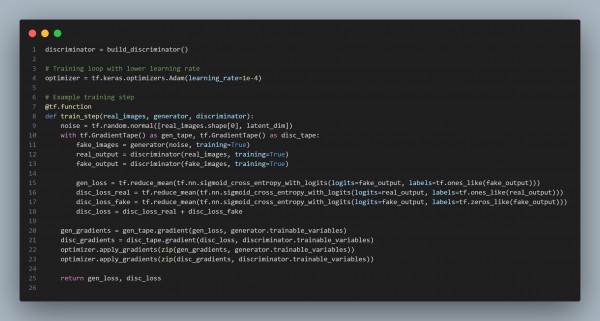

Here is the code snippet you can refer to:
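A minimal PyTorch sketch of the spectral normalization and gradient penalty steps might look like this. The `Critic` architecture, the helper names `gradient_penalty` and `critic_step`, the random tensors standing in for real and generated images, and the hyperparameters (learning rate 1e-4, penalty weight 10) are all assumptions for illustration.

```python
import torch
import torch.nn as nn
from torch.nn.utils.parametrizations import spectral_norm


class Critic(nn.Module):
    """Small illustrative critic with spectral normalization on every layer."""

    def __init__(self, channels=3, width=32):
        super().__init__()
        self.net = nn.Sequential(
            spectral_norm(nn.Conv2d(channels, width, 4, stride=2, padding=1)),
            nn.LeakyReLU(0.2),
            spectral_norm(nn.Conv2d(width, width * 2, 4, stride=2, padding=1)),
            nn.LeakyReLU(0.2),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
            spectral_norm(nn.Linear(width * 2, 1)),
        )

    def forward(self, x):
        return self.net(x)


def gradient_penalty(critic, real, fake):
    """WGAN-GP term: mean of (||grad||_2 - 1)^2 at random real/fake interpolates."""
    eps = torch.rand(real.size(0), 1, 1, 1, device=real.device)
    x_hat = (eps * real + (1 - eps) * fake).requires_grad_(True)
    scores = critic(x_hat)
    # create_graph=True keeps the graph so the penalty itself can be backpropagated.
    (grads,) = torch.autograd.grad(scores.sum(), x_hat, create_graph=True)
    return ((grads.flatten(1).norm(2, dim=1) - 1) ** 2).mean()


def critic_step(critic, opt, real, fake, gp_weight=10.0):
    """One critic update with the Wasserstein loss plus gradient penalty."""
    loss = critic(fake).mean() - critic(real).mean()
    loss = loss + gp_weight * gradient_penalty(critic, real, fake)
    opt.zero_grad()
    loss.backward()
    opt.step()
    return loss.item()


torch.manual_seed(0)
critic = Critic()
# A deliberately low learning rate; the exact value is an assumption.
opt = torch.optim.Adam(critic.parameters(), lr=1e-4, betas=(0.0, 0.9))
real = torch.randn(8, 3, 32, 32)  # stand-in for a batch of real images
fake = torch.randn(8, 3, 32, 32)  # stand-in for detached generator output
loss_value = critic_step(critic, opt, real, fake)
```

In a full training loop, `fake` would come from the generator (detached for the critic update), and the generator would be updated separately to maximize `critic(fake).mean()`.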

The code above relies on the following key points:

- Progressive Growing: Start with lower-resolution images and gradually increase resolution.

- Spectral Normalization: Apply spectral normalization to stabilize the discriminator.

- Gradient Penalty: Use WGAN-GP for better stability.

- Learning Rate: Reduce the learning rate during training to prevent instability.
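For the learning rate point, one simple option is to hold the rate constant and then decay it linearly toward zero. The sketch below is only an illustration; the function name `linear_decay_lr` and all step counts are assumed values.

```python
def linear_decay_lr(step, base_lr=1e-4, decay_start=50_000, total_steps=100_000):
    """Hold base_lr until decay_start, then decay linearly to 0 at total_steps."""
    if step <= decay_start:
        return base_lr
    remaining = max(0, total_steps - step)
    return base_lr * remaining / (total_steps - decay_start)


# With a PyTorch optimizer, the value can be applied each step like this:
#   for group in optimizer.param_groups:
#       group["lr"] = linear_decay_lr(step)
for s in (0, 75_000, 100_000):
    print(s, linear_decay_lr(s))
```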

Together, these techniques help stabilize GAN training on high-resolution images by controlling how the generator's output resolution grows and by constraining the discriminator's capacity.
