To handle batch size adjustments when training large models in Flux, you can dynamically adjust the batch size based on available GPU memory or use gradient accumulation to simulate larger batches.

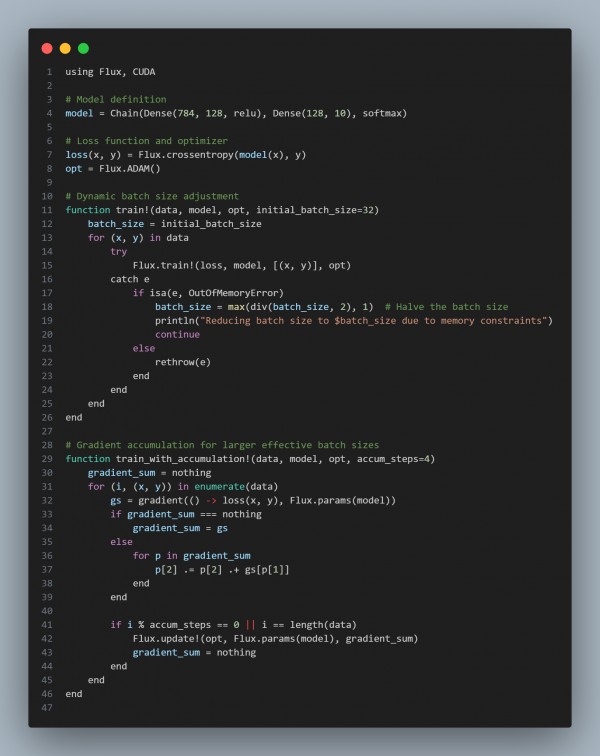

Here is the code snippet you can refer to:

The code above uses the following strategies:

- Dynamic Batch Adjustment: Reduces the batch size when a batch exceeds the available memory (an out-of-memory error), so training can continue instead of crashing.

- Gradient Accumulation: Simulates a larger batch by accumulating gradients over several smaller micro-batches and then applying a single optimizer update.

- Memory Efficiency: Keeps per-step memory within the GPU's limit while preserving the effective batch size (micro-batch size × number of accumulation steps).
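Both strategies can be sketched in plain Julia, the language Flux is written in. This is a minimal illustration, not the code from the snippet above: it has no Flux dependency so it runs standalone, a linear model with a hand-written mean-squared-error gradient stands in for a real Flux model, and the function names `adaptive_batches!`, `mse_grad`, `accumulated_grad`, and `train_linear!` are hypothetical.

```julia
# Minimal sketch in plain Julia (no Flux dependency) so it runs standalone.
# All function names here are hypothetical, chosen for illustration.

"""
    adaptive_batches!(step!, n; batch_size, min_batch_size = 1, is_oom)

Run `step!(idx)` over consecutive index ranges covering `1:n`. If `step!`
throws an error for which `is_oom(err)` is true, halve the batch size and
retry the same samples. Returns the batch size in use at the end.
"""
function adaptive_batches!(step!, n::Integer; batch_size::Integer,
                           min_batch_size::Integer = 1,
                           is_oom = err -> err isa OutOfMemoryError)
    i = 1
    while i <= n
        idx = i:min(i + batch_size - 1, n)
        try
            step!(idx)
            i = last(idx) + 1  # advance only after a successful step
        catch err
            # Re-raise anything that is not an OOM, or if we cannot shrink further.
            if !is_oom(err) || batch_size <= min_batch_size
                rethrow()
            end
            batch_size = max(min_batch_size, batch_size ÷ 2)
            @info "Out of memory; reducing batch size" batch_size
        end
    end
    return batch_size
end

# Gradient of the mean-squared-error loss for a linear model ŷ = X * w
# (rows of X are samples).
mse_grad(w, X, y) = (2 / length(y)) .* (X' * (X * w .- y))

"""
    accumulated_grad(w, X, y, micro)

Accumulate gradients over micro-batches of `micro` rows, weighting each by
its share of the samples, so the result equals the full-batch gradient while
only one micro-batch is processed at a time.
"""
function accumulated_grad(w, X, y, micro::Integer)
    n = size(X, 1)
    g = zero(w)
    for i in 1:micro:n
        idx = i:min(i + micro - 1, n)
        g .+= (length(idx) / n) .* mse_grad(w, view(X, idx, :), view(y, idx))
    end
    return g
end

# One optimizer update per accumulated gradient (plain gradient descent here).
function train_linear!(w, X, y; micro = 16, lr = 0.1, epochs = 100)
    for _ in 1:epochs
        w .-= lr .* accumulated_grad(w, X, y, micro)
    end
    return w
end
```

In a Flux training loop, `step!` would typically wrap `Flux.withgradient` and `Flux.update!` for the samples in `idx` (Flux expects samples along the last dimension, so a batch would be `x[:, idx]`). On GPU, CUDA.jl reports out-of-memory as a different exception type (`CUDA.OutOfGPUMemoryError`), which is why the OOM check is passed in as `is_oom`. For accumulation, Optimisers.jl, which Flux uses for its optimisers, also offers an `AccumGrad(n)` rule that can be chained before an optimiser such as `Adam` with `OptimiserChain`; check that your installed version provides it.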

Hence, by referring to the above, you can handle batch size adjustments when training large models in Flux.