Training instability in CycleGANs can be mitigated by using techniques such as:

- Gradient Penalty: Penalizes large discriminator gradients with respect to its inputs, which keeps the gradients passed to the generator smoother.

- Learning Rate Scheduling: Adjusts the learning rate over the course of training (for example, decaying it in later epochs) to stabilize training.

- Label Smoothing: Uses soft targets (for example, 0.9 instead of 1.0 for real samples) so the discriminator does not become overconfident, which reduces instability.

- Better Initialization: Sets the initial weights carefully (for example, small values drawn from a zero-mean normal distribution) to avoid unstable early training.
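For reference, the first two techniques can be written out explicitly. A widely used form of gradient penalty is the one from WGAN-GP (Gulrajani et al.). It is an add-on rather than part of the original CycleGAN objective. It penalizes the norm of the discriminator's input gradient at points $\hat{x}$ interpolated between a real sample $x$ and a generated sample $\tilde{x}$, with weight $\lambda$ (10 in that paper). The linear-decay schedule uses a base rate $\eta_0$, $T_c$ epochs at a constant rate and $T_d$ decay epochs. The CycleGAN paper uses $\eta_0 = 0.0002$ and $T_c = T_d = 100$.

$$
\mathcal{L}_{\mathrm{GP}} = \lambda \, \mathbb{E}_{\hat{x}}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big], \qquad \hat{x} = \epsilon x + (1 - \epsilon)\,\tilde{x}, \quad \epsilon \sim \mathcal{U}[0, 1]
$$

$$
\eta(t) = \eta_0 \left(1 - \frac{\max(0,\, t - T_c)}{T_d}\right), \qquad 0 \le t \le T_c + T_d
$$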

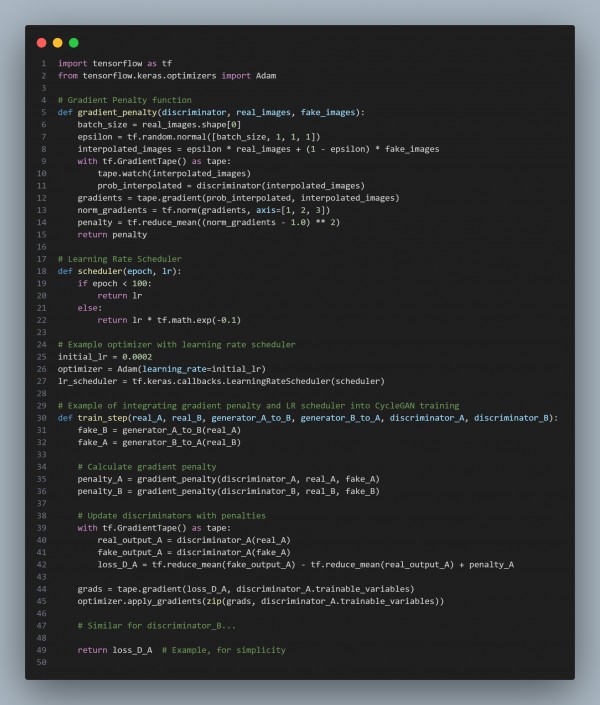

Here is an example of using gradient penalty and learning rate scheduling:
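As a minimal sketch (not necessarily the code from the original example), both techniques can be written in PyTorch as follows. It assumes image batches of shape (N, C, H, W). `gradient_penalty` and `linear_decay` are hypothetical helper names. The penalty follows the WGAN-GP formulation, and the schedule keeps the rate constant for 100 epochs and then decays it linearly to zero over 100 more. The optimizer settings in the demo (lr 2e-4, Adam betas (0.5, 0.999)) are commonly used CycleGAN values, assumed here for illustration.

```python
import torch
import torch.nn as nn


def gradient_penalty(discriminator, real, fake, weight=10.0):
    """WGAN-GP style penalty: weight * E[(||grad D(x_hat)||_2 - 1)^2],
    evaluated on random interpolates x_hat between real and fake images."""
    batch_size = real.size(0)
    # One interpolation coefficient per sample, broadcast over C, H, W
    eps = torch.rand(batch_size, 1, 1, 1, device=real.device)
    x_hat = (eps * real + (1 - eps) * fake.detach()).requires_grad_(True)
    d_out = discriminator(x_hat)
    # Gradient of the discriminator output w.r.t. its input; create_graph=True
    # keeps the graph so the penalty itself can be backpropagated
    grads = torch.autograd.grad(
        outputs=d_out,
        inputs=x_hat,
        grad_outputs=torch.ones_like(d_out),
        create_graph=True,
    )[0]
    grad_norm = grads.reshape(batch_size, -1).norm(2, dim=1)
    return weight * ((grad_norm - 1) ** 2).mean()


def linear_decay(n_constant, n_decay):
    """Multiplier for LambdaLR: 1.0 for the first n_constant epochs,
    then a linear decay that reaches 0 after n_decay more epochs."""
    def factor(epoch):
        return max(0.0, 1.0 - max(0, epoch - n_constant) / float(n_decay))
    return factor


if __name__ == "__main__":
    # Toy PatchGAN-like discriminator (no BatchNorm, since the penalty is per-sample)
    disc = nn.Sequential(
        nn.Conv2d(3, 16, 4, stride=2, padding=1),
        nn.LeakyReLU(0.2),
        nn.Conv2d(16, 1, 4, stride=1, padding=1),
    )
    opt_d = torch.optim.Adam(disc.parameters(), lr=2e-4, betas=(0.5, 0.999))
    sched_d = torch.optim.lr_scheduler.LambdaLR(opt_d, lr_lambda=linear_decay(100, 100))

    real = torch.randn(4, 3, 32, 32)
    fake = torch.randn(4, 3, 32, 32)  # stand-in for a generator's output

    # Least-squares discriminator loss plus the gradient penalty
    pred_real, pred_fake = disc(real), disc(fake.detach())
    loss_d = 0.5 * (((pred_real - 1) ** 2).mean() + (pred_fake ** 2).mean())
    loss_d = loss_d + gradient_penalty(disc, real, fake)

    opt_d.zero_grad()
    loss_d.backward()
    opt_d.step()
    sched_d.step()  # in a real training loop, call once per epoch
    print(loss_d.item(), sched_d.get_last_lr())
```

The scheduler multiplies the base learning rate by `linear_decay(...)(epoch)`, so the rate stays at 2e-4 for 100 epochs and then falls linearly to zero.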

The example relies on the following key points:

- Gradient Penalty: Stabilizes training by penalizing large discriminator gradients, which can help reduce mode collapse.

- Learning Rate Scheduling: Gradually decreases the learning rate after a certain number of epochs to prevent overshooting.

- Label Smoothing & Initialization: These can be added in the same way to further improve training stability.
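A minimal PyTorch sketch of those two additions follows. It assumes a least-squares (LSGAN) discriminator loss of the kind CycleGAN uses. `init_weights` and `discriminator_loss_smoothed` are hypothetical helper names. The real-label target of 0.9 (one-sided label smoothing) and the N(0, 0.02) weight initialization are common choices, not values taken from the original example.

```python
import torch
import torch.nn as nn


def init_weights(module, std=0.02):
    """DCGAN-style init: conv weights ~ N(0, std), norm-layer scale ~ N(1, std), biases 0."""
    if isinstance(module, (nn.Conv2d, nn.ConvTranspose2d)):
        nn.init.normal_(module.weight, 0.0, std)
        if module.bias is not None:
            nn.init.zeros_(module.bias)
    elif isinstance(module, (nn.BatchNorm2d, nn.InstanceNorm2d)):
        # InstanceNorm2d defaults to affine=False, in which case weight is None
        if module.weight is not None:
            nn.init.normal_(module.weight, 1.0, std)
            nn.init.zeros_(module.bias)


def discriminator_loss_smoothed(d_real, d_fake, real_label=0.9):
    """Least-squares discriminator loss with one-sided label smoothing:
    real targets are real_label instead of 1.0; fake targets stay at 0."""
    mse = nn.MSELoss()
    loss_real = mse(d_real, torch.full_like(d_real, real_label))
    loss_fake = mse(d_fake, torch.zeros_like(d_fake))
    return 0.5 * (loss_real + loss_fake)


if __name__ == "__main__":
    disc = nn.Sequential(
        nn.Conv2d(3, 16, 4, stride=2, padding=1),
        nn.InstanceNorm2d(16, affine=True),
        nn.LeakyReLU(0.2),
        nn.Conv2d(16, 1, 4, stride=1, padding=1),
    )
    disc.apply(init_weights)  # apply() visits every submodule recursively

    real = torch.randn(4, 3, 32, 32)
    fake = torch.randn(4, 3, 32, 32)  # stand-in for a generator's output
    loss_d = discriminator_loss_smoothed(disc(real), disc(fake.detach()))
    print(loss_d.item())
```

Only the real labels are softened here. Softening the fake labels as well would let the discriminator reward the generator for samples it already considers fake.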

Together, these techniques help reduce the common problems of unstable training and mode collapse in CycleGANs.
