To resolve stagnant training in GANs used for image-to-text translation, consider the following strategies:

- Improve Generator Initialization: Use pre-trained embeddings, or otherwise start the generator from weights that already encode a meaningful latent space instead of a random initialization.

- Adjust Learning Rates: Use an adaptive optimizer such as Adam, and tune the learning rate separately for the generator and the discriminator.

- Scheduled Training: Alternate training steps between the generator and the discriminator, adjusting how many updates each one gets, so that neither network overpowers the other.

- Regularization: Add noise to the discriminator input or apply dropout to improve generalization.

- Attention Mechanisms: Incorporate attention layers so the generator focuses on the image features most relevant to the text being produced.
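The first two points can be sketched in PyTorch as follows. This is only an illustration under stated assumptions: `CaptionGenerator`, the tensor sizes, the random `pretrained_vectors`, and the two learning rates are hypothetical placeholders, not values taken from a real model. In practice the vectors would be loaded from a pre-trained embedding file.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes and a random stand-in for pre-trained word vectors.
VOCAB, EMB_DIM, FEAT_DIM = 20, 16, 32
pretrained_vectors = torch.randn(VOCAB, EMB_DIM)


class CaptionGenerator(nn.Module):
    """Toy generator: image feature -> initial GRU state -> token logits."""

    def __init__(self, vectors, feat_dim=FEAT_DIM, hidden=32):
        super().__init__()
        # Start from the pre-trained vectors; freeze=False lets them fine-tune.
        self.embed = nn.Embedding.from_pretrained(vectors.clone(), freeze=False)
        self.init_h = nn.Linear(feat_dim, hidden)
        self.rnn = nn.GRU(vectors.size(1), hidden, batch_first=True)
        self.out = nn.Linear(hidden, vectors.size(0))

    def forward(self, image_feat, token_ids):
        # The image feature sets the initial hidden state: (1, B, hidden).
        h0 = torch.tanh(self.init_h(image_feat)).unsqueeze(0)
        states, _ = self.rnn(self.embed(token_ids), h0)
        return self.out(states)  # (B, T, VOCAB)


generator = CaptionGenerator(pretrained_vectors)

# Toy discriminator over pooled token probabilities plus the image feature.
discriminator = nn.Sequential(
    nn.Linear(VOCAB + FEAT_DIM, 64),
    nn.LeakyReLU(0.2),
    nn.Linear(64, 1),
)

# Separate Adam optimizers so each network's learning rate can be tuned alone.
# The values are illustrative starting points, not recommendations.
opt_g = torch.optim.Adam(generator.parameters(), lr=1e-4, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(discriminator.parameters(), lr=4e-4, betas=(0.5, 0.999))
```

Keeping two optimizers makes it easy to slow down whichever network is winning without touching the other.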

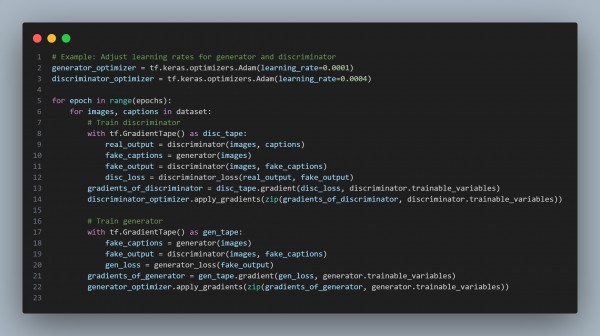

Here is the code snippet you can follow:
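A minimal PyTorch sketch of how attention, scheduled training, and regularization might fit together is shown below. Everything in it is an assumption made for illustration: the class names, the sizes, the random tensors standing in for image features and captions, the `d_steps`/`g_steps` schedule, and the use of softmax token probabilities as a differentiable stand-in for discrete text.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes chosen only for illustration.
FEAT_DIM, NUM_REGIONS, VOCAB, EMB_DIM, SEQ_LEN = 32, 6, 20, 16, 5


class Generator(nn.Module):
    """Maps image region features to per-token vocabulary logits."""

    def __init__(self):
        super().__init__()
        # One learned query per output token position.
        self.queries = nn.Parameter(torch.randn(SEQ_LEN, FEAT_DIM) * 0.1)
        self.attn = nn.MultiheadAttention(FEAT_DIM, num_heads=4, batch_first=True)
        self.out = nn.Linear(FEAT_DIM, VOCAB)

    def forward(self, regions):  # regions: (B, NUM_REGIONS, FEAT_DIM)
        q = self.queries.unsqueeze(0).expand(regions.size(0), -1, -1)
        ctx, _ = self.attn(q, regions, regions)  # each token attends over regions
        return self.out(ctx)  # (B, SEQ_LEN, VOCAB)


class Discriminator(nn.Module):
    """Scores (image, text) pairs; regularized with input noise and dropout."""

    def __init__(self, noise_std=0.1):
        super().__init__()
        self.noise_std = noise_std
        self.embed = nn.Linear(VOCAB, EMB_DIM, bias=False)  # soft embedding lookup
        self.net = nn.Sequential(
            nn.Linear(EMB_DIM + FEAT_DIM, 64),
            nn.LeakyReLU(0.2),
            nn.Dropout(0.3),
            nn.Linear(64, 1),
        )

    def forward(self, regions, token_probs):
        text = self.embed(token_probs).mean(dim=1)  # (B, EMB_DIM)
        if self.training:  # Gaussian noise on the text input, training only
            text = text + self.noise_std * torch.randn_like(text)
        img = regions.mean(dim=1)  # (B, FEAT_DIM)
        return self.net(torch.cat([text, img], dim=1)).squeeze(1)


def train(steps=20, d_steps=1, g_steps=2, batch=8):
    G, D = Generator(), Discriminator()
    opt_g = torch.optim.Adam(G.parameters(), lr=1e-4, betas=(0.5, 0.999))
    opt_d = torch.optim.Adam(D.parameters(), lr=4e-4, betas=(0.5, 0.999))
    bce = nn.BCEWithLogitsLoss()
    ones, zeros = torch.ones(batch), torch.zeros(batch)
    history = []
    for _ in range(steps):
        # Random stand-ins for image region features and reference captions.
        regions = torch.randn(batch, NUM_REGIONS, FEAT_DIM)
        real_ids = torch.randint(0, VOCAB, (batch, SEQ_LEN))
        real = nn.functional.one_hot(real_ids, VOCAB).float()

        for _ in range(d_steps):  # scheduled discriminator updates
            fake = torch.softmax(G(regions), dim=-1).detach()
            loss_d = bce(D(regions, real), ones) + bce(D(regions, fake), zeros)
            opt_d.zero_grad()
            loss_d.backward()
            opt_d.step()

        for _ in range(g_steps):  # scheduled generator updates
            fake = torch.softmax(G(regions), dim=-1)
            loss_g = bce(D(regions, fake), ones)
            opt_g.zero_grad()
            loss_g.backward()
            opt_g.step()

        history.append((loss_d.item(), loss_g.item()))
    return G, D, history


if __name__ == "__main__":
    _, _, history = train(steps=5)
    print("last (loss_d, loss_g):", history[-1])
```

If the discriminator loss falls toward zero while the generator loss keeps rising, lowering `d_steps` relative to `g_steps` or increasing `noise_std` are two knobs to try first.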

The above code applies the following strategies:

- Attention Mechanisms: Improve focus on critical features for image-to-text mapping.

- Scheduled Training: Balances generator and discriminator updates.

- Regularization: Enhances stability and prevents overfitting.

Hence, these strategies help overcome stagnant training and improve performance in GANs for image-to-text translation.
