Contrastive Divergence (CD) plays an important role in fine-tuning generative image models.

- It approximates the gradient of the log-likelihood when training generative models such as Restricted Boltzmann Machines (RBMs), where the exact gradient is intractable because it requires an expectation over the model's full distribution.

- It fine-tunes generative image models by adjusting the weights to reduce the difference between the distribution of the model's generated samples and the real data distribution.
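To make the approximation concrete, here is the standard form of the CD-k weight gradient for an RBM. The notation is an assumption and does not come from the original answer: $v_i$ and $h_j$ are visible and hidden units, $W_{ij}$ is the weight between them, and $\langle\cdot\rangle_k$ is an average over samples obtained after $k$ Gibbs steps started from the data.

$$
\frac{\partial \log p(v)}{\partial W_{ij}}
= \langle v_i h_j \rangle_{\text{data}} - \langle v_i h_j \rangle_{\text{model}}
\approx \langle v_i h_j \rangle_{\text{data}} - \langle v_i h_j \rangle_{k}
$$

The first term corresponds to the positive phase and the second to the negative phase; CD replaces the intractable model expectation with the short-chain estimate on the right.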

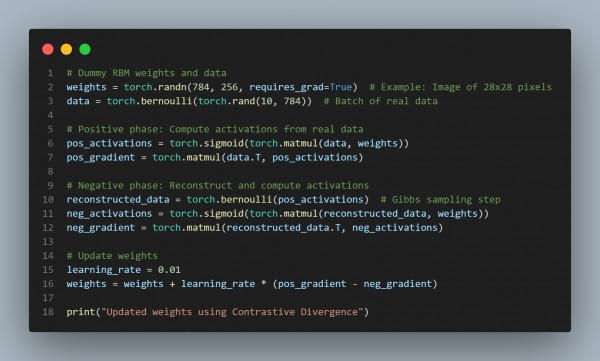

Here is the code snippet you can refer to:

In the above code, we used the positive phase to sample from the real data distribution and the negative phase to sample from the model's current distribution, and then updated the weights using the difference between the two.
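The three steps just described can be sketched in NumPy as a single CD-1 update for a Bernoulli RBM. This is a minimal illustration under stated assumptions, not the original snippet: the function name `cd1_step`, the parameter names, the toy data, and the learning rate are all hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def cd1_step(v0, W, b_vis, b_hid, lr=0.1, rng=None):
    """One CD-1 update for a Bernoulli RBM (hypothetical helper).

    v0 has shape (batch, n_visible); W, b_vis, b_hid are updated in place.
    """
    rng = np.random.default_rng() if rng is None else rng

    # Positive phase: hidden units driven by the real data.
    h0_prob = sigmoid(v0 @ W + b_hid)
    h0 = (rng.random(h0_prob.shape) < h0_prob).astype(v0.dtype)

    # Negative phase: one Gibbs step gives a sample from the model.
    v1_prob = sigmoid(h0 @ W.T + b_vis)
    v1 = (rng.random(v1_prob.shape) < v1_prob).astype(v0.dtype)
    h1_prob = sigmoid(v1 @ W + b_hid)

    # Update: data-driven statistics minus model-driven statistics.
    n = v0.shape[0]
    W += lr * (v0.T @ h0_prob - v1.T @ h1_prob) / n
    b_vis += lr * (v0 - v1).mean(axis=0)
    b_hid += lr * (h0_prob - h1_prob).mean(axis=0)

    # Reconstruction error, useful only as a rough training monitor.
    return float(np.mean((v0 - v1_prob) ** 2))

# Toy usage on random binary "images" (assumed data, 6 pixels, 3 hidden units).
rng = np.random.default_rng(0)
data = (rng.random((64, 6)) < 0.5).astype(np.float64)
W = 0.01 * rng.standard_normal((6, 3))
b_vis = np.zeros(6)
b_hid = np.zeros(3)
for _ in range(100):
    err = cd1_step(data, W, b_vis, b_hid, rng=rng)
```

Using hidden probabilities rather than sampled states in the weight update is a common variance-reduction choice; running more Gibbs steps in the negative phase would give CD-k.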

The main roles of CD are:

- Efficient Training: Simplifies computation of gradients for generative models.

- Improves Representations: Aligns generated images closer to real ones.

- Stable Convergence: Fine-tunes the model effectively using only a few Gibbs sampling steps instead of running a full MCMC chain to equilibrium.

This is why contrastive divergence plays an important role in fine-tuning generative image models.
