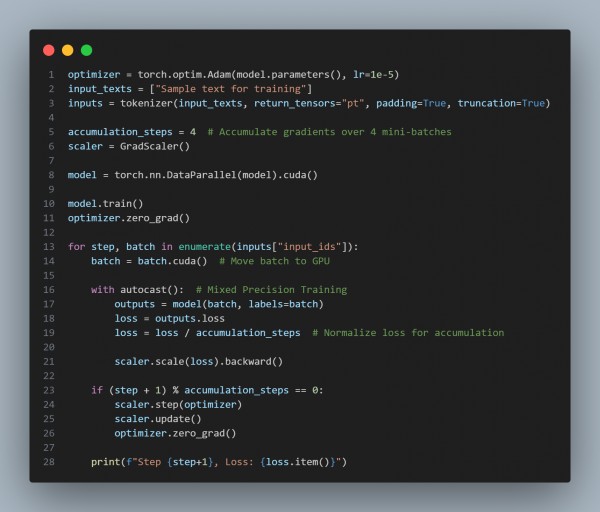

You can improve efficiency when training or fine-tuning generative models by referring to the following code snippet:

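The reference code uses three techniques. Gradient Accumulation sums gradients over several small micro-batches before each optimizer step, which simulates a larger batch size without having to fit that whole batch in memory at once. Mixed Precision Training runs most computations in 16-bit floating point, which cuts memory use and speeds up training on hardware that supports it, while keeping a 32-bit copy of the weights for stable updates. Data Parallelism splits each batch across multiple GPUs, each holding a copy of the model, and averages their gradients so that every copy applies the same update.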

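Combined, these techniques make training faster and less memory-intensive. When configured carefully (for example, using loss scaling so that small 16-bit gradients do not underflow to zero), they do so without sacrificing model quality. The model therefore still gives relevant outputs during inference, which supports more reliable and responsible deployment of generative models.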
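The following is a minimal, framework-free sketch of the arithmetic behind the three techniques; it is not the reference code mentioned above. It assumes a toy one-parameter model `y_hat = w * x` trained with mean squared error, and all helper names (`grad_mse`, `accumulated_grad`, `data_parallel_grad`, `to_fp16`, `fp16_grad_with_loss_scaling`, `train`) are hypothetical. Data parallelism is only simulated by slicing the batch into shards inside one process, and half precision is emulated with the `"e"` format of Python's `struct` module. Scaling the gradient directly stands in for scaling the loss, which is equivalent because backpropagation is linear in the loss.

```python
import struct


def grad_mse(w, batch):
    """Gradient of the mean squared error for the toy model y_hat = w * x.

    d/dw mean((w*x - y)^2) = mean(2 * x * (w*x - y))
    """
    return sum(2 * x * (w * x - y) for x, y in batch) / len(batch)


def accumulated_grad(w, batch, accum_steps):
    """Gradient accumulation: process the batch as equal micro-batches and
    add each micro-batch gradient scaled by 1/accum_steps (the usual
    'loss / accum_steps' trick). Assumes len(batch) % accum_steps == 0."""
    size = len(batch) // accum_steps
    total = 0.0
    for i in range(accum_steps):
        micro_batch = batch[i * size:(i + 1) * size]
        total += grad_mse(w, micro_batch) / accum_steps
    return total


def data_parallel_grad(w, batch, num_workers, accum_steps=1):
    """Simulated data parallelism: each hypothetical worker gets an equal
    shard (optionally accumulating over micro-batches), then the local
    gradients are averaged, as an all-reduce would do.
    Assumes len(batch) % (num_workers * accum_steps) == 0."""
    size = len(batch) // num_workers
    local_grads = [
        accumulated_grad(w, batch[r * size:(r + 1) * size], accum_steps)
        for r in range(num_workers)
    ]
    return sum(local_grads) / num_workers


def to_fp16(value):
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("e", struct.pack("e", value))[0]


def fp16_grad_with_loss_scaling(grad, scale=2.0 ** 16):
    """Static loss scaling: multiply before the fp16 cast so tiny gradients
    stay representable, then divide by the scale in full precision.
    Assumes grad * scale fits in the fp16 range (about +/-65504).
    Real scalers adjust the scale dynamically and skip steps on overflow."""
    return to_fp16(grad * scale) / scale


def train(batch, steps=200, lr=0.01, num_workers=2, accum_steps=2):
    """Plain gradient descent on w using the combined gradient."""
    w = 0.0
    for _ in range(steps):
        w -= lr * data_parallel_grad(w, batch, num_workers, accum_steps)
    return w


if __name__ == "__main__":
    batch = [(float(x), 2.0 * x) for x in range(1, 9)]  # true w is 2
    print(round(train(batch), 3))  # prints 2.0
```

The key property is that the accumulated and sharded gradients match the full-batch gradient, so the effective batch size is the micro-batch size times the accumulation steps times the number of workers. In PyTorch the same ideas are typically expressed by dividing the loss by the number of accumulation steps and calling `optimizer.step()` only every N micro-batches, wrapping the forward pass in `torch.autocast` together with a `GradScaler`, and wrapping the model in `DistributedDataParallel`, which averages gradients across processes.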

Check out our post on balancing model size and accuracy in fine-tuned generative models.

Related Post: How to ensure stable training of generative models
