Hyperparameter search automates the tuning of critical parameters (e.g., learning rate, batch size, latent space dimensionality) to improve model performance and training stability.

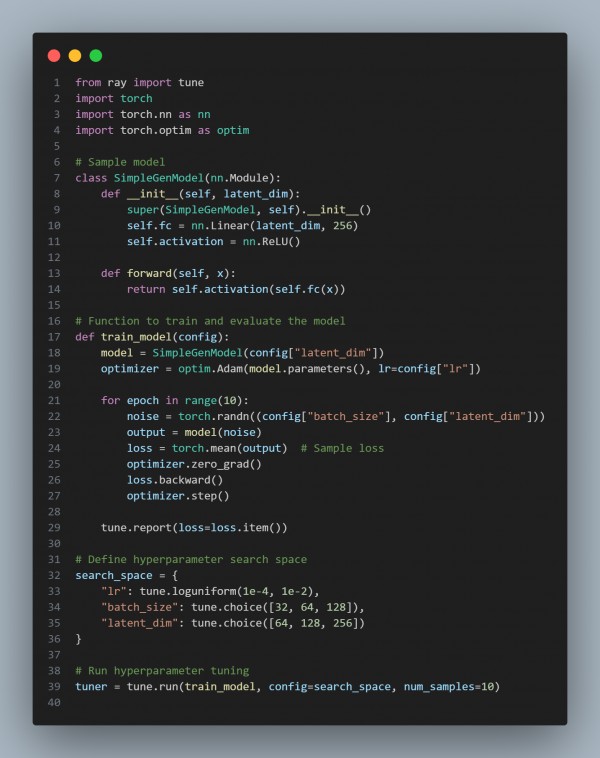
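To make the idea of a "search space" concrete, here is a minimal, library-free sketch of random sampling over the three parameters named above. In Ray Tune such a space would typically be declared with `tune.loguniform` and `tune.choice`. This version uses only the Python standard library so it runs without Ray, and the ranges and the helper name `sample_config` are illustrative assumptions, not values or code from the article.

```python
import math
import random

# Hypothetical search space (ranges are assumptions for illustration).
# Learning rate is sampled on a log scale because plausible values span
# several orders of magnitude; batch size and latent dimension are categorical.
SEARCH_SPACE = {
    "lr": ("loguniform", 1e-5, 1e-2),
    "batch_size": ("choice", [32, 64, 128, 256]),
    "latent_dim": ("choice", [64, 100, 128, 256]),
}


def sample_config(space, rng):
    """Draw one random configuration from the search space."""
    config = {}
    for name, spec in space.items():
        kind = spec[0]
        if kind == "loguniform":
            low, high = spec[1], spec[2]
            # Sample uniformly in log space, then map back to the original scale.
            config[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
        elif kind == "choice":
            config[name] = rng.choice(spec[1])
        else:
            raise ValueError(f"unknown distribution: {kind}")
    return config


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the draws are reproducible
    for _ in range(3):
        print(sample_config(SEARCH_SPACE, rng))
```

Sampling the learning rate in log space means values near 1e-5 are drawn about as often as values near 1e-3, which a plain uniform draw over [1e-5, 1e-2] would almost never produce.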

Here is the code snippet you can refer to:

The code above highlights the following key points:

- Automated Hyperparameter Optimization: Uses Ray Tune to search for good parameter settings efficiently instead of tuning them by hand.

- Explores Learning Rate, Batch Size, and Latent Space: Searches over these parameters to optimize generative model performance.

- Parallelized Search: Evaluates multiple configurations concurrently, which shortens overall tuning time.

- Reduces Overfitting & Mode Collapse: Well-tuned settings can improve training stability in GANs.

- Scalable for Large Models: Adapts to different neural network architectures.
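The "parallelized search" idea can be sketched without Ray as a grid search whose trials run concurrently. This is a swapped-in, standard-library illustration, not the article's Ray Tune code: `toy_objective` is a hypothetical stand-in for "train the model with this config and return a validation loss", and the grid values are assumptions. Ray Tune offers `tune.grid_search` for the same kind of exhaustive sweep.

```python
import itertools
import math
from concurrent.futures import ThreadPoolExecutor


def toy_objective(config):
    """Hypothetical stand-in for training a generative model and returning a
    validation loss. It pretends the best settings are lr=1e-3,
    batch_size=64, latent_dim=128."""
    return (
        (math.log10(config["lr"]) + 3) ** 2
        + (config["batch_size"] / 64 - 1) ** 2
        + (config["latent_dim"] / 128 - 1) ** 2
    )


def grid_search(grid, objective, max_workers=4):
    """Evaluate every combination in `grid` concurrently.

    Returns (best_config, best_loss)."""
    names = list(grid)
    # Cartesian product of all candidate values -> one dict per trial.
    configs = [dict(zip(names, values)) for values in itertools.product(*grid.values())]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as `configs`.
        losses = list(pool.map(objective, configs))
    # Pick the lowest loss; ties are broken by the earlier trial index.
    best_loss, best_idx = min((loss, i) for i, loss in enumerate(losses))
    return configs[best_idx], best_loss


if __name__ == "__main__":
    grid = {
        "lr": [1e-4, 1e-3, 1e-2],
        "batch_size": [32, 64, 128],
        "latent_dim": [64, 128, 256],
    }
    best, loss = grid_search(grid, toy_objective)
    print(best, loss)
```

Threads keep the sketch short; for CPU-bound pure-Python objectives a `ProcessPoolExecutor`, or Ray Tune itself, which schedules trials across processes and machines, is the more typical choice.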
