You can use Byte-Pair Encoding (BPE) to train a tokenizer for a new foundation model by pre-tokenizing a corpus, learning subword merges from it, and saving the learned vocabulary and merge rules.

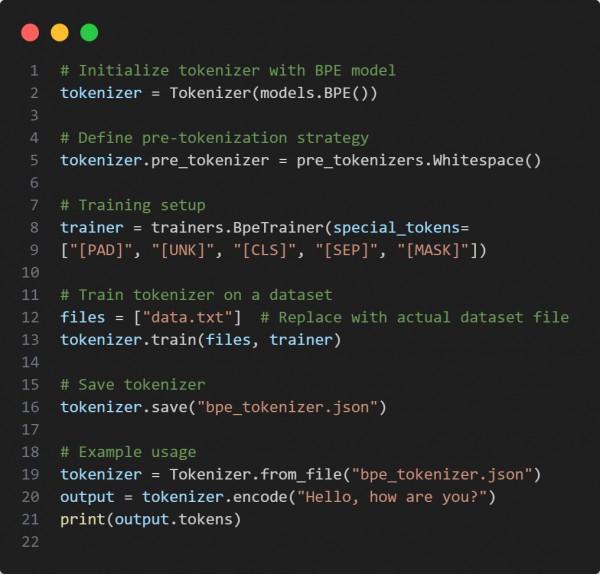

Here is the code snippet you can refer to:
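A minimal sketch of such a training script, assuming the Hugging Face `tokenizers` library (the source of the `models.BPE`, `pre_tokenizers.Whitespace`, and `trainers.BpeTrainer` names listed below). The tiny in-memory corpus, the vocabulary size, the special tokens, and the output filename `bpe_tokenizer.json` are illustrative assumptions, not values from the original answer:

```python
from tokenizers import Tokenizer, models, pre_tokenizers, trainers

# Tiny in-memory corpus (assumption for illustration; a real foundation-model
# tokenizer would be trained on a far larger and more varied dataset).
corpus = [
    "Byte-Pair Encoding learns subword units from raw text.",
    "Rare words are split into smaller, more frequent pieces.",
    "The tokenizer is trained once and reused by the model.",
]

# BPE model with an explicit unknown token for symbols never seen in training.
tokenizer = Tokenizer(models.BPE(unk_token="[UNK]"))

# Split the text into words and punctuation before BPE merges are learned.
tokenizer.pre_tokenizer = pre_tokenizers.Whitespace()

# The trainer learns merges until the vocabulary reaches vocab_size
# (200 is an arbitrary small value chosen for this toy corpus).
trainer = trainers.BpeTrainer(
    vocab_size=200,
    special_tokens=["[UNK]", "[PAD]"],
)
tokenizer.train_from_iterator(corpus, trainer=trainer)

# Serialize the vocabulary, merges, and pipeline settings to one JSON file.
tokenizer.save("bpe_tokenizer.json")

# Reload the saved tokenizer and encode a sample string.
reloaded = Tokenizer.from_file("bpe_tokenizer.json")
encoding = reloaded.encode("Rare subword units")
print(encoding.tokens)
print(encoding.ids)
```

For a corpus stored on disk, `tokenizer.train(files=[...], trainer=trainer)` can be used in place of `train_from_iterator`.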

The code above relies on the following key components:

- BPE Model (`models.BPE()`): Represents text as subword units built by repeatedly merging frequent adjacent symbol pairs.

- Whitespace Pre-tokenization (`pre_tokenizers.Whitespace()`): Splits the text into words and punctuation before BPE is applied.

- Trainer (`trainers.BpeTrainer()`): Learns the token merges from the dataset.

- Serialization (`tokenizer.save()`): Saves the trained tokenizer for later use.
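To make concrete what "learns token merges" means, here is a simplified pure-Python sketch of the BPE merge loop. It is not how the `tokenizers` library implements training; the function name `learn_bpe_merges` and the toy word counts are hypothetical and chosen only for illustration:

```python
from collections import Counter


def learn_bpe_merges(word_counts, num_merges):
    """Return the first `num_merges` BPE merges learned from word frequencies."""
    # Start with every word split into single characters.
    vocab = Counter({tuple(word): count for word, count in word_counts.items()})
    merges = []
    for _ in range(num_merges):
        # Count how often each adjacent symbol pair occurs, weighted by word frequency.
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # Every word is already a single symbol.
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)

        # Rewrite every word, replacing each occurrence of the best pair
        # with a single merged symbol.
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
    return merges


# Toy word frequencies (assumed data).
word_counts = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}
merges = learn_bpe_merges(word_counts, num_merges=3)
print(merges)  # [('u', 'g'), ('u', 'n'), ('h', 'ug')]
```

Each merge adds one new symbol to the vocabulary, so the number of merges directly controls the final vocabulary size.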

Hence, BPE-based tokenization lets a language model handle rare and unseen words by splitting them into known subword units, which keeps the vocabulary compact.
