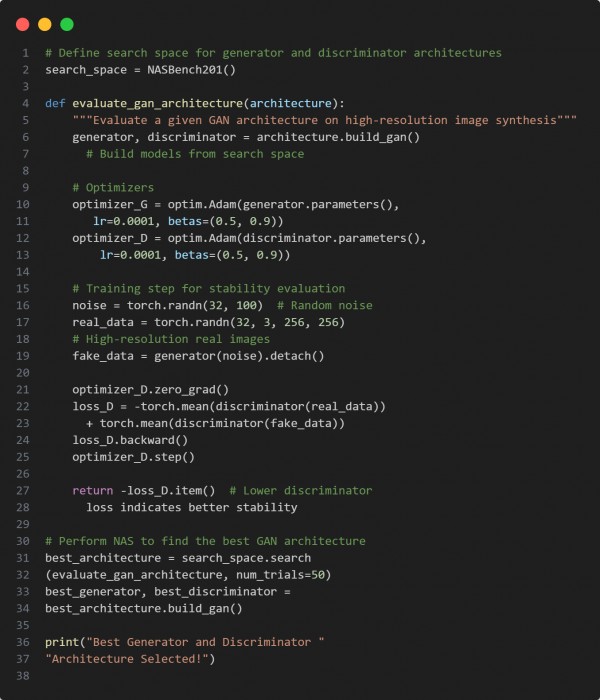

Use Neural Architecture Search (NAS) to optimize GAN performance by automating the selection of generator and discriminator architectures for high-resolution image synthesis. Here is the code snippet you can refer to:
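Below is a minimal sketch of the search loop, assuming plain random search (the simplest NAS strategy) over a small search space. The search-space keys (`g_base_channels`, `d_spectral_norm`, and so on) and the helpers `num_upsampling_blocks`, `sample_architecture`, `random_search` and `toy_evaluate` are hypothetical. `toy_evaluate` is only a placeholder: in a real setup it would briefly train the sampled GAN and return a score where lower is better. The number of upsampling blocks is derived from the target resolution, so a 4×4 seed map needs 6 doublings to reach 256×256.

```python
import math
import random
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical search space: each key maps to the candidate values NAS may pick.
SEARCH_SPACE: Dict[str, List] = {
    "g_base_channels": [32, 64, 128],
    "d_base_channels": [32, 64, 128],
    "g_norm": ["batch", "instance", "none"],
    "d_spectral_norm": [True, False],
    "latent_dim": [128, 256, 512],
}


def num_upsampling_blocks(resolution: int, start: int = 4) -> int:
    """Number of 2x upsampling blocks that grow a start x start map to resolution x resolution."""
    blocks = int(math.log2(resolution // start))
    if start * 2 ** blocks != resolution:
        raise ValueError("resolution must equal start * 2**k")
    return blocks


def sample_architecture(rng: random.Random, resolution: int) -> Dict:
    """Draw one candidate generator/discriminator configuration."""
    arch = {key: rng.choice(values) for key, values in SEARCH_SPACE.items()}
    arch["num_blocks"] = num_upsampling_blocks(resolution)  # 6 for 256x256
    return arch


def random_search(
    evaluate: Callable[[Dict], float],
    num_trials: int = 20,
    resolution: int = 256,
    seed: int = 0,
) -> Tuple[Optional[Dict], float]:
    """Random-search NAS: sample candidates and keep the one with the lowest score."""
    rng = random.Random(seed)
    best_arch, best_score = None, math.inf
    for _ in range(num_trials):
        arch = sample_architecture(rng, resolution)
        score = evaluate(arch)  # lower is better
        if score < best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


def toy_evaluate(arch: Dict) -> float:
    """Placeholder for a short GAN training run; NOT a real quality measure."""
    # Pretend that balanced G/D capacity and spectral norm in D train more stably.
    imbalance = abs(math.log2(arch["g_base_channels"] / arch["d_base_channels"]))
    return imbalance + (0.0 if arch["d_spectral_norm"] else 0.5)


if __name__ == "__main__":
    best, score = random_search(toy_evaluate, num_trials=30)
    print(best, score)
```

Swapping `random_search` for an evolutionary or reinforcement-learning controller only changes how candidates are proposed; the sample, evaluate, keep-the-best structure stays the same.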

The code above relies on the following key approaches:

- Automated Architecture Search: Uses NAS to search over generator and discriminator structures instead of hand-designing them.
- Performance-Based Selection: Evaluates candidate architectures based on discriminator loss and training stability.
- High-Resolution Training: Targets 256×256 image synthesis for improved realism.
- Scalable Search Space: Can be extended to larger architectures for higher image fidelity.
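One way to turn "discriminator loss and training stability" into a single selection criterion is sketched below. It assumes the standard binary cross-entropy discriminator loss $L_D = -\log D(x) - \log(1 - D(G(z)))$: at the theoretical equilibrium $D$ outputs $1/2$ everywhere, so $L_D = 2\ln 2 \approx 1.386$. The hypothetical `stability_score` penalizes both the distance of recent losses from that value and their spread; the names, window size and penalty weight are illustrative assumptions. In practice, sample-quality metrics such as FID are often used to rank GAN candidates instead of, or alongside, loss curves.

```python
import math
import statistics
from typing import Dict, Sequence

# Equilibrium of L_D = -log D(x) - log(1 - D(G(z))) when D(.) = 1/2 everywhere.
# If your training loop averages the real and fake terms, use math.log(2) instead.
EQUILIBRIUM_D_LOSS = 2 * math.log(2)


def stability_score(d_losses: Sequence[float], window: int = 100, penalty: float = 1.0) -> float:
    """Lower is better: distance of recent D losses from equilibrium plus their spread."""
    if len(d_losses) < 2:
        raise ValueError("need at least two recorded losses")
    recent = list(d_losses[-window:])  # only the most recent training steps
    mean = statistics.fmean(recent)
    spread = statistics.pstdev(recent)  # oscillation penalizes unstable training
    return abs(mean - EQUILIBRIUM_D_LOSS) + penalty * spread


def select_best(loss_traces: Dict[str, Sequence[float]]) -> str:
    """Return the candidate whose discriminator-loss trace scores lowest."""
    return min(loss_traces, key=lambda name: stability_score(loss_traces[name]))


if __name__ == "__main__":
    traces = {
        "arch_a": [1.39, 1.38, 1.40, 1.37, 1.39],  # near equilibrium, steady
        "arch_b": [0.05, 0.04, 0.03, 0.02, 0.02],  # discriminator overpowers generator
        "arch_c": [0.4, 2.5, 0.3, 2.8, 0.5],       # oscillating
    }
    print(select_best(traces))  # arch_a
```

The loss values in `traces` are made up purely to illustrate the three typical behaviours; real traces would come from short training runs of each candidate.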

Hence, by leveraging NAS, we can systematically search for well-performing GAN architectures, improving both output quality and training stability in high-resolution image generation.
