The Maluuba seq2seq model integrates an attention mechanism to dynamically focus on relevant encoder states while generating each decoder output.
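The pictured code does not state which scoring function it uses. Under that caveat, the generic soft-attention step that "dynamically focuses on relevant encoder states" can be written as follows. Here $h_i$ are the encoder states, $s_{t-1}$ is the previous decoder state, $T_x$ is the input length, and $\operatorname{score}$ is an assumed placeholder (for example a dot product or a small feed-forward network):

$$
e_{t,i} = \operatorname{score}(s_{t-1}, h_i), \qquad
\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{j=1}^{T_x} \exp(e_{t,j})}, \qquad
c_t = \sum_{i=1}^{T_x} \alpha_{t,i}\, h_i
$$

The weights $\alpha_{t,i}$ sum to 1 over the input positions, so the context vector $c_t$ is a weighted average of the encoder states, recomputed at every decoder step $t$.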

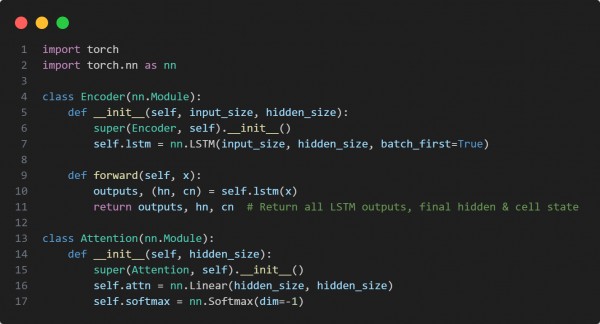

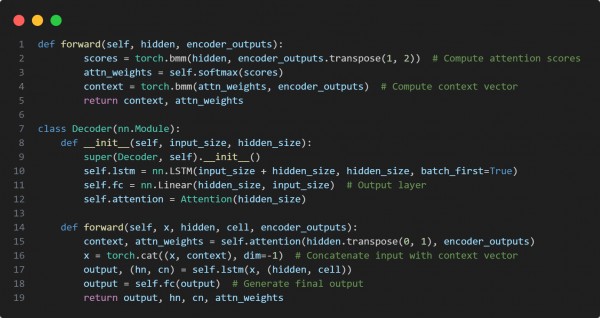

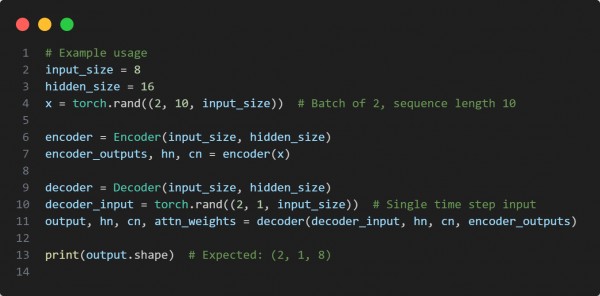

The code snippets shown in the images above use the following techniques:

- Implements an LSTM-based Encoder to process input sequences.

- Defines an Attention layer that computes soft attention over encoder outputs.

- Uses an LSTM Decoder that dynamically attends to encoder states.

- Concatenates the context vector with the decoder input at each step.

- Outputs predictions and attention weights for sequence generation.
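Because the original code is only available as images, the attention and concatenation steps from the list above are sketched below in plain Python. This is a minimal illustration, not the pictured implementation. It assumes dot-product scoring, uses plain lists instead of tensors, and omits the LSTM cells themselves. The function names `soft_attention` and `decoder_step_input` are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(decoder_state: Vector,
                   encoder_outputs: List[Vector]) -> Tuple[Vector, Vector]:
    """Soft attention over encoder outputs (dot-product scoring is an assumption).

    Returns (context_vector, attention_weights).
    """
    # One score per encoder time step.
    scores = [dot(decoder_state, h) for h in encoder_outputs]
    # Normalise scores into weights that sum to 1.
    weights = softmax(scores)
    # Context vector = weighted sum of encoder outputs, dimension by dimension.
    dim = len(encoder_outputs[0])
    context = [sum(w * h[i] for w, h in zip(weights, encoder_outputs))
               for i in range(dim)]
    return context, weights


def decoder_step_input(embedded_token: Vector, decoder_state: Vector,
                       encoder_outputs: List[Vector]) -> Tuple[Vector, Vector]:
    """Build the input for one decoder LSTM step (the LSTM cell itself is omitted).

    The context vector is concatenated with the embedded decoder input token,
    and the attention weights are returned alongside it for inspection.
    """
    context, weights = soft_attention(decoder_state, encoder_outputs)
    lstm_input = embedded_token + context  # list concatenation
    return lstm_input, weights
```

For example, with `encoder_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]` and a decoder state of `[2.0, 0.0]`, the first and third encoder states score equally and receive most of the weight, while the second is largely ignored. A trained model would replace the fixed dot product with learned parameters and feed `lstm_input` into an actual LSTM cell.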

Hence, the Maluuba seq2seq model integrates attention by letting each decoder step focus on the most relevant encoder states, which helps translation and other sequence generation tasks.
