Yes, attention can be applied to feedforward neural networks: adding a self-attention layer lets the network weigh each input feature by its relevance, with the weights recomputed for every input rather than fixed after training.

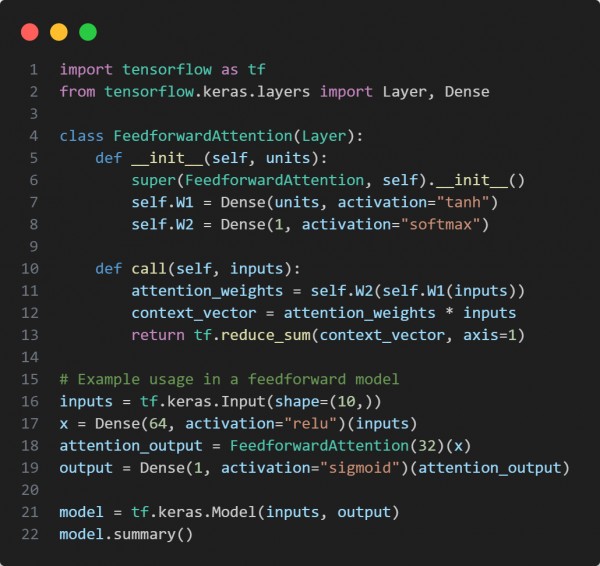

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Uses trainable dense layers to compute attention scores.

- Applies softmax to determine feature importance.

- Aggregates weighted inputs for improved decision-making.
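
The three points above can be illustrated with a minimal forward-pass sketch in plain Python. This is not the original snippet. It is an illustration under assumptions: the helper names (`dense`, `init_layer`, `attention_forward`), the layer sizes, and the random weights are all hypothetical, and the random weights stand in for parameters that a real framework would learn during training.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, weights, bias):
    """Dense layer: one output per row of `weights` (no activation)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def init_layer(n_out, n_in, rng):
    """Small random weights and zero biases (placeholder for trained values)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def attention_forward(x, score_layer, output_layer):
    """Feature-wise attention followed by a feedforward output layer."""
    # 1. A dense layer produces one attention score per input feature.
    scores = dense(x, *score_layer)
    # 2. Softmax turns the scores into importance weights that sum to 1.
    attn = softmax(scores)
    # 3. Each feature is rescaled by its weight; the output layer then
    #    aggregates the weighted features.
    weighted = [a * xi for a, xi in zip(attn, x)]
    return dense(weighted, *output_layer), attn


rng = random.Random(0)
x = [0.2, -1.3, 0.7, 2.1]                       # one example with 4 features
score_layer = init_layer(len(x), len(x), rng)   # features -> attention scores
output_layer = init_layer(2, len(x), rng)       # weighted features -> 2 outputs

outputs, attn = attention_forward(x, score_layer, output_layer)
print("attention weights:", [round(a, 3) for a in attn])
print("outputs:", [round(o, 3) for o in outputs])
```

Because the scores are computed from `x` itself, a different input yields different attention weights, which is what makes the weighting dynamic. In a framework such as Keras or PyTorch, the same three steps would map onto a trainable dense layer, a softmax, and an element-wise multiply.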

Hence, integrating attention into feedforward networks enhances their ability to capture dependencies within input features dynamically.
