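The Transformer model's attention mechanism handles differing sequence lengths with masks. A padding mask stops every position from attending to the pad tokens added to shorter sequences in a batch. A causal mask stops each position from attending to later positions. Together they make sure only the intended positions receive attention weight.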
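
As a minimal sketch, assuming right-padded sequences, here is one way both masks could be built in NumPy. The convention is that `True` means "may attend". The helper names `padding_mask`, `causal_mask` and `combined_mask` are hypothetical and are not taken from the original snippet. The `(batch, query, key)` layout mirrors the `(B, T, S)` shape that Keras's `MultiHeadAttention` documents for its `attention_mask` argument.

```python
import numpy as np

def padding_mask(lengths, max_len):
    """Boolean (batch, max_len): True for real tokens, False for padding (assumes right-padding)."""
    positions = np.arange(max_len)[None, :]          # (1, max_len)
    return positions < np.asarray(lengths)[:, None]  # (batch, max_len)

def causal_mask(seq_len):
    """Boolean (seq_len, seq_len), lower-triangular: query i may attend to keys j <= i."""
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def combined_mask(lengths, max_len, causal=False):
    """Boolean (batch, query, key) mask; True = query may attend to key."""
    batch = len(lengths)
    # Padding is masked on the key axis and broadcast over every query row.
    keys_ok = padding_mask(lengths, max_len)[:, None, :]          # (batch, 1, max_len)
    mask = np.ones((batch, max_len, max_len), dtype=bool) & keys_ok
    if causal:
        mask = mask & causal_mask(max_len)[None, :, :]           # also block future keys
    return mask

m = combined_mask([3, 1], max_len=4, causal=True)
print(m.astype(int))
```

Padded *query* rows (for example row 3 of the first sequence) are still allowed to attend to real keys. Their outputs are simply ignored downstream, which is the usual convention.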

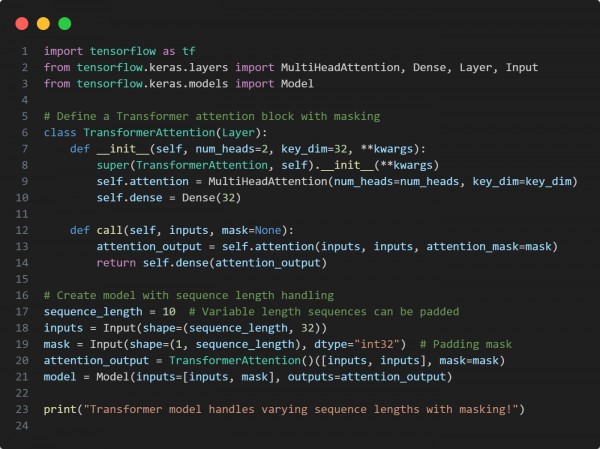

Here is the code snippet you can refer to:

The above code illustrates the following key points:

- It uses `MultiHeadAttention` with an `attention_mask`, so masked positions receive no attention weight regardless of how long each sequence is.

- It implements a Transformer block that works on batches of different sequence lengths.

- It accepts a padding mask so that padded tokens in the input are ignored.
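
To show what the `attention_mask` does inside the attention computation, here is a framework-free NumPy sketch of masked scaled dot-product attention. It is not the original snippet and not Keras's implementation. It is a single head with no learned projections, using a hypothetical `masked_attention` helper. Masked scores are replaced by a large negative value before the softmax, so padded keys end up with zero weight.

```python
import numpy as np

def masked_attention(q, k, v, mask):
    """Single-head scaled dot-product attention.

    q: (batch, T, d); k, v: (batch, S, d); mask: bool (batch, T, S), True = may attend.
    """
    d = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d)     # (batch, T, S)
    scores = np.where(mask, scores, -1e9)                 # masked keys get a huge negative score
    scores = scores - scores.max(axis=-1, keepdims=True)  # stabilise the softmax
    weights = np.exp(scores)                              # masked entries underflow to 0
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
batch, max_len, d = 2, 4, 8
lengths = np.array([4, 2])                                # second sequence has 2 pad slots
x = rng.normal(size=(batch, max_len, d))
key_ok = np.arange(max_len)[None, :] < lengths[:, None]   # (batch, S) padding mask
mask = np.repeat(key_ok[:, None, :], max_len, axis=1)     # (batch, T, S)

out, w = masked_attention(x, x, x, mask)
print(w[1].round(3))  # columns 2 and 3 (the padding) are all zero
```

Because padded keys get zero weight, changing the values stored in the padding slots does not change the outputs at the real positions. This is the property a padding mask is meant to guarantee.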

Hence, the Transformer's attention mechanism ensures correct processing of varying sequence lengths using padding and causal masks.

