Integrating symbolic reasoning with generative language models poses the following challenges:

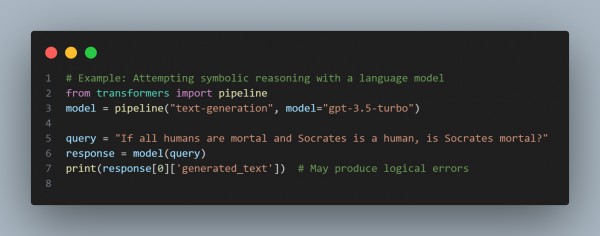

- Symbolic Knowledge Representation: Generative models struggle to represent structured symbolic rules or knowledge (e.g., logic, ontologies). You can refer to the code snippet in the image above for an illustration.

- Consistency in Reasoning: Models often produce inconsistent outputs when reasoning over multiple steps.

- Data Alignment: Training requires aligned datasets combining natural language and symbolic logic.

- Efficiency and Scalability: Symbolic reasoning frameworks (like Prolog) are computationally expensive and hard to integrate with large language models.

- Explainability: Generative models act as black boxes, making it difficult to trace symbolic reasoning paths.
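To make the contrast concrete, here is a minimal sketch of what an explicit symbolic representation looks like: a tiny forward-chaining engine over propositional rules that records every inference it makes. The rule format, the `forward_chain` function, and the example facts are all hypothetical and chosen only for illustration; a real system would more likely use an existing engine such as Prolog.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (premises, conclusion): if every premise is known, conclude.
# This representation is an illustrative assumption, not a standard API.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Tuple[Set[str], List[str]]:
    """Derive all facts reachable from `facts` and return them with a trace."""
    known = set(facts)
    trace: List[str] = []
    changed = True
    while changed:  # repeat until a full pass adds nothing new
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                # Each step is recorded, so the reasoning path is inspectable.
                trace.append(f"{' & '.join(sorted(premises))} => {conclusion}")
                changed = True
    return known, trace


RULES: List[Rule] = [
    (frozenset({"bird(tweety)"}), "has_wings(tweety)"),
    (frozenset({"has_wings(tweety)", "healthy(tweety)"}), "can_fly(tweety)"),
]
FACTS = {"bird(tweety)", "healthy(tweety)"}

derived, trace = forward_chain(FACTS, RULES)
for step in trace:
    print(step)
```

Unlike a generative model, the engine gives the same result on every run and exposes the exact chain of rules behind each conclusion. The cost is that every rule has to be written down in a rigid format, which is where the representation and data alignment challenges come from.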

In short, the main obstacles are representational alignment, reasoning consistency, and efficiency. Hybrid or neuro-symbolic architectures can help bridge the gap.
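One possible shape for such a hybrid is a generate-and-verify loop: the generative model proposes candidate claims and a symbolic component accepts only those it can confirm. The sketch below assumes this pattern; `propose_answers` is a hypothetical stand-in for a real language model call and returns canned output, and the knowledge base is a plain set of facts rather than a full reasoner.

```python
from typing import Callable, List, Optional, Set


def propose_answers(question: str) -> List[str]:
    """Hypothetical stand-in for a language model; returns canned candidates."""
    return ["can_fly(penguin)", "can_swim(penguin)"]


def verify(claim: str, knowledge_base: Set[str]) -> bool:
    """Symbolic check: accept a claim only if the knowledge base contains it."""
    return claim in knowledge_base


def answer(
    question: str,
    generator: Callable[[str], List[str]],
    knowledge_base: Set[str],
) -> Optional[str]:
    """Return the first generated claim that passes the symbolic check."""
    for claim in generator(question):
        if verify(claim, knowledge_base):
            return claim
    return None  # nothing verifiable was proposed


KB = {"bird(penguin)", "can_swim(penguin)"}
result = answer("What can a penguin do?", propose_answers, KB)
print(result)
```

Here the plausible but unsupported claim `can_fly(penguin)` is rejected and the verified one is returned. The design keeps the generator flexible while the verifier enforces consistency, at the cost of an extra check per candidate.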

Hence, these are the challenges of integrating symbolic reasoning with generative language models.
