To generate aspect-aware embeddings for Aspect-Based Sentiment Analysis (ABSA) with a pre-trained RoBERTa model, add an attention mechanism that dynamically weights each contextual word representation by its relevance to the target aspect.
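One standard way to formalize this weighting is scaled dot-product attention, with the aspect as the query and the RoBERTa token states as keys and values. The notation is assumed for illustration rather than taken from the original code: $h_1, \dots, h_n$ are the RoBERTa hidden states of the sentence, $a$ is a vector summarizing the aspect term (for example, the mean of its token states), $W_q, W_k, W_v$ are learned projection matrices, and $d$ is the projection dimension.

$$
\begin{aligned}
q &= W_q\, a, \qquad k_i = W_k\, h_i, \qquad v_i = W_v\, h_i \\
\alpha_i &= \frac{\exp\!\left(q^\top k_i / \sqrt{d}\right)}{\sum_{j=1}^{n} \exp\!\left(q^\top k_j / \sqrt{d}\right)} \\
r &= \sum_{i=1}^{n} \alpha_i\, v_i
\end{aligned}
$$

Tokens whose keys align with the aspect query receive larger weights $\alpha_i$, so the pooled vector $r$ would serve as the aspect-aware sentence embedding passed to the sentiment classifier.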

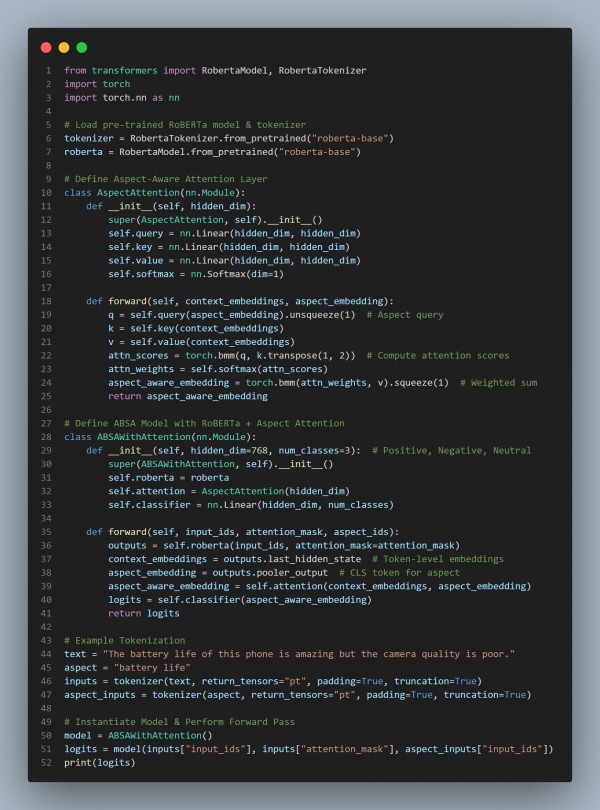

Here is the code snippet you can refer to:

The code above covers the following key points:

- Uses a pre-trained RoBERTa model to produce contextual token embeddings.

- Implements aspect-aware attention to focus on the parts of the text that are relevant to the aspect.

- Dynamically weights context words using a query-key-value attention mechanism.

- Generates aspect-specific representations before classification.
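The steps listed above can be sketched in NumPy as follows. This is a minimal illustration, not the original snippet: it assumes the RoBERTa hidden states are already available as a `(seq_len, hidden_dim)` array (random values stand in for them here), that the aspect's token positions are known, and that the aspect query is the mean of those tokens' states. The helper name `aspect_attention`, the matrices `W_q`, `W_k`, `W_v`, and the mean-pooling choice are all hypothetical; in a trained model the projections would be learned parameters.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = x - x.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def aspect_attention(hidden, aspect_positions, W_q, W_k, W_v):
    """Aspect-aware attention over contextual token states (hypothetical helper).

    hidden:           (seq_len, hidden_dim) token states, e.g. RoBERTa's last layer
    aspect_positions: indices of the tokens that make up the aspect term
    W_q, W_k, W_v:    (hidden_dim, d) projection matrices (learned in practice)
    Returns the aspect-aware vector r of shape (d,) and weights alpha of shape (seq_len,).
    """
    # Summarize the aspect by mean-pooling its token states (an assumption).
    aspect_vec = hidden[aspect_positions].mean(axis=0)  # (hidden_dim,)

    q = aspect_vec @ W_q   # query from the aspect, shape (d,)
    K = hidden @ W_k       # keys from every context token, (seq_len, d)
    V = hidden @ W_v       # values from every context token, (seq_len, d)

    # Scaled dot-product scores: how relevant each token is to the aspect.
    scores = K @ q / np.sqrt(q.shape[-1])  # (seq_len,)
    alpha = softmax(scores)                # non-negative, sums to 1

    # Weighted sum of value vectors = aspect-specific sentence representation.
    r = alpha @ V                          # (d,)
    return r, alpha


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    seq_len, hidden_dim, d = 8, 16, 4
    hidden = rng.normal(size=(seq_len, hidden_dim))  # stand-in for RoBERTa output
    W_q, W_k, W_v = (rng.normal(size=(hidden_dim, d)) for _ in range(3))

    # Suppose tokens 2 and 3 form the aspect term (e.g. "battery life").
    r, alpha = aspect_attention(hidden, [2, 3], W_q, W_k, W_v)
    print(r.shape, alpha.round(3))
```

Calling the helper with a different `aspect_positions` list changes the query and therefore the attention weights, which is what makes the resulting vector aspect-specific. In a full model, `r` would typically be fed into a small classification head (for example, a linear layer over positive, negative, and neutral labels).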

Hence, integrating an attention mechanism with a RoBERTa model produces aspect-aware embeddings that focus on the context words relevant to the given aspect, which can improve ABSA performance.
