The attention mechanism does not just look back at memory; it dynamically weights past information, allowing the model to focus selectively on the most relevant parts of the input rather than treating all past data equally.
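One common way to write this down assumes dot-product scoring of each hidden state $h_t$ against a query vector $q$. The symbols here are illustrative and not taken from the original code:

$$
e_t = q^\top h_t, \qquad \alpha_t = \frac{\exp(e_t)}{\sum_{k=1}^{T} \exp(e_k)}, \qquad c = \sum_{t=1}^{T} \alpha_t h_t
$$

Here $\alpha_t$ is the attention weight of time step $t$ and $c$ is the resulting context vector. Treating all past data equally would correspond to $\alpha_t = 1/T$ for every $t$. Attention instead lets each weight depend on how well $h_t$ matches $q$.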

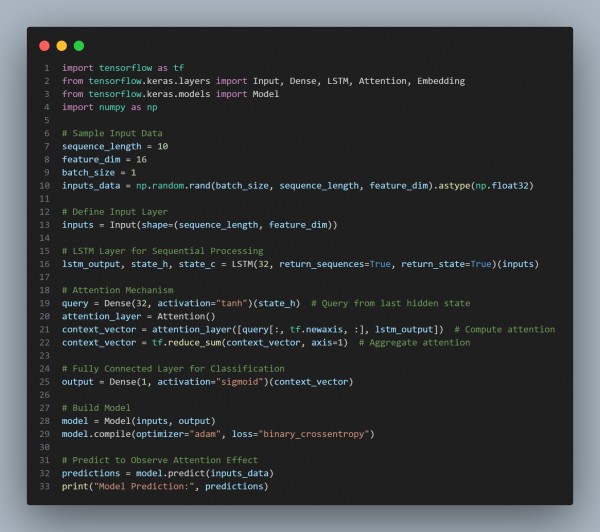

Here is the code snippet you can refer to:

The code above illustrates the following key points:

- Uses an LSTM to process sequential data and generate hidden states.

- Applies an attention mechanism to dynamically focus on key time steps.

- Computes query-based attention scores instead of weighting all memory uniformly.

- Aggregates the most important features into a weighted context vector.

- Outputs a final classification decision based on the attention-weighted features.
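To make these steps concrete, here is a minimal, framework-free Python sketch of the attention part of such a pipeline. It assumes dot-product scoring against a single query vector and replaces the LSTM with hypothetical hidden-state values. The function names (`attend`, `classify`) and all numbers are illustrative, not taken from the code described above.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(hidden_states: List[Vector], query: Vector) -> Tuple[List[float], Vector]:
    # 1. Query-based scores: how well each time step's hidden state matches the query.
    scores = [dot(h, query) for h in hidden_states]
    # 2. Normalize the scores into attention weights that sum to 1.
    weights = softmax(scores)
    # 3. Context vector: attention-weighted sum of the hidden states.
    dim = len(hidden_states[0])
    context = [sum(w * h[i] for w, h in zip(weights, hidden_states)) for i in range(dim)]
    return weights, context


def classify(context: Vector, class_weights: List[Vector], biases: List[float]) -> int:
    # Hypothetical linear output layer: one logit per class, then argmax.
    logits = [dot(w, context) + b for w, b in zip(class_weights, biases)]
    return max(range(len(logits)), key=lambda k: logits[k])


if __name__ == "__main__":
    # Hypothetical hidden states for 4 time steps (2 dims each), standing in for LSTM outputs.
    hidden = [[0.1, 0.0], [0.2, 0.1], [2.0, 1.5], [0.0, 0.3]]
    query = [1.0, 1.0]  # in a real model this would be learned

    weights, context = attend(hidden, query)
    # The third time step (index 2) gets roughly 0.9 of the weight.
    print("attention weights:", [round(w, 3) for w in weights])
    print("attention context:", [round(x, 3) for x in context])

    # For comparison: uniform look-back is just the plain average of all hidden states.
    uniform = [sum(h[i] for h in hidden) / len(hidden) for i in range(2)]
    print("uniform average:  ", [round(x, 3) for x in uniform])

    print("predicted class:", classify(context, [[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]))
```

With these values the context vector is dominated by the third time step's hidden state rather than being the plain average of all four. In a trained model, the query and output-layer weights would be learned parameters (for example, layers in Keras or PyTorch) rather than fixed lists.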

Hence, attention is more than just revisiting memory; it selectively emphasizes relevant information, improving model interpretability and decision-making.
