The best way to split a dataset while preserving the target distribution in Scikit-learn is by using Stratified Sampling (StratifiedShuffleSplit or train_test_split with stratify), ensuring that the class proportions remain balanced in both training and testing sets.

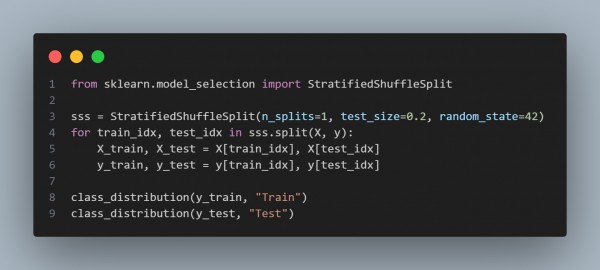

Here is the code snippet given below:

In the above code we are using the following techniques:

-

Uses train_test_split with stratify=y

- Ensures that the distribution of target classes in the original dataset is preserved in both training and testing sets.

-

Prevents Data Imbalance Issues:

- Especially useful for imbalanced datasets where one class has significantly fewer instances than others.

-

Applicable to Multi-Class and Binary Classification:

- Works well for datasets with multiple classes (e.g., Iris dataset) and binary labels.

-

Random Seed for Reproducibility (random_state=42)

- Ensures that the same split can be reproduced across different runs.

Hence, using stratified sampling (train_test_split with stratify=y or StratifiedShuffleSplit) ensures that the training and testing sets maintain the same class distribution as the original dataset, preventing bias in model evaluation.

Generative AI uses machine learning to create new content, enhancing automation and innovation. A Gen AI certification teaches essential skills to develop AI-powered solutions for industries like marketing, design, and software development.

REGISTER FOR FREE WEBINAR

X

REGISTER FOR FREE WEBINAR

X

Thank you for registering

Join Edureka Meetup community for 100+ Free Webinars each month

JOIN MEETUP GROUP

Thank you for registering

Join Edureka Meetup community for 100+ Free Webinars each month

JOIN MEETUP GROUP