One effective way to reduce bias in AI-generated text is Counterfactual Data Augmentation (CDA): for each training example, you add a copy in which demographic or stereotype-linked terms are swapped, so the model sees both variants with the same label.

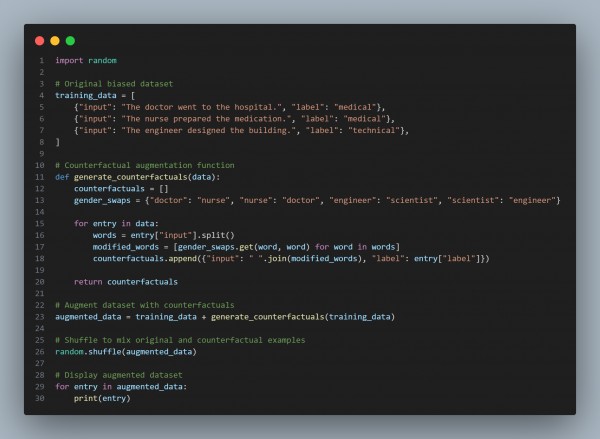

Here is the code snippet you can refer to:

The code relies on the following key points:

- Bias Mitigation – Uses Counterfactual Data Augmentation (CDA) to create diverse perspectives.

- Gender-Swap Technique – Swaps gendered and stereotype-linked terms (e.g., "he" ↔ "she", "doctor" ↔ "nurse") to balance representation.

- Preserves Labels – Maintains consistency by ensuring counterfactual examples remain in the same category.

- Data Expansion – Adds the counterfactual examples to the original training data, which can help the model generalize better and behave more fairly.

- Randomization – Shuffles data to prevent unintended biases from order effects during training.
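
The key points above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: the swap table `SWAP_PAIRS`, the helper names `swap_terms` and `augment`, and the sample sentences are all hypothetical, and a real project would use a much larger, curated term list.

```python
import random
import re

# Hypothetical swap table (assumption): a real list would be larger and curated.
# Ambiguous pairs such as "her" -> "his"/"him" are left out to keep the sketch simple.
SWAP_PAIRS = [("he", "she"), ("man", "woman"), ("doctor", "nurse")]

# Build a two-way lookup so each term maps to its counterpart.
SWAP_MAP = {}
for a, b in SWAP_PAIRS:
    SWAP_MAP[a] = b
    SWAP_MAP[b] = a

# Match whole words only, so "he" is not replaced inside "The".
PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in SWAP_MAP) + r")\b",
    re.IGNORECASE,
)


def swap_terms(text):
    """Return text with every listed term replaced by its counterpart."""
    def repl(match):
        word = match.group(0)
        swapped = SWAP_MAP[word.lower()]
        # Keep a leading capital if the original word had one.
        return swapped.capitalize() if word[0].isupper() else swapped
    return PATTERN.sub(repl, text)


def augment(dataset, seed=0):
    """Add a counterfactual copy of each example, keep its label, then shuffle."""
    augmented = list(dataset)
    for text, label in dataset:
        counterfactual = swap_terms(text)
        if counterfactual != text:  # skip examples with nothing to swap
            augmented.append((counterfactual, label))
    random.Random(seed).shuffle(augmented)  # seeded shuffle for reproducibility
    return augmented


# Made-up sample data for illustration only.
data = [
    ("He is a doctor.", "positive"),
    ("The nurse said she was late.", "negative"),
    ("The weather is nice.", "positive"),
]
augmented = augment(data)
for text, label in augmented:
    print(label, "|", text)
```

Examples that contain no listed term are left without a counterfactual copy, so the augmented set is not simply twice the size of the original.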

Hence, Counterfactual Data Augmentation (CDA) can improve fairness in AI models by diversifying training data, reducing stereotype biases, and giving more balanced representation in text generation.
