Fine-tuning the Code-Bison model involves preparing a task-specific dataset, configuring Vertex AI, and running the fine-tuning job. You can follow these steps:

- Prepare Dataset: Store your examples in a supported format (e.g., JSONL, with one input-output example per line for code generation tasks).

- Set Up Vertex AI: Use the Google Cloud SDK to configure Vertex AI and specify the training parameters.

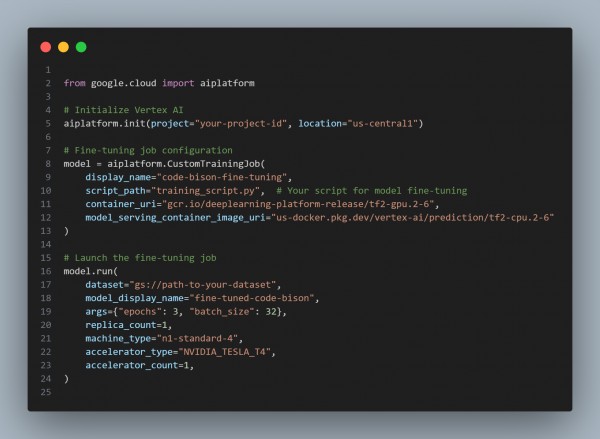

- Fine-Tuning Job: Use Vertex AI’s fine-tuning API.
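The dataset step can be sketched as follows. This is a minimal sketch, assuming the `input_text`/`output_text` field names that Vertex AI documented for supervised tuning of its PaLM-family text and code models; verify them against the current documentation before uploading. The helper `write_tuning_jsonl`, the example pairs, and the file name are hypothetical.

```python
import json
from pathlib import Path


def write_tuning_jsonl(examples, path):
    """Write (prompt, completion) pairs as JSONL, one JSON object per line.

    Assumption: Vertex AI expects the keys input_text and output_text for
    code-bison tuning data; check the current docs before relying on this.
    """
    path = Path(path)
    with path.open("w", encoding="utf-8") as f:
        for prompt, completion in examples:
            record = {"input_text": prompt, "output_text": completion}
            # json.dumps escapes embedded newlines, so each record stays on one line.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return path


# Hypothetical code-generation examples.
examples = [
    ("Write a Python function that returns the square of x.",
     "def square(x):\n    return x * x"),
    ("Write a Python function that reverses a string s.",
     "def reverse(s):\n    return s[::-1]"),
]

out = write_tuning_jsonl(examples, "code_tuning_data.jsonl")
print(out.read_text(encoding="utf-8").count("\n"))  # 2 (one line per example)
```

The resulting file would then be copied to a Cloud Storage bucket, since the tuning job reads its training data from a `gs://` URI.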

Here is the code snippet you can refer to:
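Here is one possible shape for such a snippet. It is a minimal sketch, assuming the Vertex AI SDK for Python (`google-cloud-aiplatform`) and its managed `tune_model` route for `CodeGenerationModel`, not a custom TensorFlow/PyTorch training script. The helpers `build_tuning_config` and `tune_code_bison`, the bucket `gs://my-bucket/...`, and the project ID `my-project` are hypothetical. The SDK keyword names and the `code-bison@002` version are assumptions to check against the current Vertex AI documentation, because PaLM-based code models may no longer be offered for tuning in your project.

```python
def build_tuning_config(training_data_uri, train_steps=100,
                        tuning_job_location="europe-west4",
                        tuned_model_location="us-central1"):
    """Collect tuning parameters in one dict so they can be reviewed before a run.

    Hypothetical helper; the default regions are assumptions based on Google's
    published code-bison tuning sample and may have changed.
    """
    if not training_data_uri.startswith("gs://"):
        raise ValueError("training data must be a Cloud Storage URI (gs://...)")
    if train_steps <= 0:
        raise ValueError("train_steps must be positive")
    return {
        "training_data": training_data_uri,
        "train_steps": train_steps,
        "tuning_job_location": tuning_job_location,
        "tuned_model_location": tuned_model_location,
    }


def tune_code_bison(project_id, config, base_model="code-bison@002"):
    """Launch a managed tuning job (hypothetical wrapper; needs GCP credentials)."""
    # Imported lazily so build_tuning_config works without the SDK installed.
    import vertexai
    from vertexai.language_models import CodeGenerationModel

    vertexai.init(project=project_id, location=config["tuned_model_location"])
    model = CodeGenerationModel.from_pretrained(base_model)
    # Assumption: tune_model accepts these keyword arguments and returns a
    # tuning job whose get_tuned_model() waits for completion.
    tuning_job = model.tune_model(**config)
    return tuning_job.get_tuned_model()


config = build_tuning_config("gs://my-bucket/code_tuning_data.jsonl", train_steps=200)
# tuned = tune_code_bison("my-project", config)  # starts a billable job
# print(tuned.predict(prefix="Write a Python function that adds two numbers.").text)
```

Keeping the parameters in a plain dictionary makes them easy to validate before a billable tuning run, and the lazy SDK import lets the configuration logic be tested on a machine without Google Cloud credentials.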

The code above is built around the following key points:

- Dataset Preparation: Ensure a high-quality dataset with clear input-output mappings for fine-tuning.

- Custom Training Script: Use TensorFlow/PyTorch to define how the model is trained on your dataset.

- Vertex AI Configuration: Optimize hardware and job parameters for your specific task.

- Model Deployment: Deploy the fine-tuned model on Vertex AI endpoints for serving.
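The dataset-quality point can be made concrete with a small pre-upload check that catches malformed lines, empty fields, and exact duplicates before a tuning run is started. This is a hypothetical helper, `check_tuning_jsonl`, assuming the same `input_text`/`output_text` field names; it only checks structure and cannot tell whether the example outputs are correct code.

```python
import json
import os
import tempfile


def check_tuning_jsonl(path):
    """Return human-readable problems found in a JSONL tuning file (hypothetical helper)."""
    problems = []
    seen = set()  # (input_text, output_text) pairs already encountered
    with open(path, encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                problems.append(f"line {lineno}: blank line")
                continue
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
                continue
            if not isinstance(record, dict):
                problems.append(f"line {lineno}: expected a JSON object")
                continue
            valid = True
            # Assumed field names for code-bison tuning data.
            for field in ("input_text", "output_text"):
                value = record.get(field)
                if not isinstance(value, str) or not value.strip():
                    problems.append(f"line {lineno}: missing or empty {field}")
                    valid = False
            # Only well-formed examples are compared for exact duplicates.
            if valid:
                key = (record["input_text"], record["output_text"])
                if key in seen:
                    problems.append(f"line {lineno}: duplicate example")
                seen.add(key)
    return problems


# Demo file: a good example, a duplicate, an empty prompt, and a broken line.
sample = [
    '{"input_text": "Add integers a and b.", "output_text": "def add(a, b):\\n    return a + b"}',
    '{"input_text": "Add integers a and b.", "output_text": "def add(a, b):\\n    return a + b"}',
    '{"input_text": "", "output_text": "pass"}',
    "not json",
]
with tempfile.NamedTemporaryFile("w", suffix=".jsonl", delete=False, encoding="utf-8") as tmp:
    tmp.write("\n".join(sample) + "\n")
issues = check_tuning_jsonl(tmp.name)
os.remove(tmp.name)
for issue in issues:
    print(issue)
```

For the demo file this reports the duplicate on line 2, the empty `input_text` on line 3, and the invalid JSON on line 4.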

By following the steps above, you can fine-tune the Code-Bison model on Google Vertex AI for specific tasks such as code generation.
