Capture idiomatic expressions effectively by augmenting training data with diverse idiomatic samples, using contrastive learning, and incorporating phrase-aware embeddings.

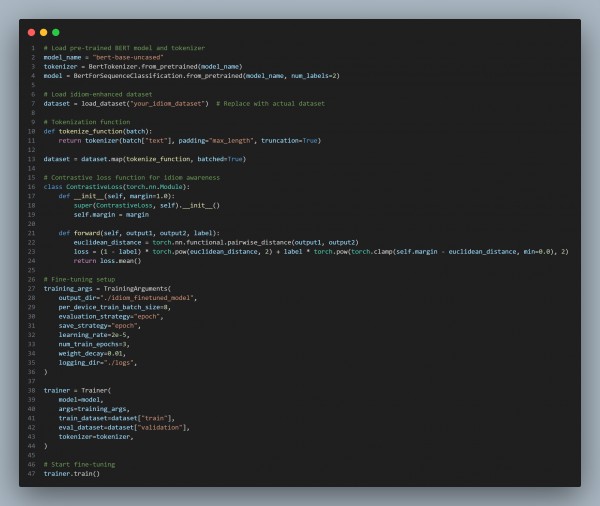

Here is the code snippet you can refer to:

The code above uses the following key approaches:

- Idiom-Enriched Data Augmentation:
  - Expands the training data with diverse idiomatic expressions and their contextual meanings.
- Contrastive Learning:
  - Uses a contrastive loss function to differentiate idiomatic from literal meanings.
- Phrase-Aware Embeddings:
  - Enhances token representations so that multi-word idioms are recognized as single units.
- Contextualized Pretraining:
  - Fine-tunes BERT or GPT models on idiom-heavy corpora to improve their comprehension of idioms.
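Since the original snippet is only available as an image, here is a minimal, framework-free sketch of the contrastive idea. It assumes the classic margin-based pairwise contrastive loss, which is only one of several contrastive objectives; the snippet may use a different one (for example InfoNCE). The embeddings are hypothetical 2-D toy vectors. In practice they would come from the model's encoder, and the loss would be computed with autograd in a framework such as PyTorch.

```python
import math
from typing import Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(
    anchor: Sequence[float],
    other: Sequence[float],
    similar: bool,
    margin: float = 1.0,
) -> float:
    """Margin-based contrastive loss for a single pair.

    similar=True  -> pull the pair together: loss = d^2
    similar=False -> push the pair apart until d >= margin: loss = max(0, margin - d)^2
    """
    d = euclidean(anchor, other)
    if similar:
        return d ** 2
    return max(0.0, margin - d) ** 2


def batch_loss(
    pairs: Sequence[Tuple[Sequence[float], Sequence[float], bool]],
    margin: float = 1.0,
) -> float:
    """Mean contrastive loss over (anchor, other, similar) triples."""
    if not pairs:
        raise ValueError("need at least one pair")
    return sum(contrastive_loss(a, o, s, margin) for a, o, s in pairs) / len(pairs)


# Hypothetical toy "sentence embeddings" (not produced by a real encoder).
idiomatic = [0.9, 0.1]    # "He finally kicked the bucket."
paraphrase = [0.8, 0.2]   # "He finally died."
literal = [0.85, 0.15]    # "He kicked the bucket across the yard."

pairs = [
    (idiomatic, paraphrase, True),  # same meaning: should end up close
    (idiomatic, literal, False),    # same words, different meaning: should end up far
]
print(round(batch_loss(pairs), 4))
```

In this toy setup the literal sentence sits very close to the idiomatic one even though it means something different, so the negative pair dominates the loss. Minimizing it pushes the idiomatic and literal readings apart while keeping the idiom near its paraphrase.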

Hence, by combining contrastive learning, idiom-specific data augmentation, and phrase-aware embeddings, the language model becomes better at capturing idiomatic expressions, which improves its contextual understanding during fine-tuning.
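One simple way to make inputs phrase-aware is to merge known multi-word idioms into a single token before embedding, so the model can learn one representation for the whole phrase. The sketch below assumes a small hypothetical idiom lexicon (`IDIOMS`) and a hypothetical helper `merge_idioms`; it is an illustration, not the method used in the snippet.

```python
from typing import List, Tuple

# Hypothetical idiom lexicon: each idiom is stored as a tuple of lowercase words.
IDIOMS: List[Tuple[str, ...]] = [
    ("kick", "the", "bucket"),
    ("spill", "the", "beans"),
    ("break", "the", "ice"),
]


def merge_idioms(
    tokens: List[str], idioms: List[Tuple[str, ...]], sep: str = "_"
) -> List[str]:
    """Greedily replace known multi-word idioms with a single phrase token.

    Longer idioms are tried first so the longest match wins.
    Matching is case-insensitive; the merged token is emitted in lowercase.
    """
    ordered = sorted(idioms, key=len, reverse=True)
    lowered = [t.lower() for t in tokens]
    out: List[str] = []
    i = 0
    while i < len(tokens):
        for idiom in ordered:
            n = len(idiom)
            if tuple(lowered[i:i + n]) == idiom:
                out.append(sep.join(idiom))  # whole idiom becomes one token
                i += n
                break
        else:
            out.append(tokens[i])  # no idiom starts here: keep the original token
            i += 1
    return out


sentence = "She tried to break the ice at the party"
print(merge_idioms(sentence.split(), IDIOMS))
```

Exact matching misses inflected forms such as "kicked the bucket", so a real pipeline would typically lemmatize first or list the variants. With Hugging Face Transformers, one possible follow-up is to register the merged tokens with `tokenizer.add_tokens(...)` and call `model.resize_token_embeddings(len(tokenizer))` so each idiom gets its own trainable embedding row.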
