To optimize model parallelism and reduce GPU memory usage, combine pipeline parallelism, tensor parallelism, gradient checkpointing, mixed precision training, and DeepSpeed's ZeRO optimization.

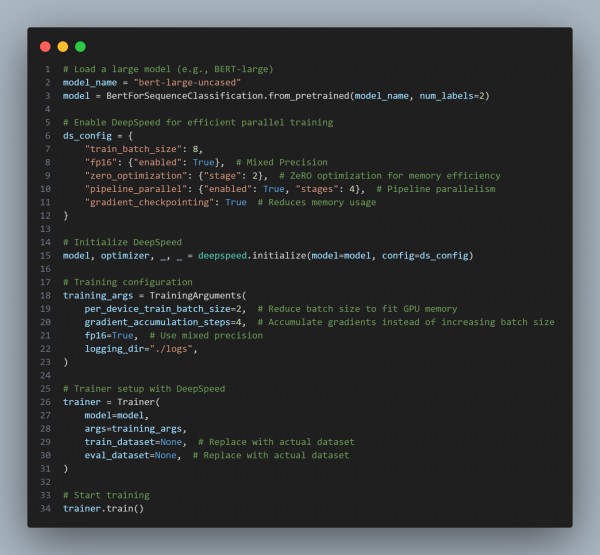

Here is the code snippet you can refer to:

In the above code, we use the following key approaches:

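- Pipeline Parallelism:
  - Splits the model's layers into sequential stages placed on different GPUs, so each GPU stores only its own stage's weights and activations.

- Tensor Parallelism:
  - Partitions individual weight tensors (for example, the weight matrix of a linear layer) across GPUs, so each GPU stores and computes only a slice, reducing per-GPU memory requirements.

- Gradient Checkpointing:
  - Keeps only selected intermediate activations (checkpoints) during the forward pass and recomputes the others during the backward pass, trading extra computation for lower memory use.

- Mixed Precision Training (FP16):
  - Uses 16-bit (half-precision) floating-point numbers, which take 2 bytes instead of the 4 bytes of FP32, significantly lowering the memory needed for activations and gradients.

- ZeRO Optimization (DeepSpeed):
  - Partitions optimizer states, gradients, and (at stage 3) model parameters across data-parallel GPUs instead of keeping a redundant copy on every GPU.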
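To make pipeline parallelism concrete, here is a minimal, framework-free sketch. It assumes each "GPU" is simply a group of consecutive layers and that layers are plain Python functions; `split_into_stages`, `run_stage`, and `pipeline_forward` are hypothetical helpers, not PyTorch or DeepSpeed APIs. It models a GPipe-style fill-drain forward schedule in which stage `s` processes micro-batch `t - s` at clock tick `t`.

```python
from typing import Callable, List, Tuple

# Hypothetical toy layer type: a scalar function standing in for a network layer.
Layer = Callable[[float], float]


def split_into_stages(layers: List[Layer], num_stages: int) -> List[List[Layer]]:
    """Assign consecutive layers to num_stages stages (one stage per GPU)."""
    per_stage, extra = divmod(len(layers), num_stages)
    stages, start = [], 0
    for s in range(num_stages):
        end = start + per_stage + (1 if s < extra else 0)
        stages.append(layers[start:end])
        start = end
    return stages


def run_stage(stage: List[Layer], x: float) -> float:
    """Apply every layer of one stage, in order."""
    for layer in stage:
        x = layer(x)
    return x


def pipeline_forward(
    stages: List[List[Layer]], micro_batches: List[float]
) -> Tuple[List[float], List[List[Tuple[int, int]]]]:
    """Fill-drain forward schedule: at tick t, stage s works on micro-batch t - s.

    Returns the outputs plus, for every tick, the (stage, micro_batch) pairs
    that a real pipeline would execute concurrently on different GPUs.
    """
    num_stages, num_mb = len(stages), len(micro_batches)
    acts = list(micro_batches)  # acts[m] = current activation of micro-batch m
    schedule = []
    for t in range(num_stages + num_mb - 1):
        tick = []
        for s in range(num_stages):
            m = t - s
            if 0 <= m < num_mb:
                # In a real pipeline, this result would be sent to GPU s + 1.
                acts[m] = run_stage(stages[s], acts[m])
                tick.append((s, m))
        schedule.append(tick)
    return acts, schedule
```

With 4 layers split into 2 stages and 4 micro-batches, `pipeline_forward` returns the same outputs as running the layers sequentially, and the schedule spans 2 + 4 - 1 = 5 ticks. In general, S stages and M micro-batches need S + M - 1 ticks, so using more micro-batches shrinks the idle "bubble" at the start and end of each step.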
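Tensor parallelism can be sketched for a single linear layer `y = x @ W`. This is a minimal pure-Python illustration, assuming "devices" are list entries and that concatenation and summation stand in for the all-gather and all-reduce collectives a real implementation (for example, Megatron-LM-style tensor parallelism) would issue; all function names are hypothetical. Splitting `W` by columns gives each device a slice of the output, while splitting it by rows gives each device a partial sum of the full output.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive dense matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def split_columns(w: Matrix, n: int) -> List[Matrix]:
    """Column-parallel sharding: device d gets a contiguous block of output columns."""
    cols = len(w[0])
    assert cols % n == 0, "output dimension must divide evenly across devices"
    size = cols // n
    return [[row[d * size:(d + 1) * size] for row in w] for d in range(n)]


def column_parallel_linear(x: Matrix, w: Matrix, n: int) -> Matrix:
    partials = [matmul(x, shard) for shard in split_columns(w, n)]  # one per device
    # Stand-in for all-gather: stitch each output row back together from its blocks.
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


def row_parallel_linear(x: Matrix, w: Matrix, n: int) -> Matrix:
    """Row-parallel sharding: each device sees a slice of the input features."""
    rows = len(w)
    assert rows % n == 0, "input dimension must divide evenly across devices"
    size = rows // n
    partials = []
    for d in range(n):
        x_shard = [r[d * size:(d + 1) * size] for r in x]
        w_shard = w[d * size:(d + 1) * size]
        partials.append(matmul(x_shard, w_shard))
    # Stand-in for all-reduce: element-wise sum of the partial outputs.
    return [[sum(p[i][j] for p in partials) for j in range(len(w[0]))]
            for i in range(len(x))]
```

Both sharded versions reproduce `matmul(x, w)` while each device holds only `1/n` of `W`, which is where the per-GPU memory saving comes from.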
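Gradient checkpointing trades compute for memory: only some activations are kept during the forward pass, and the missing ones are recomputed during backward. The sketch below applies this to a chain of scalar layers, assuming each layer is given as a (function, derivative) pair; the helper names and the `TANH` layer are hypothetical, and real frameworks such as PyTorch's `torch.utils.checkpoint` apply the same idea to tensors.

```python
import math
from typing import Callable, List, Tuple

# A toy layer: (forward function, derivative of that function w.r.t. its input).
Layer = Tuple[Callable[[float], float], Callable[[float], float]]


def grad_full_storage(layers: List[Layer], x: float) -> Tuple[float, float, int]:
    """Plain backprop: every layer input stays in memory until the backward pass."""
    acts = [x]
    for f, _ in layers:
        acts.append(f(acts[-1]))
    grad = 1.0
    for (_, df), a in zip(reversed(layers), reversed(acts[:-1])):
        grad *= df(a)  # chain rule, walking from the output back to the input
    return acts[-1], grad, len(acts) - 1  # output, d(output)/dx, stored inputs


def grad_checkpointed(
    layers: List[Layer], x: float, segment: int
) -> Tuple[float, float, int, int]:
    """Keep only each segment's first input; recompute the rest during backward."""
    checkpoints = {}
    a = x
    for i, (f, _) in enumerate(layers):
        if i % segment == 0:
            checkpoints[i] = a  # the only activations kept after the forward pass
        a = f(a)
    output, grad, recomputed = a, 1.0, 0
    for start in sorted(checkpoints, reverse=True):
        end = min(start + segment, len(layers))
        seg_acts = [checkpoints[start]]
        for f, _ in layers[start:end - 1]:  # extra forward work for this segment
            seg_acts.append(f(seg_acts[-1]))
            recomputed += 1
        for (_, df), a_in in zip(reversed(layers[start:end]), reversed(seg_acts)):
            grad *= df(a_in)
    return output, grad, len(checkpoints), recomputed


# Example layer: tanh, whose derivative is 1 - tanh(x)^2.
TANH: Layer = (math.tanh, lambda v: 1.0 - math.tanh(v) ** 2)
```

For eight `TANH` layers with `segment=4`, both functions return identical outputs and gradients, but the checkpointed version keeps 2 activations instead of 8 after the forward pass (plus at most one segment's worth while that segment is processed in backward), at the cost of recomputing 6 layer evaluations.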
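The effect of FP16 storage can be checked with Python's standard library, because the `struct` module's `"e"` format is IEEE 754 half precision. This sketch shows the 2-byte versus 4-byte storage cost, why tiny gradients underflow to zero in FP16, how loss scaling preserves them, and why weight updates are usually applied to a higher-precision master copy. It assumes plain Python floats stand in for FP32 master weights (they are actually 64-bit), and the helper names are hypothetical; in PyTorch, autocast and `GradScaler` handle these steps.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def bytes_per_param(fmt: str) -> int:
    """Storage size of one value: "e" is FP16, "f" is FP32."""
    return struct.calcsize("<" + fmt)


def scaled_fp16_grad(grad: float, loss_scale: float) -> float:
    """Loss scaling: scale up before the FP16 cast, unscale in higher precision."""
    return to_fp16(grad * loss_scale) / loss_scale


def sgd_step(weight: float, grad: float, lr: float, fp32_master: bool) -> float:
    """One SGD update, on a high-precision master copy or directly on an FP16 weight."""
    if fp32_master:
        return weight - lr * grad  # master copy keeps the small update
    return to_fp16(to_fp16(weight) - lr * grad)  # result rounded back to FP16
```

For example, `to_fp16(1e-8)` returns `0.0`, while `scaled_fp16_grad(1e-8, 1024.0)` returns a value close to `1e-8`; an update of `1e-4` to a weight of `1.0` is lost when the weight is stored in FP16 but kept with the master copy.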
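The memory saving from DeepSpeed's ZeRO can be estimated with simple arithmetic. This sketch assumes mixed-precision Adam with the byte accounting used in the ZeRO paper (2 bytes per parameter for FP16 weights, 2 for FP16 gradients, and K = 12 for the FP32 master weights, momentum, and variance) and ignores activations, temporary buffers, and fragmentation; `zero_model_state_bytes` is a hypothetical helper, not a DeepSpeed API.

```python
def zero_model_state_bytes(
    num_params: float, num_gpus: int, stage: int, k: int = 12
) -> float:
    """Bytes of model states per GPU for ZeRO stage 0 (plain data parallel) to 3."""
    if stage not in (0, 1, 2, 3):
        raise ValueError("stage must be 0, 1, 2, or 3")
    params = 2 * num_params  # FP16 weights
    grads = 2 * num_params   # FP16 gradients
    optim = k * num_params   # FP32 master weights + Adam momentum + variance
    if stage >= 1:
        optim /= num_gpus    # stage 1: shard optimizer states
    if stage >= 2:
        grads /= num_gpus    # stage 2: also shard gradients
    if stage >= 3:
        params /= num_gpus   # stage 3: also shard the FP16 weights
    return params + grads + optim


if __name__ == "__main__":
    for stage in range(4):
        gb = zero_model_state_bytes(7.5e9, 64, stage) / 1e9
        print(f"ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

For a 7.5-billion-parameter model on 64 GPUs, the script prints 120.0, 31.4, 16.6, and 1.9 GB per GPU for stages 0 to 3. Stage 0 corresponds to plain data parallelism, where every GPU holds a full replica of all model states.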

Hence, by combining pipeline parallelism, tensor parallelism, gradient checkpointing, mixed precision training, and ZeRO, you can train large-scale models that would otherwise exceed the memory of a single GPU.
