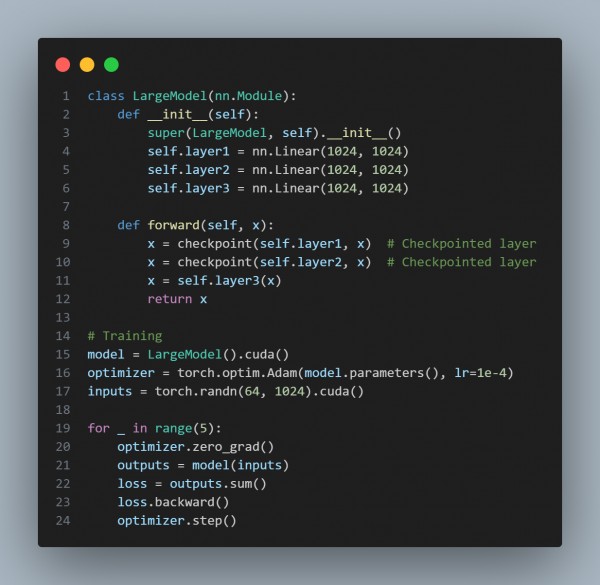

To implement gradient checkpointing and manage memory during large model training, refer to the code snippet below:

In this code, gradient checkpointing is implemented by applying "checkpoint" to "self.layer1" and "self.layer2" in the forward method. This means that during the forward pass, the intermediate activations of these layers are not stored, which saves memory; instead, they are recomputed during backpropagation to calculate the gradients.
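Since the snippet above is only available as an image, here is a minimal sketch of the same idea. It assumes PyTorch's `torch.utils.checkpoint.checkpoint`; the class name `CheckpointedModel`, the layer sizes, the extra `head` layer and the random batch are hypothetical and only serve to make the example runnable.

```python
import torch
import torch.nn as nn
from torch.utils.checkpoint import checkpoint


class CheckpointedModel(nn.Module):
    """Hypothetical two-block model; sizes are illustrative assumptions."""

    def __init__(self, in_features=128, hidden=256, out_features=10):
        super().__init__()
        self.layer1 = nn.Sequential(nn.Linear(in_features, hidden), nn.ReLU())
        self.layer2 = nn.Sequential(nn.Linear(hidden, hidden), nn.ReLU())
        self.head = nn.Linear(hidden, out_features)  # not checkpointed

    def forward(self, x):
        # Intermediate activations inside layer1 and layer2 are discarded
        # after the forward pass and recomputed during backward.
        x = checkpoint(self.layer1, x, use_reentrant=False)
        x = checkpoint(self.layer2, x, use_reentrant=False)
        return self.head(x)


torch.manual_seed(0)
model = CheckpointedModel()
inputs = torch.randn(32, 128)
targets = torch.randint(0, 10, (32,))

loss = nn.functional.cross_entropy(model(inputs), targets)
loss.backward()  # layer1 and layer2 are re-run here to compute gradients
```

The gradients are the same as without checkpointing; only the memory/compute trade-off changes, because the checkpointed blocks run their forward pass a second time during backpropagation.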

Hence, applying checkpointing to selected layers reduces memory usage during large model training, at the cost of extra computation in the backward pass.
