Managing dataset biases during Generative AI model training involves identifying, mitigating, and monitoring biases so that the model's outputs are fair. Here are the steps you can follow:

- Data Preprocessing: Balance datasets by undersampling overrepresented groups or augmenting underrepresented ones.

- Bias Detection: Use fairness metrics (for example, demographic parity) to identify biases in the training data and model outputs.

- Regularization: Incorporate fairness constraints or adversarial debiasing techniques.

- Post-Processing: Adjust outputs to reduce bias after model inference.
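As a minimal sketch of the Data Preprocessing step, the standard-library Python below rebalances a labeled corpus by group. It assumes each training example is a dict that carries a group label under a hypothetical key such as `"group"`; the function names are illustrative and do not come from any library. Duplicating examples is only the simplest stand-in for augmentation; in practice you might paraphrase or synthesize new examples instead.

```python
import random
from collections import Counter, defaultdict


def _split_by_group(samples, group_key):
    """Bucket samples by the value stored under group_key (a hypothetical field)."""
    by_group = defaultdict(list)
    for sample in samples:
        by_group[sample[group_key]].append(sample)
    return by_group


def oversample_by_group(samples, group_key, seed=0):
    """Randomly duplicate samples from smaller groups until every group
    is as large as the biggest one (simple random oversampling)."""
    rng = random.Random(seed)  # local RNG so results are reproducible
    by_group = _split_by_group(samples, group_key)
    target = max(len(items) for items in by_group.values())
    balanced = []
    for items in by_group.values():
        balanced.extend(items)
        # Draw the missing samples with replacement from the same group.
        balanced.extend(rng.choices(items, k=target - len(items)))
    rng.shuffle(balanced)
    return balanced


def undersample_by_group(samples, group_key, seed=0):
    """Randomly drop samples from larger groups until every group
    is as small as the smallest one (simple random undersampling)."""
    rng = random.Random(seed)
    by_group = _split_by_group(samples, group_key)
    target = min(len(items) for items in by_group.values())
    balanced = []
    for items in by_group.values():
        # Keep a random subset of size `target` without replacement.
        balanced.extend(rng.sample(items, target))
    rng.shuffle(balanced)
    return balanced


if __name__ == "__main__":
    # Hypothetical toy corpus: 8 examples tagged group "A", 2 tagged group "B".
    data = [{"text": f"a{i}", "group": "A"} for i in range(8)]
    data += [{"text": f"b{i}", "group": "B"} for i in range(2)]

    # Each group ends up with 8 samples after oversampling, 2 after undersampling.
    print(Counter(s["group"] for s in oversample_by_group(data, "group")))
    print(Counter(s["group"] for s in undersample_by_group(data, "group")))
```

Oversampling keeps every original example but can push the model to memorize the duplicated ones, while undersampling avoids duplicates at the cost of discarding data; which trade-off is acceptable depends on how small the minority groups are.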

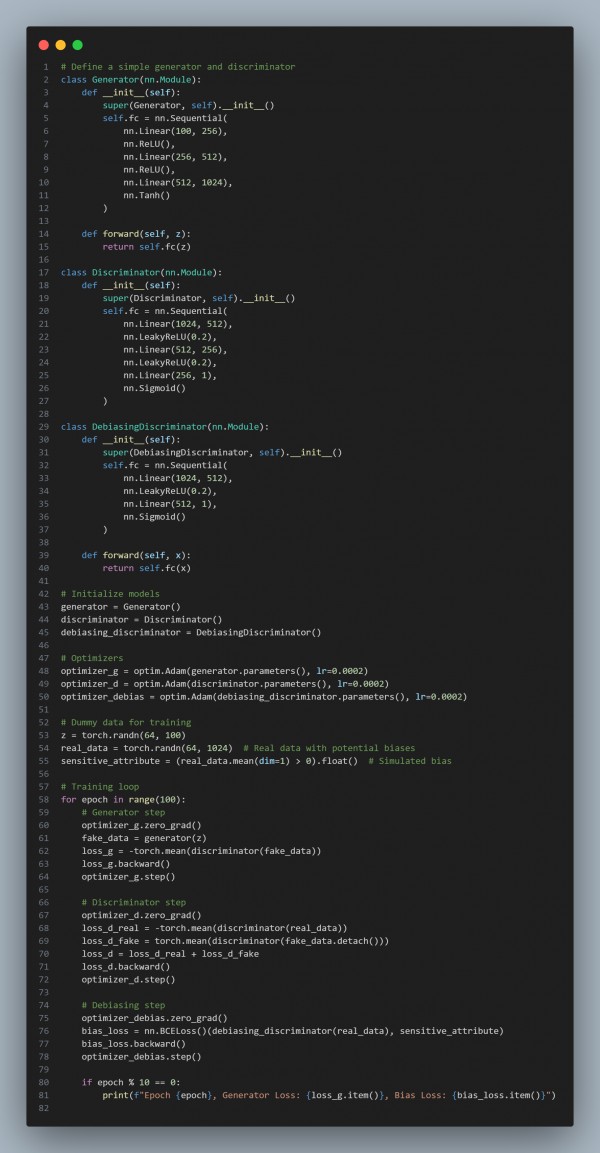

Here is the code snippet you can refer to:

In the code above, we use the following techniques:

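- Adversarial Debiasing: Use an auxiliary (adversary) model to penalize the generation of biased outputs, for example by training it to predict a protected attribute from the main model's outputs and penalizing the main model whenever the adversary succeeds.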

- Balanced Datasets: Augment or curate data to ensure diversity.

- Bias Metrics: Regularly monitor fairness using statistical or machine-learning fairness metrics.
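For the Bias Metrics point, a minimal standard-library sketch of two common group-fairness measures, demographic parity difference and disparate impact ratio, follows. It assumes you have already tagged each generated output with the group its prompt refers to and with a binary outcome from some scorer; the sentiment classifier mentioned in the comments and all function names are hypothetical.

```python
from collections import defaultdict


def positive_rates(groups, outcomes):
    """Fraction of positive outcomes (1s) observed for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(groups, outcomes):
    """Largest gap in positive rate between any two groups; 0.0 means parity."""
    rates = positive_rates(groups, outcomes)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(groups, outcomes):
    """Lowest positive rate divided by the highest; 1.0 means parity."""
    rates = positive_rates(groups, outcomes)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical audit: each output came from a prompt about group "A" or "B"
    # and is scored 1 if a (hypothetical) sentiment classifier judged it positive.
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    positive = [1, 1, 1, 0, 1, 0, 0, 0]

    print(positive_rates(groups, positive))                    # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_difference(groups, positive))     # 0.5
    print(round(disparate_impact_ratio(groups, positive), 3))  # 0.333
```

To monitor regularly, you could recompute these numbers on a fixed prompt set at each evaluation checkpoint and watch for drift. A commonly cited rule of thumb, the four-fifths rule, treats a disparate impact ratio below 0.8 as a warning sign.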

Hence, by proactively addressing dataset biases, you can help Generative AI models produce fairer and more inclusive outputs.

