To debug encoder-decoder bottlenecks in image captioning models on complex scenes, inspect attention maps, gradient flow, and feature activations with visualization techniques such as Grad-CAM and with layer-wise relevance propagation (LRP).

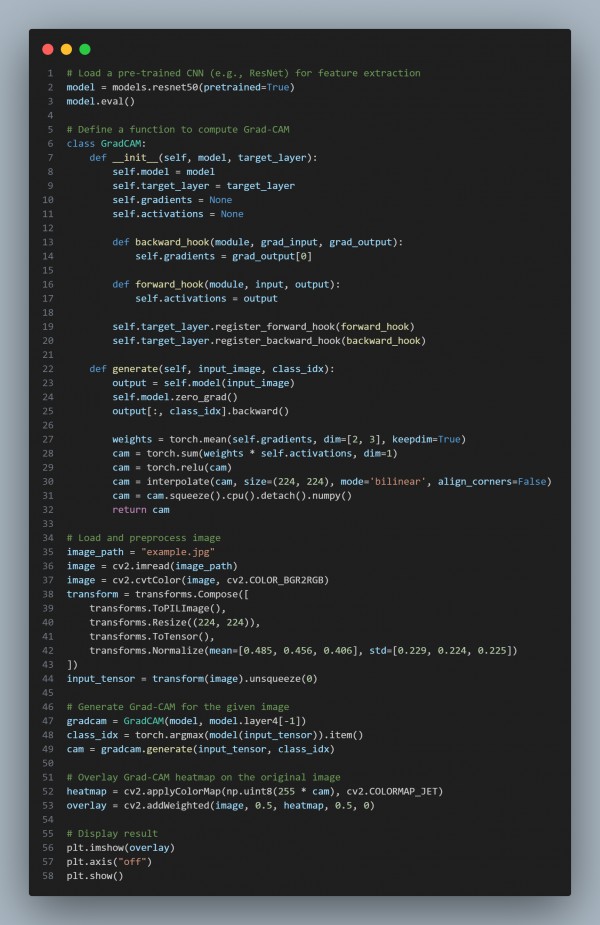

Here is the code snippet you can refer to:

The code above covers the following key points:

- Model Selection: Uses a pre-trained ResNet50 as the encoder for feature extraction.

- Grad-CAM Implementation: Captures activations and gradients from a target convolutional layer and weights each feature map by its spatially averaged gradient to estimate that map's importance.

- Image Preprocessing: Normalizes and resizes the input image to match the model's expected format.

- Heatmap Generation: Computes Grad-CAM and overlays the attention heatmap on the original image.

- Visualization: Displays the overlaid heatmap to identify bottlenecks in scene understanding.

Hence, Grad-CAM visualization shows which image regions drive the encoder's features, which helps locate encoder-side bottlenecks in image captioning models and guides improvements to the attention mechanism for complex scenes.
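Grad-CAM only inspects the encoder. The gradient-flow and feature-activation checks mentioned at the start can be sketched with forward hooks and per-block gradient norms. This is a minimal sketch assuming PyTorch. `TinyCaptioner` is a hypothetical toy model (small CNN encoder plus LSTM decoder with teacher forcing) that stands in for a real captioning model, and `attach_activation_stats` and `grad_norm_by_block` are illustrative helper names, not library APIs.

```python
import torch
import torch.nn as nn


class TinyCaptioner(nn.Module):
    """Hypothetical toy encoder-decoder used only to exercise the diagnostics."""

    def __init__(self, vocab_size=50, embed_dim=32, hidden_dim=64):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Conv2d(3, 16, 3, stride=2, padding=1), nn.ReLU(),
            nn.Conv2d(16, 32, 3, stride=2, padding=1), nn.ReLU(),
            nn.AdaptiveAvgPool2d(1), nn.Flatten(),
            nn.Linear(32, embed_dim),
        )
        self.embed = nn.Embedding(vocab_size, embed_dim)
        self.decoder = nn.LSTM(embed_dim, hidden_dim, batch_first=True)
        self.head = nn.Linear(hidden_dim, vocab_size)

    def forward(self, images, captions):
        # The image feature is the first decoder input; tokens 0..T-2 follow it,
        # so position t predicts captions[:, t].
        feats = self.encoder(images).unsqueeze(1)               # (B, 1, E)
        tokens = self.embed(captions[:, :-1])                   # (B, T-1, E)
        out, _ = self.decoder(torch.cat([feats, tokens], dim=1))  # (B, T, H)
        return self.head(out)                                   # (B, T, V)


def attach_activation_stats(model, module_types=(nn.ReLU, nn.Linear, nn.LSTM)):
    """Record mean, std and fraction of exact zeros of each matching module's output."""
    stats = {}

    def make_hook(name):
        def hook(module, inputs, output):
            if isinstance(output, tuple):  # nn.LSTM returns (output, (h, c))
                output = output[0]
            out = output.detach()
            stats[name] = {
                "mean": out.mean().item(),
                "std": out.std().item(),
                "zero_frac": (out == 0).float().mean().item(),  # dead-ReLU indicator
            }
        return hook

    handles = [m.register_forward_hook(make_hook(n))
               for n, m in model.named_modules() if isinstance(m, module_types)]
    return stats, handles


def grad_norm_by_block(model):
    """L2 norm of all parameter gradients, grouped by top-level submodule."""
    totals = {}
    for name, p in model.named_parameters():
        if p.grad is not None:
            block = name.split(".")[0]
            totals[block] = totals.get(block, 0.0) + p.grad.norm().item() ** 2
    return {block: total ** 0.5 for block, total in totals.items()}


if __name__ == "__main__":
    torch.manual_seed(0)
    model = TinyCaptioner()
    stats, handles = attach_activation_stats(model)
    images = torch.randn(4, 3, 64, 64)        # random stand-in images
    captions = torch.randint(0, 50, (4, 12))  # random stand-in token ids
    logits = model(images, captions)
    loss = nn.functional.cross_entropy(
        logits.reshape(-1, logits.size(-1)), captions.reshape(-1))
    loss.backward()
    for name, s in stats.items():
        print(f"{name:12s} mean={s['mean']:+.3f} std={s['std']:.3f} zero_frac={s['zero_frac']:.2f}")
    for block, norm in grad_norm_by_block(model).items():
        print(f"grad-norm {block:8s} {norm:.4e}")
    for h in handles:
        h.remove()
```

Reading these numbers is heuristic: an encoder gradient norm that stays orders of magnitude below the decoder's over many steps can indicate that the decoder is learning mostly from the caption tokens rather than the image, and a `zero_frac` close to 1 on a ReLU layer points to dead units that block gradient flow.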
