You can use tf.GradientTape to compute custom losses for generative models: the tape records the operations of the forward pass, and you then compute and apply the gradients manually.
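The basic pattern looks like the sketch below. It assumes TensorFlow 2.x. The tiny model, the random data, and the `custom_loss` function are hypothetical placeholders. Any differentiable loss you write can take their place.

```python
import tensorflow as tf

# Hypothetical toy model and optimizer, for illustration only.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(1),
])
optimizer = tf.keras.optimizers.Adam(learning_rate=1e-2)

def custom_loss(y_true, y_pred):
    # Hypothetical custom loss: mean squared error plus a small penalty on prediction size.
    return tf.reduce_mean(tf.square(y_true - y_pred)) + 0.01 * tf.reduce_mean(tf.square(y_pred))

x = tf.random.normal((32, 4))
y = tf.random.normal((32, 1))

with tf.GradientTape() as tape:
    y_pred = model(x, training=True)  # operations inside this block are recorded
    loss = custom_loss(y, y_pred)

# Differentiate the loss w.r.t. the model weights, then apply the update manually.
grads = tape.gradient(loss, model.trainable_variables)
optimizer.apply_gradients(zip(grads, model.trainable_variables))
```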

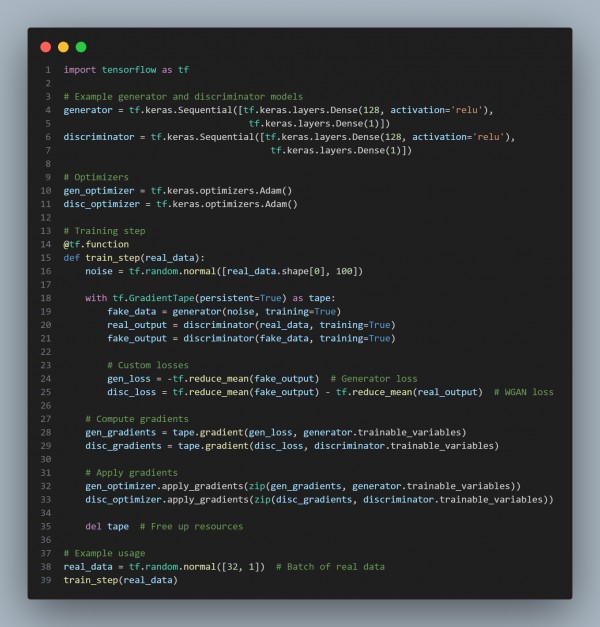

Here is the code snippet showing the implementation of a GAN:

The code above works in the following steps:

- Model Definitions: The generator and discriminator are simple sequential models for generating and evaluating data.

- Optimizers: Adam optimizers are used to update the generator and discriminator weights.

- Custom Losses: The losses follow the Wasserstein GAN formulation. The generator loss rewards samples that the discriminator (the critic) scores as real. The discriminator loss pushes the scores of real and generated data apart.

- GradientTape: Records the forward-pass operations so that the gradients of both the generator and discriminator losses can be computed.

- Training Step: Gradients are calculated and applied to update model parameters, enabling both models to improve during training.
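The steps above can be sketched as a small Wasserstein GAN training step. This is a minimal illustration, not the original snippet. It makes several assumptions: the layer sizes, `LATENT_DIM`, `DATA_DIM`, and the learning rates are arbitrary choices. The critic's Lipschitz constraint uses weight clipping with the hypothetical `CLIP_VALUE`, which is one standard WGAN option; a gradient penalty is a common alternative.

```python
import tensorflow as tf

LATENT_DIM = 16    # assumed noise size
DATA_DIM = 2       # assumed data dimensionality (e.g. 2-D points)
CLIP_VALUE = 0.01  # assumed weight-clipping bound for the critic

# Simple sequential models; sizes are illustrative assumptions.
generator = tf.keras.Sequential([
    tf.keras.Input(shape=(LATENT_DIM,)),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(DATA_DIM),
])
discriminator = tf.keras.Sequential([
    tf.keras.Input(shape=(DATA_DIM,)),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(1),  # no sigmoid: a WGAN critic outputs an unbounded score
])

gen_optimizer = tf.keras.optimizers.Adam(1e-4)
disc_optimizer = tf.keras.optimizers.Adam(1e-4)

def generator_loss(fake_scores):
    # The generator wants the critic to score generated samples highly.
    return -tf.reduce_mean(fake_scores)

def discriminator_loss(real_scores, fake_scores):
    # The critic wants high scores for real data and low scores for fakes.
    return tf.reduce_mean(fake_scores) - tf.reduce_mean(real_scores)

def train_step(real_data):
    noise = tf.random.normal((tf.shape(real_data)[0], LATENT_DIM))
    # One tape per model, so each loss is differentiated w.r.t. its own weights.
    with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:
        fake_data = generator(noise, training=True)
        real_scores = discriminator(real_data, training=True)
        fake_scores = discriminator(fake_data, training=True)
        g_loss = generator_loss(fake_scores)
        d_loss = discriminator_loss(real_scores, fake_scores)

    gen_grads = gen_tape.gradient(g_loss, generator.trainable_variables)
    disc_grads = disc_tape.gradient(d_loss, discriminator.trainable_variables)
    gen_optimizer.apply_gradients(zip(gen_grads, generator.trainable_variables))
    disc_optimizer.apply_gradients(zip(disc_grads, discriminator.trainable_variables))

    # Weight clipping keeps the critic approximately Lipschitz (original WGAN approach).
    for w in discriminator.trainable_variables:
        w.assign(tf.clip_by_value(w, -CLIP_VALUE, CLIP_VALUE))
    return g_loss, d_loss
```

In practice you would call `train_step` once per batch of real data. The original WGAN recipe updates the critic several times per generator update. Wrapping `train_step` in `@tf.function` usually speeds up training once the eager version works.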

With this pattern, you can use tf.GradientTape in TensorFlow to compute custom losses for generative models.
